Falcon Exposure Management’s AI-Powered Risk Prioritization Shows Organizations What to Fix First

As the attack surface expands and the number of vulnerabilities grows, organizations face a new crisis:…

AV-Comparatives, an independent cybersecurity software testing organization, has released the results of two key evaluations of…

WordCamp US 2025 is heading to vibrant Portland, Oregon, from August 26–29, 2025! Join fellow open…

Ready to cram 11 drives, dual 10 GbE + 2.5 GbE, and an 8-core Zen 4…

When I launched my first WordPress website, I wasn’t thinking about privacy laws. Like most beginners,…

IDC MarketScape vendor analysis model is designed to provide an overview of the competitive fitness of…

OVHcloud is on track to exceed €1 billion in revenue for its fiscal year 2025, following…

A slow or complicated checkout process can quietly hurt your WooCommerce store’s sales. I’ve seen it…

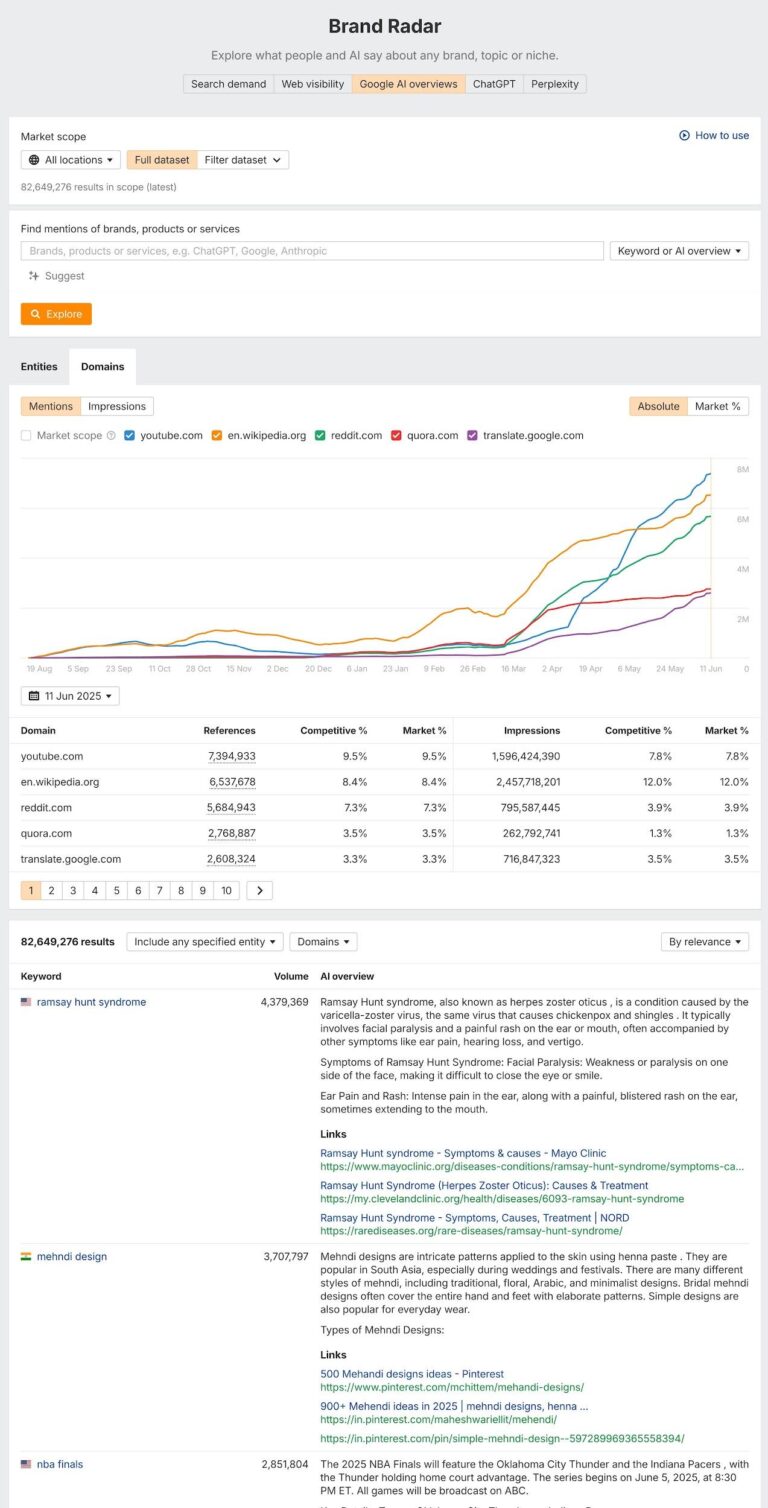

Going into this study, I suspected that domain-level link metrics would not be a good predictor…

A six-month-old artificial intelligence startup, Thinking Machines Lab, has secured $2 billion in funding, pushing its…

The iPhone 16E caused some controversy when it was first announced. 3 months of daily use…

For many small business owners, translating a website feels like a luxury they simply can’t afford…

The traditional model of cybersecurity, which relies heavily on securing the perimeter of trusted internal networks,…

🎙️ Episode 2 – BetweenTheClouds 🛠️ Featured Tools & Projects: VMware Cloud Foundation 9.0. Explore major upgrades like…

Your Smart TV is spying on you. It’s taking snapshots of everything you watch — sometimes…

God-tier gaming monitor or minor improvement over the previous version? Let’s talk about the LG UltraGear…

Have you ever clicked on an image on a website expecting it to zoom in, only…

Cloud is the new battleground, and more adversaries are joining the fight: New and unattributed cloud…