OpenAI cofounder Reid Hoffman says the company is better off with Sam Altman restored as CEO, and he was shocked that board members he used to serve alongside would think otherwise. Hoffman, who left OpenAI’s board in March after cofounding the competitor Inflection AI, offered his first comments on the recent chaos at OpenAI on stage at WIRED’s LiveWIRED 30th anniversary event in San Francisco on Tuesday.

“Surprise would be an understatement,” he said about his reaction to learning of Altman’s firing. After employees and investors revolted, Altman got his job back days later. “We are in a much better place for the world to have Sam as CEO. He’s very competent in that,” said Hoffman, who with Elon Musk and other wealthy tech luminaries formed the earliest vision for OpenAI when it was founded in 2015.

“I don’t think I have ever seen in all of corporation history where a board fires a CEO and something that rounds to 100 percent of the employees sign a letter saying, ‘Reinstate the CEO or we’re out of here,’” Hoffman said. “That’s history-making.”

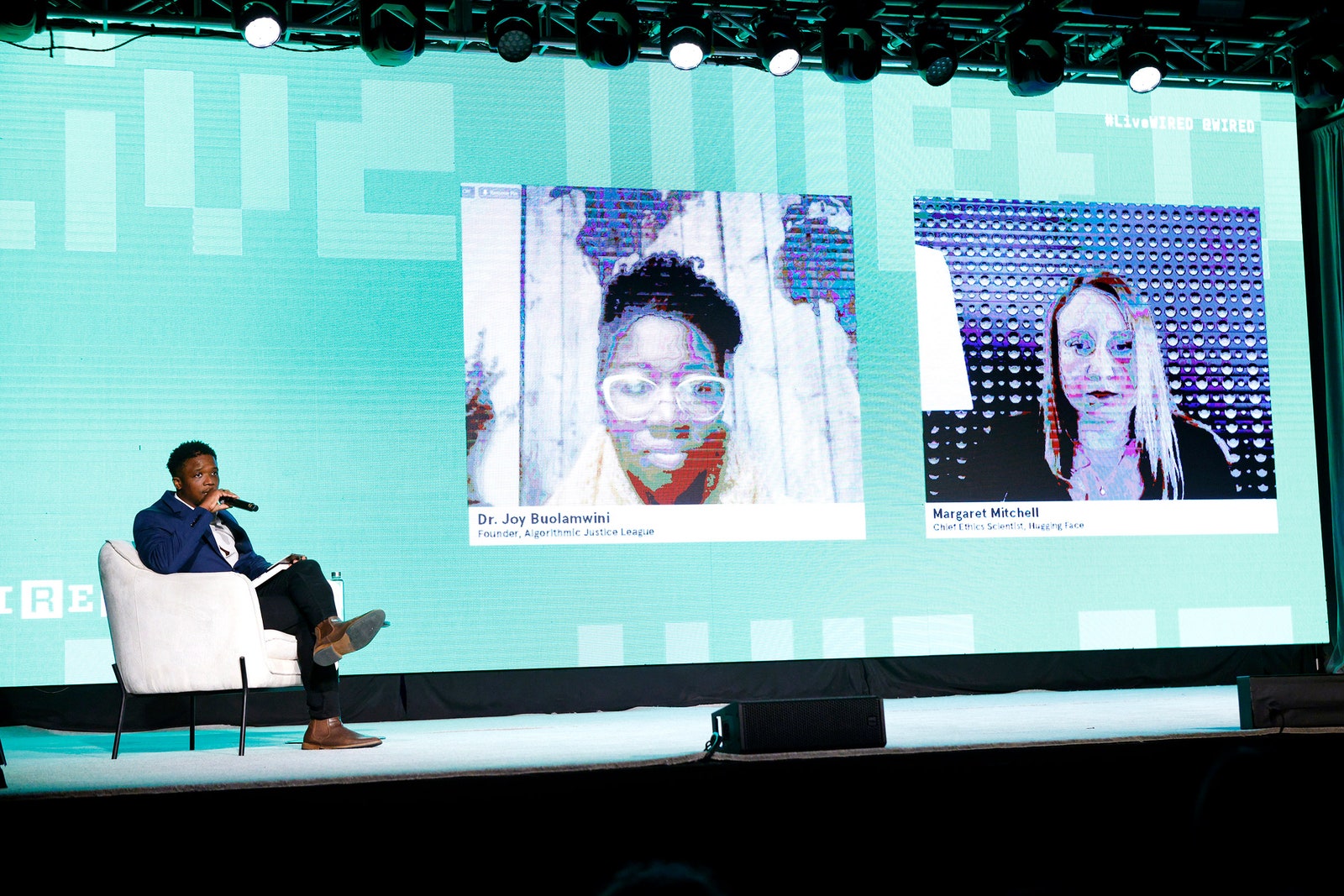

Hoffman spoke during a panel dubbed the “AI Optimist Club” on Tuesday. On a separate panel at LiveWIRED, computer scientist Joy Buolamwini said that while Altman presents like many tech leaders with a sense of “privileged optimism about what AI can do and how AI can be beneficial,” she’s welcomed finding common ground with him on issues such as the need for government regulation of AI.

Support for Altman from tech leaders is significant as OpenAI tries to move past the crisis, even as much remains unknown about the concerns that led four board members to oust him. The rapid uptake of technologies such as OpenAI’s ChatGPT is providing early signs of how powerful AI systems could reshape work and entertainment, with those in charge such as Altman having the greatest influence over the course of change. On Wednesday, Google announced Gemini, a multifaceted new AI model intended to counter OpenAI.

“Just because you can build the technology doesn’t mean that necessarily has a good outcome,” said Hoffman, a LinkedIn cofounder and investor at venture capital firm Greylock. “You have to shape it. You have to direct what you’re doing—being an intelligent shaper of it and driving in the right direction.”

Among the questions OpenAI will have to confront as it remakes its board, and presumably doubles down on its mission, is how to balance a focus on the profit potential and value of AI systems today with the prospect of future systems that become more intelligent than humans.

Some speakers at LiveWIRED said that issues of today, like the potential for ChatGPT to spread falsehoods or problematic racial, gender, or religious stereotypes, should take priority to help society correct what appears to be a concerning course.

The problems of tomorrow can wait, said Margaret Mitchell, research and chief ethics scientist at startup Hugging Face, which hosts open source AI projects. “It’s not like something is just suddenly going to fundamentally change and a lot of people die,” she said. “People are dying now, today, due to deployed AI drones that are making the decision to kill people.”

Hoffman and others said that there’s no need to pause development of AI. He called that drastic measure, for which some AI researchers have petitioned, foolish and destructive. Hoffman identified himself as a rational “accelerationist,” someone who knows to slow down when driving around a corner but who, presumably, is happy to speed up when the road ahead is clear. “I recommend everyone come join us in the optimist club, not because it’s utopia and everything works out just fine, but because it can be part of an amazing solution,” he said. “That’s what we’re trying to build towards.”

Mitchell and Buolamwini, who is artist-in-chief and president of the AI harms advocacy group Algorithmic Justice League, said that relying on company promises to mitigate bias and misuse of AI would not be enough. In their view, governments must make clear that AI systems cannot undermine people’s rights to fair treatment or humanity. “Those who stand to be exploited or extorted, even exterminated” need to be protected, Buolamwini said, adding that systems like lethal drones should be stopped. “We’re already in a world where AI is dangerous,” she said. “We have AI as the angels of death.”

Applications such as weaponry are far from OpenAI’s core focus on aiding coders, writers, and other professionals. The company’s terms bar the use of its tools for military and warfare purposes, although OpenAI’s primary backer and enthusiastic customer Microsoft has a sizable business with the US military. But Buolamwini suggested that companies developing business applications deserve no less scrutiny. As AI takes over mundane tasks such as composition, companies must be ready to reckon with the social consequences of a world that may offer workers fewer meaningful opportunities to learn the basics of a job, basics that may turn out to be vital to becoming highly skilled. “What does it mean to go through that process of creation, finding the right word, figuring out how to express yourself, and learning something in the struggle to do it?” she said.

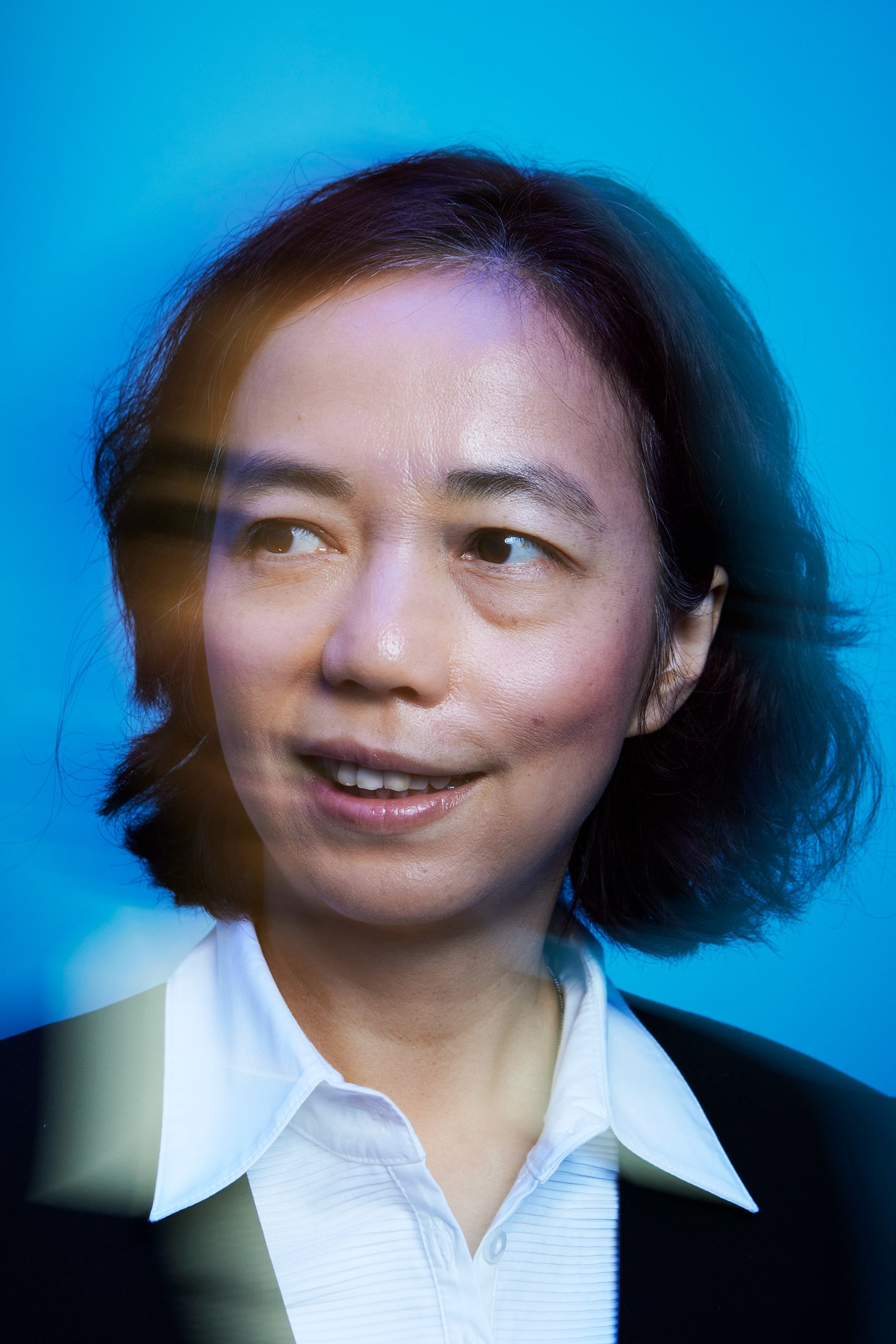

Fei-Fei Li, a Stanford University computer scientist who runs the school’s Institute for Human-Centered Artificial Intelligence, said the AI community has to be focused on its impacts on people, all the way from individual dignity to large societies. “I should start a new club called the techno-humanist,” she said. “It’s too simple to say, ‘Do you want to accelerate or decelerate?’ We should talk about where we want to accelerate, and where we should slow down.”

Li is one of the modern AI pioneers, having developed the computer vision system known as ImageNet. Would OpenAI want a seemingly balanced voice like hers on its new board? OpenAI board chair Bret Taylor did not respond to a request to comment. But if the opportunity arose, Li said, “I will carefully consider that.”

Reid Hoffman, the entrepreneur and co-founder of OpenAI, recently expressed his confidence in Sam Altman, the CEO of the artificial intelligence research laboratory. Hoffman’s endorsement comes as Altman returns to the helm of OpenAI, having been fired by the board and then reinstated days later after employees and investors revolted.

Hoffman, a LinkedIn cofounder and an investor at venture capital firm Greylock, voiced his support on stage at WIRED’s LiveWIRED event, calling Altman “very competent” as CEO. Hoffman believes that OpenAI, and the world, are in a much better place with Altman leading the company.

OpenAI, which was founded as a nonprofit and now runs a capped-profit arm overseen by a nonprofit board, has been at the forefront of research in the field. Its stated mission is to ensure that artificial general intelligence (AGI) benefits all of humanity.

Hoffman’s confidence in Altman stems from their shared belief in the potential of AGI to revolutionize various industries and improve human lives. Both entrepreneurs recognize the importance of responsible development and deployment of AI technologies. They understand that AGI has the power to shape our future and must be guided by values that prioritize safety, transparency, and fairness.

Altman, who cofounded OpenAI in 2015 and became its CEO in 2019, has already demonstrated his commitment to these principles. Under his leadership, OpenAI has actively engaged in partnerships with other organizations to collaborate on AI research and share knowledge. Altman has also emphasized the need for cooperation among different stakeholders to ensure that AGI is developed in a manner that benefits society as a whole.

Hoffman’s endorsement of Altman is not only a testament to his leadership abilities but also an acknowledgment of OpenAI’s significant achievements under his guidance. The organization has made substantial progress in AI research, including breakthroughs in natural language processing and reinforcement learning. OpenAI’s GPT-3 (Generative Pre-trained Transformer 3) model, which can generate human-like text, has garnered widespread attention and acclaim.

As Altman resumes the role of CEO, he faces the challenge of steering OpenAI towards its long-term goals. One of the key objectives is to ensure that AGI development remains safe and beneficial. Altman has emphasized the importance of avoiding a competitive race without proper safety precautions, advocating for cooperation and responsible practices within the AI community.

Hoffman’s confidence in Altman’s ability to navigate these challenges is well-founded. Altman’s track record as an entrepreneur and his deep understanding of the AI landscape make him an ideal leader for OpenAI. His commitment to ethical considerations, collaboration, and responsible development aligns with the organization’s core values.

With Hoffman’s endorsement, Altman’s leadership at OpenAI is poised to continue driving innovation in AI research while prioritizing safety and societal impact. As the world progresses towards an AI-driven future, having leaders who are dedicated to responsible development becomes increasingly crucial. OpenAI’s work under Altman’s guidance is likely to shape the trajectory of AGI and its impact on society for years to come.