2020 was a year like no other, but the silver lining of a changed world brought data quality, speed, and insights to the forefront for businesses. Here’s what’s coming next.

If 2021 is anything like 2020, we’ll see curveballs and things we didn’t expect. Let’s hope it’s not quite as wild as 2020…but in case it is, you can be prepared for the unexpected.

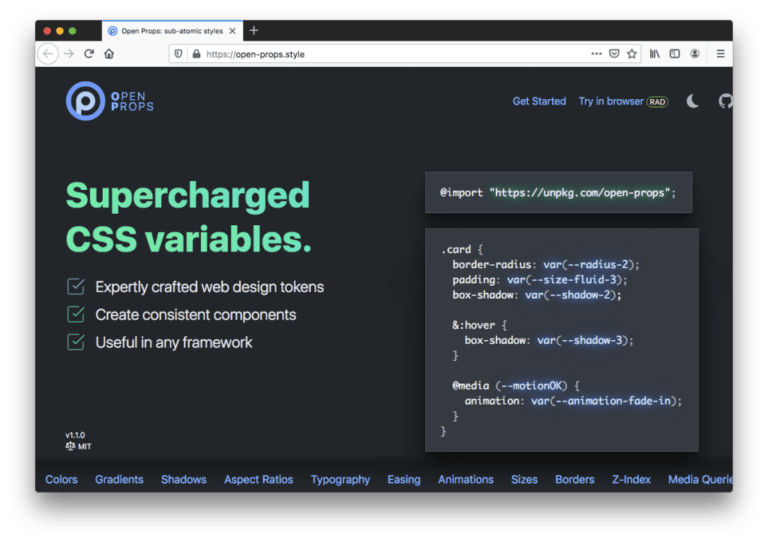

Google Cloud

In this year of unexpected, often unimaginable events and constant change, some common themes rose to the surface. On the technology front, it became apparent that what we considered advanced a few years ago is now as essential as electricity. Top-notch videoconferencing and always-on wireless networks are no longer just nice to have, and neither are the data platforms that support enterprises. Knowing what employees and customers needed in 2020, and knowing it immediately, made gathering and using available data essential.

Some of what matters to us at Google Cloud, such as free public datasets, quickly grew popular this year as people used them to track and understand the pandemic. In 2020, the concept of an unsiloed, data-first business, where anyone can access the data insights they need, also became more important. So did the understanding that data quality can make or break a business. Adapting almost instantly to changing customer needs also became a high priority for retailers and others. According to Gartner’s 2020 Magic Quadrant for Data Quality Solutions, poor data quality costs organizations an average of $12.9 million per year, a number that is likely to rise as business environments become increasingly digitized and complex.

We asked Google Cloud’s data leaders what they see coming as we enter a new year. Here are the top five trends to look out for in 2021.

1. Real-time data analytics will help you see the future

Debanjan Saha, VP, Google Cloud

With the massive shift toward the cloud comes a parallel shift toward stronger data assets and better data analytics. Future-looking platforms are being built around data analysis, and 2020 proved how important business agility is. One of the big leaps we’re seeing, and one that will only become more prevalent in 2021, is real-time analytics. Historical data can be informative, but many use cases require immediate data, especially when it comes to reacting to unexpected events, and that can make a huge difference to the bottom line. For example, being able to identify and stop a network security breach as it happens, using real-time data, could completely change how an organization mitigates risk.
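As a rough illustration of the security example above, real-time detection often comes down to evaluating each event as it arrives against a short sliding window. The minimal Python sketch below assumes a hypothetical stream of failed-login events keyed by source address and flags a source once it exceeds an arbitrary threshold within the window. The class name, window length, and threshold are illustrative assumptions, not part of any Google Cloud API.

```python
from collections import defaultdict, deque


class SlidingWindowAlert:
    """Flags a key (e.g. a source IP) that produces more than `threshold`
    events within the last `window_seconds` seconds.

    Hypothetical helper for illustration; window and threshold are assumptions.
    """

    def __init__(self, window_seconds: float, threshold: int):
        self.window_seconds = window_seconds
        self.threshold = threshold
        self._events = defaultdict(deque)  # key -> timestamps still in the window

    def observe(self, key: str, timestamp: float) -> bool:
        """Record one event; return True if the key is now over the threshold."""
        events = self._events[key]
        events.append(timestamp)
        # Evict timestamps that have fallen out of the sliding window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.threshold


if __name__ == "__main__":
    detector = SlidingWindowAlert(window_seconds=60, threshold=3)
    # Hypothetical failed-login events: (source address, seconds since start).
    stream = [("10.0.0.5", 1), ("10.0.0.5", 10), ("10.0.0.9", 12),
              ("10.0.0.5", 20), ("10.0.0.5", 30), ("10.0.0.5", 95)]
    for source, ts in stream:
        if detector.observe(source, ts):
            print(f"ALERT: {source} exceeded threshold at t={ts}s")
```

Each event only touches its own key’s queue, so the check is cheap enough to run inline as data arrives; a production deployment would more likely run similar logic inside a stream-processing engine than in a single process.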

While real-time data changes how quickly we gather data, perhaps the most unexpected yet incredibly useful area of data analytics we’ve seen is predictive analytics. Traditionally, data was gathered only from the physical world, so the only way to plan for what would happen was to look at what could physically be tested. But with predictive models and AI/ML tools like BigQuery ML, organizations can run simulations based on real-life scenarios and information, giving them data on circumstances that would be difficult, costly, or even impossible to test in physical environments.
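Simulation-based prediction can be illustrated without any particular product. The sketch below uses a bootstrap Monte Carlo simulation in plain Python (not BigQuery ML) to estimate how likely a hypothetical store is to run short of inventory within a planning horizon, by replaying randomly resampled days of observed demand. The demand figures, inventory level, and function name are assumptions for illustration only.

```python
import random
from typing import Sequence


def stockout_probability(
    historical_daily_demand: Sequence[int],
    starting_inventory: int,
    horizon_days: int,
    trials: int = 10_000,
    seed: int = 42,
) -> float:
    """Estimate the chance that demand exceeds inventory within `horizon_days`.

    Each trial builds one hypothetical future by resampling (with replacement)
    from observed daily demand: a simple bootstrap Monte Carlo simulation.
    Hypothetical helper; all inputs are illustrative assumptions.
    """
    if not historical_daily_demand:
        raise ValueError("need at least one observed day of demand")
    rng = random.Random(seed)  # fixed seed so results are reproducible
    stockouts = 0
    for _ in range(trials):
        remaining = starting_inventory
        for _ in range(horizon_days):
            remaining -= rng.choice(historical_daily_demand)
            if remaining < 0:  # demand exceeded what was on hand
                stockouts += 1
                break
    return stockouts / trials


if __name__ == "__main__":
    observed = [80, 95, 110, 70, 130, 90, 105]  # hypothetical units sold per day
    p = stockout_probability(observed, starting_inventory=2_800, horizon_days=30)
    print(f"Estimated stockout risk over 30 days: {p:.1%}")
```

Resampling observed days keeps the sketch free of distributional assumptions; a trained predictive model could replace the resampling step to generate the simulated futures instead.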


2. In 2021, you’ll demand more of your databases

Andi Gutmans, VP, Google Cloud

During this year of constant challenges, digital transformation accelerated. Enterprises are picking up the pace to make sure they can deliver for their customers, with data at the center of it all. For over 40 years, enterprises have been running databases on-premises. But over the next 18 months or so, we’ll continue to see a huge acceleration in deploying or migrating databases to the cloud, with 75% of databases expected to be there by 2022. This won’t just mean migrating databases as is; it will mean rethinking what the business needs in order to transform, which will likely include developing on cloud-native databases and integrating more closely with analytical and ML capabilities.

Databases have always been an essential part of every enterprise, but now more than ever they are critical to accelerating innovation and growth. Analytical and operational data are converging to support real-time business needs. Breaking down the silos between these teams and systems will help enterprises make faster decisions, identify new revenue opportunities, more easily meet changing compliance requirements, and reduce overall operating costs.

3. Analytics will no longer be dashboard-driven—they’ll come to you through AI-powered data experiences

Colin Zima, Director of Product Management, Looker

We’ve already started the move away from static dashboards that present business teams with a fixed set of data. Those dashboards have been a common form of business intelligence, but they require tradeoffs and don’t provide the kind of intelligence and visibility that modern enterprise workers need.

What’s coming next is data experiences, in which employees get the data they need within their existing workflows. The key to these experiences is that they aren’t one-size-fits-all; they’re tailored to what each user needs. So for many enterprises, that means moving away from making dashboards and pivot tables available to employees and toward building data products for internal use. This year, I’ve seen amazing examples, such as a touch-enabled interface designed specifically to let employees quickly see metrics about streaming service titles. This kind of approach brings a product experience to internal tools, solving employee problems faster and improving productivity.

The technology is finally available in the broader enterprise market to give the power of analytics to entire teams—that includes business analysts, sales teams, and others who don’t have specialized knowledge or training. Easy-to-use data and AI/ML solutions will combine with these new data experiences to enable real-time, data-driven decisions.

4. “Location, location, location” matters for data, too: Geospatial data will be key to unlocking enterprise transformation

Jen Bennett, Office of the CTO, Google Cloud

Much of the focus has been on big data and soaring data volumes, but in 2021, let’s not forget data variety, which continues to grow as a key enabler of business transformation.

Digital transformation often comes from looking at your business from a new angle, quite literally. The use of data from satellites and drones, along with data that has geolocation attributes, is becoming a key differentiator in understanding your business. In a supply chain, understanding the location of raw materials, products, or assets, combined with the ability to better predict logistics disruptions globally, becomes critical to business resilience. In sales and marketing, a better understanding of demand signals through geotagged information helps you optimize limited resources and grow your market reach cost-effectively. Mobility information became instrumental in managing COVID-19 and the broader disruption of the global pandemic.

As cities and governments turned to geospatial data in response to COVID-19, we also saw growing demand for, and innovative thinking about, what is possible when geospatial data is combined with other data such as retail sales. With the increasing focus on sustainability, geospatial data is also helping to unlock a number of sustainability initiatives, such as sourcing. Historically, geospatial data was reserved for experts; however, the democratization of geospatial data and analytics, along with global-scale compute, is making this once-specialized data accessible across the business.

In 2021, a business’s ability to fuse geospatial data with other data, and to collaborate across the organization and throughout its value chain globally, will prove to be a key differentiator.
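One common first step in fusing geospatial data with other data is a spatial join: attributing each geo-tagged record to the nearest location of interest. The sketch below assumes hypothetical store coordinates and geo-tagged sales given as (latitude, longitude) pairs in degrees, and uses the haversine great-circle distance on a spherical Earth (mean radius 6,371 km) with a brute-force nearest-store search. The function names and data are illustrative only.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius; treats the Earth as a sphere

LatLon = Tuple[float, float]  # (latitude, longitude) in degrees


def haversine_km(a: LatLon, b: LatLon) -> float:
    """Great-circle distance between two (lat, lon) points, in kilometers."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def sales_by_nearest_store(
    stores: Dict[str, LatLon],
    sales: List[Tuple[LatLon, float]],
) -> Dict[str, float]:
    """Attribute each geo-tagged sale to its nearest store and total per store.

    Hypothetical helper: a brute-force nearest-neighbor search over all stores.
    """
    if not stores:
        raise ValueError("need at least one store location")
    totals: Dict[str, float] = defaultdict(float)
    for location, amount in sales:
        nearest = min(stores, key=lambda name: haversine_km(stores[name], location))
        totals[nearest] += amount
    return dict(totals)


if __name__ == "__main__":
    stores = {"downtown": (40.7128, -74.0060), "uptown": (40.8075, -73.9626)}  # hypothetical
    sales = [((40.7150, -74.0010), 120.0), ((40.8000, -73.9600), 75.5),
             ((40.7200, -74.0000), 40.0)]
    print(sales_by_nearest_store(stores, sales))
```

The brute-force search compares every sale with every store, so it only suits small inputs; at scale, a spatial index or a database with built-in geography functions would typically perform this join instead.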

5. Data lakes will smarten up to support open and multicloud infrastructure

Debanjan Saha, VP, Google Cloud

Data comes from so many sources these days, and data types long kept separate are now stored and analyzed in the same place. Business data now meets log data, and structured, semi-structured, and unstructured data are all combined. Data sources span cloud providers and cross long-established boundaries.

The scale of cloud makes it possible to perform advanced data analytics on all of these data types. With further moves to open and multicloud computing, stronger data lakes and warehouses will be even more essential. They aren’t simply storage; they should be a pillar of an enterprise data strategy. In the cloud, these have taken the shape of either data warehouses, which store primarily structured data so that everything is easily searchable, or data lakes, which bring together all of a business’s data regardless of structure. The line between “lake” and “warehouse” keeps blurring: warehouses can now integrate unstructured data, and AI/ML solutions can make data lakes easier to navigate, ultimately leading to faster insights and collaboration.

Whatever curveballs 2021 brings, you can be prepared for the unexpected: take advantage of real-time data, expect a lot more from enterprise databases, and make sure everyone in your organization can get the data insights and reports they need on their own.

Take the next steps: Download “Google’s guide to building a data-driven culture” to learn how to foster a culture that improves agility, intelligence, insights, and trust. Or explore our fully managed database and smart analytics solutions and services that can help you unlock the potential of big data and manage enterprise data more reliably and securely.