Starting Tuesday, Facebook and Instagram users have a new way to try to get offending Facebook posts removed. The oversight board, a court-like body that the company has created to handle its trickiest content moderation decisions, has announced that users can now appeal decisions made by Facebook to leave posts up.

It’s a big expansion in scope. For the first few months of the oversight board’s operations, users have only been able to appeal to the board if they thought Facebook had wrongly taken down their own posts. Now the oversight board is rolling out a process that lets users challenge decisions by Facebook’s content moderation team to leave content up. That could include anything from content a user has flagged because they think it’s a scam to content they believe to be hate speech.

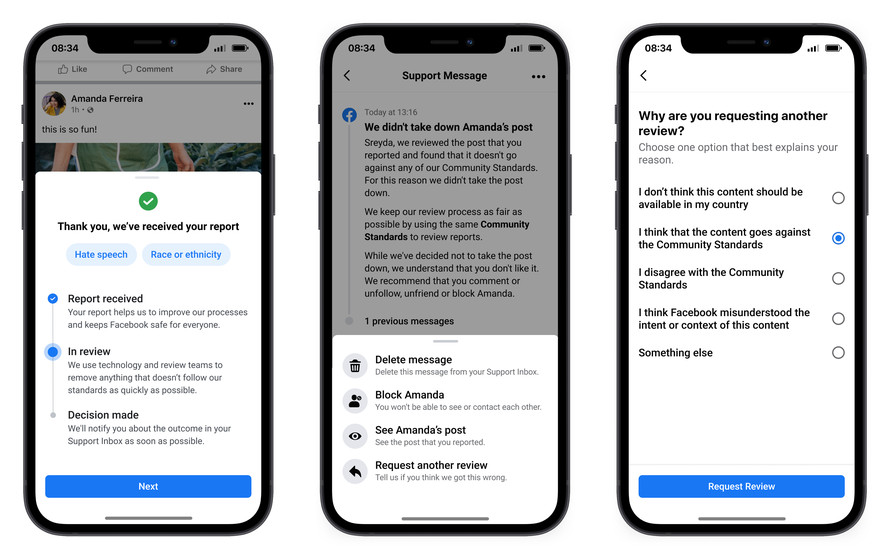

Here’s how it works: To get the oversight board involved in appealing a decision, users first need to report content that they see to Facebook, and then exhaust their options to get the content removed through Facebook’s existing review systems. After Facebook issues its final decision, users are notified. This is where the oversight board can get involved.

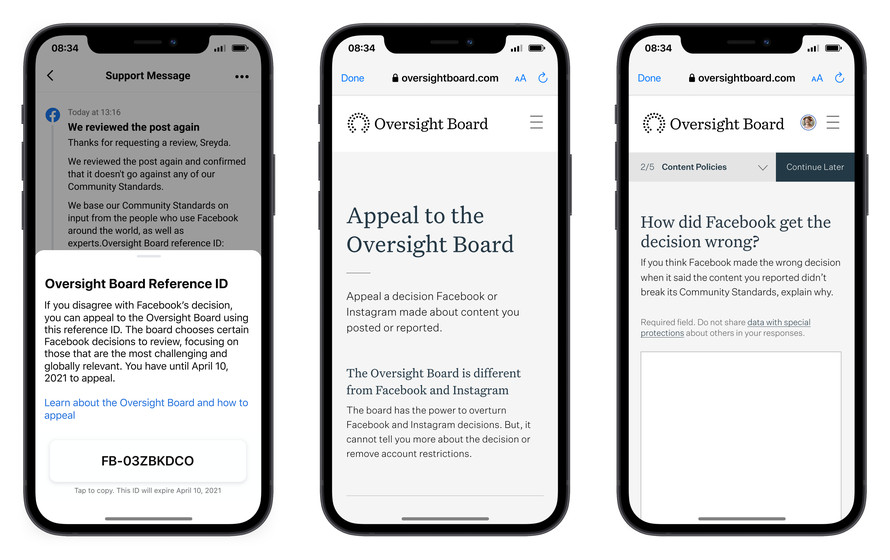

If Facebook decides to keep up a post or comment that a user reported, that user will receive a special “Oversight Board Reference ID” and a deadline to appeal the decision to the oversight board. To file an appeal, users log on to the oversight board’s website with their Facebook account and enter that ID when writing up their request.

If it decides to take on the case, the oversight board will designate a panel of five of its members. (Currently, the board has 20 members, though it eventually wants to expand to 40.) After those members deliberate, they’ll issue a draft decision, which the entire board then reviews. If a majority of the board supports the ruling, it’s shared with Facebook, which has said it will respect whatever decision the board makes. In other words, the board’s decisions are supposed to be binding. The board can also issue recommendations based on the issues and questions raised in the case, but those are nonbinding, meaning Facebook doesn’t have to follow them.

The full process is laid out in the board’s bylaws.

A clear downside to this workflow is that the odds the oversight board will take up any given appeal are probably low. The body says that since it began operations last October, it has received more than 300,000 user appeals, but it has issued only a handful of decisions. If you appeal a decision and your case isn’t taken up, you’re unlikely to find out why.

Still, it’s notable that Facebook’s oversight board is expanding its scope. Some of the most contentious moderation decisions involve content that Facebook has chosen to leave up.

“Think of the Nancy Pelosi cheapfake video in which footage of the speaker of the House was edited misleadingly so that she appeared intoxicated,” Evelyn Douek, a content moderation expert at Harvard Law School, wrote in Lawfare last year, “or hate speech in Myanmar, or the years that Facebook hosted content from Infowars chief Alex Jones before finally deciding to follow Apple’s lead and remove Jones’s material.”

The announcement comes as the oversight board continues to try to bolster its own legitimacy and introduce itself to Facebook’s billions of users. Some experts have criticized the board for its limited powers, pointing out that it makes decisions post by post and has no authority over Facebook’s overall design, such as the algorithms that decide what gets the most attention in people’s News Feeds. Others have said the oversight board is essentially an exercise in public relations and can’t provide the oversight that the company requires.

The “Real Facebook Oversight Board,” a group of scholars and activists who have criticized the company and the oversight board, said that Tuesday’s announcement reflects how “Facebook is washing its hands of the toughest decisions the company itself should be making.” The group added, “And rather than address content moderation issues across its platforms, it’s turning increasingly to adjudicating random, high-profile cases as cover for the company’s failings, instead of seriously grappling with the systemic content moderation crisis that its algorithms and leadership have created.”

At the same time, attention will remain on the oversight board, especially as people await its upcoming ruling on whether to uphold Facebook’s decision to suspend Donald Trump from the platform. Trump frequently posted content that Facebook decided to keep up, despite outcry from civil rights groups, Facebook employees, and users.

Open Sourced is made possible by Omidyar Network. All Open Sourced content is editorially independent and produced by our journalists.