In my last article, we went over how to set up a web app that serves chunks and bundles of CSS and JavaScript from CloudFront. We integrated it into Vite so that when the app runs in a browser, the assets requested from the app’s root HTML file would pull from CloudFront as the CDN.

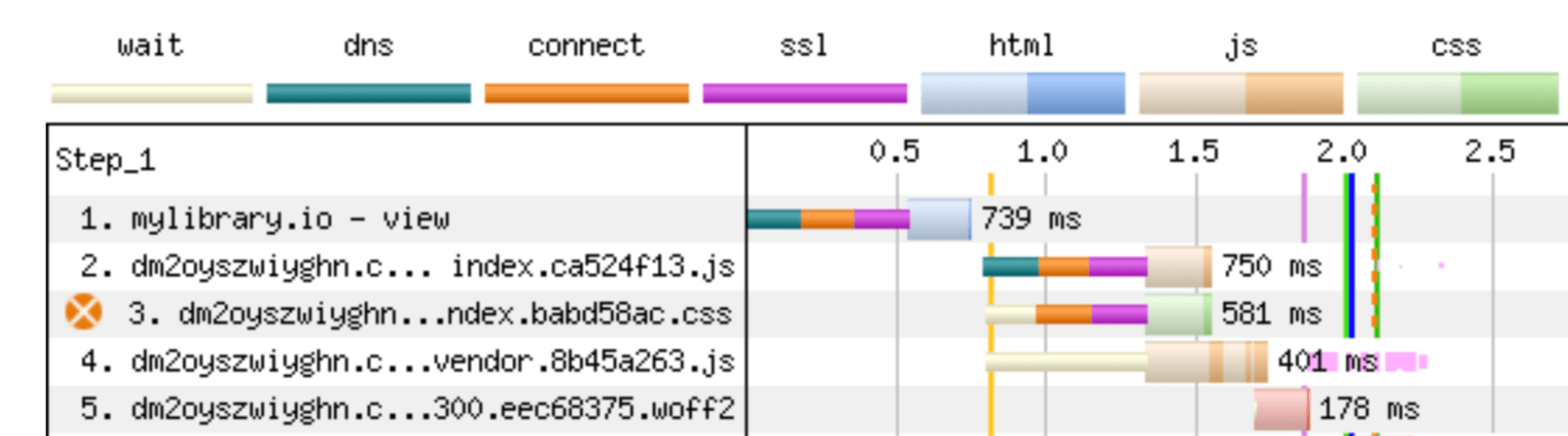

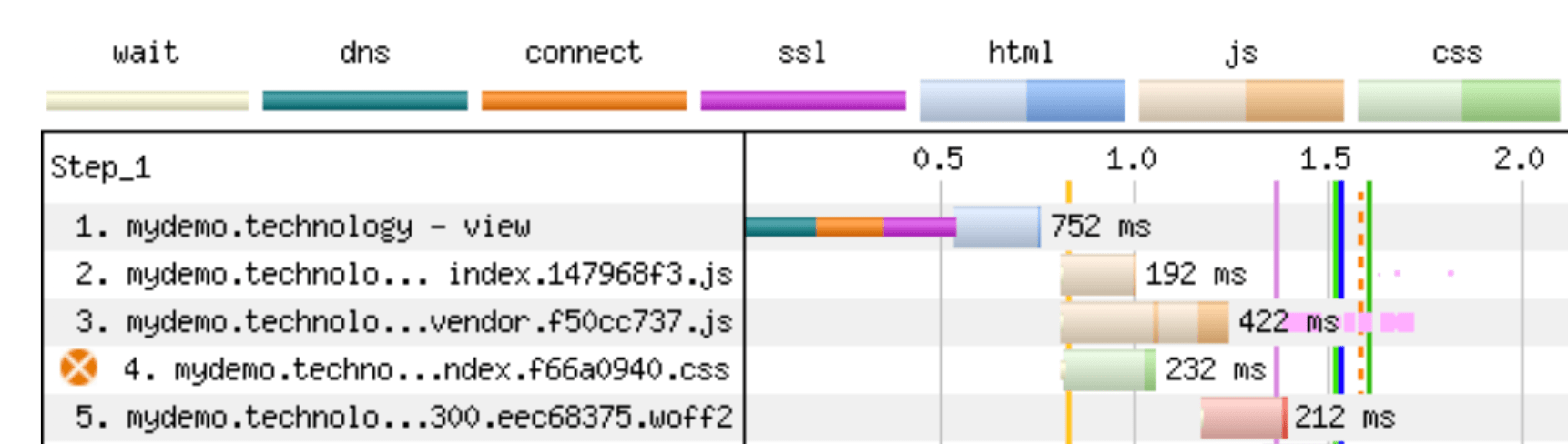

While CloudFront’s edge caching does offer benefits, serving your app’s resources from these multiple locations is not without a cost of its own. Let’s take a look at a WebPageTest trace of my own web app, running with the configuration from the last blog post.

This post will show you how to get around that connection cost. We’ll walk through how to host the entire web app on CloudFront and have CloudFront forward (or “proxy”) non-cacheable requests for data, auth, etc., onto our underlying web server.

Note that this is substantially more work than what we saw in the last article, and the instructions are likely to be different for you based on the exact needs of your web app, so your mileage may vary. We’ll be changing DNS records and, depending on your web app, you may have to add some cache headers in order to prevent certain assets from ever being cached. We’ll get into all of this!

You may be wondering whether the setup we covered in the last article even offers any benefits, given what we’re doing in this one. With that long connection time, would we have been better off forgoing the CDN and serving all our assets from the web server instead? I measured this with my own web app, and the CDN version, above, was indeed faster, but not by a lot. The initial LCP page load was about 200-300ms faster. And remember, that’s just for the initial load. Once this connection has been set up, edge caching should add much more value for all your subsequent, asynchronously loaded chunks.

Setting up our DNS


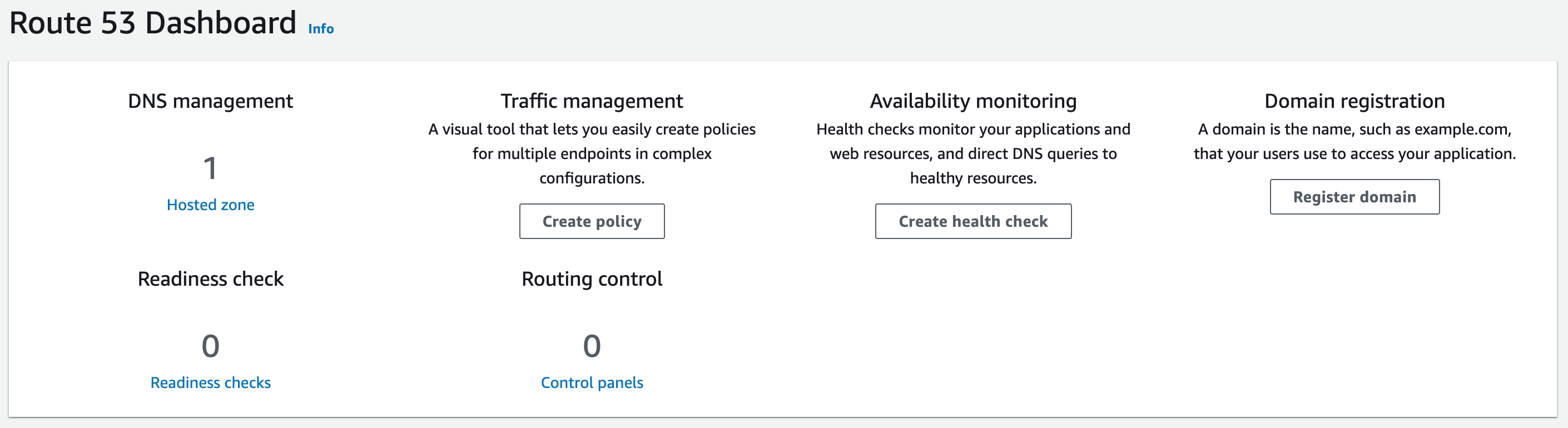

Our end goal is to serve our entire web app from CloudFront. That means when we hit our domain, we want the results to come from CloudFront instead of whatever web server it’s currently linked to. That means we’ll have to modify our DNS settings. We’ll use AWS Route 53 for this.

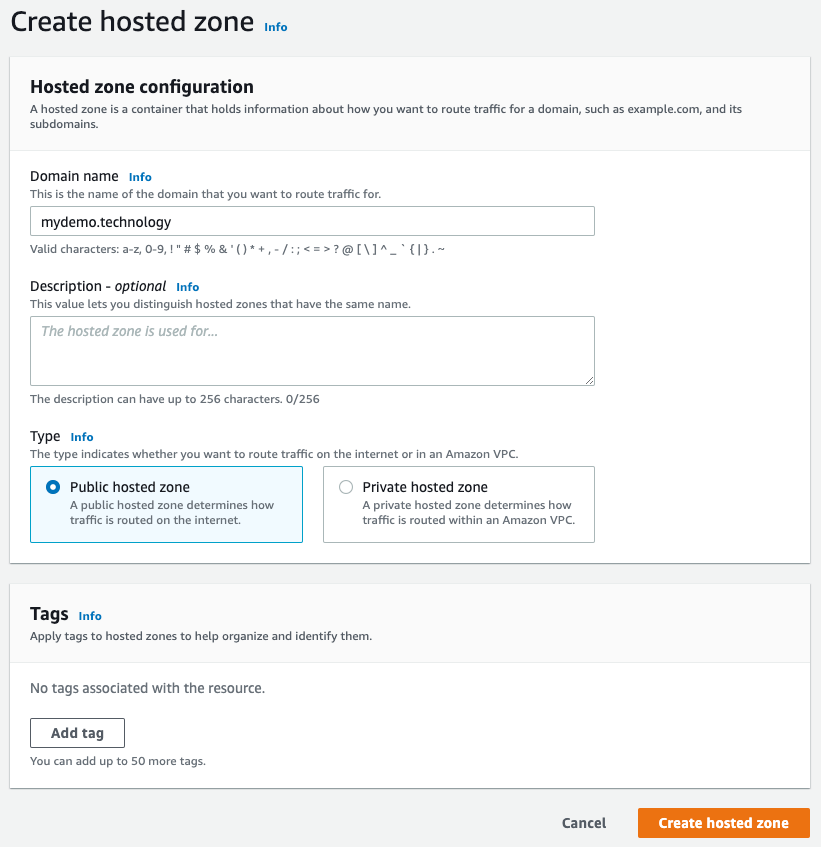

I’m using mydemo.technology as an example, which is a domain I own. I’ll show you all the steps here. But by the time you read this, I’ll have removed this domain from my web app. So, later when I start showing you actual CNAME records, and similar, those will no longer exist.

Go to the Route 53 homepage, and click on hosted zones:

Click Create hosted zone and enter the app’s domain:

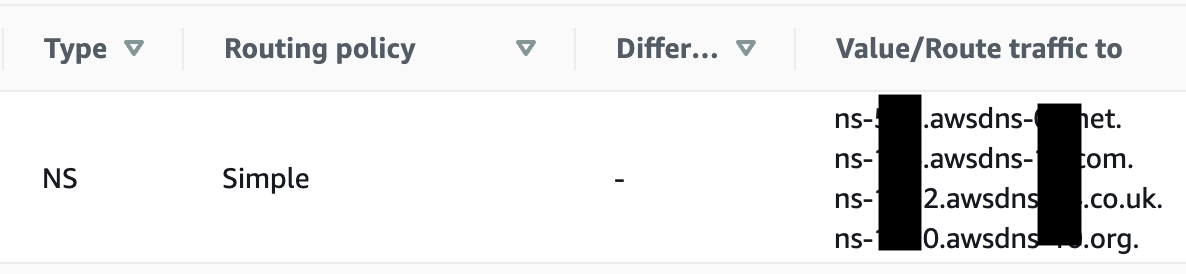

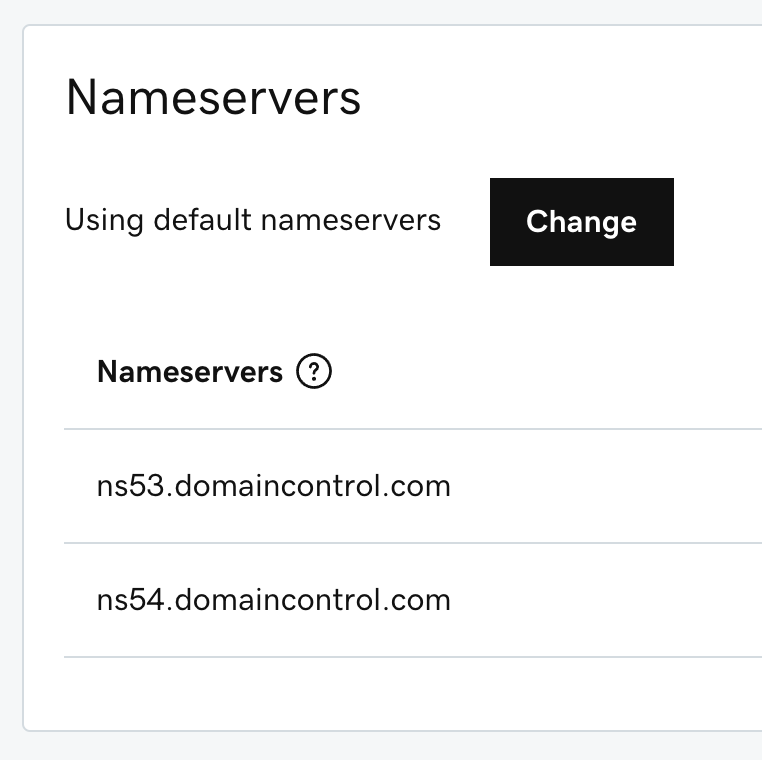

We haven’t really accomplished anything yet. We told AWS we want it to manage this domain for us, and AWS gave us the name servers it’ll route our traffic through. To put this into effect, we need to go to wherever our domain is registered. There should be a place for you to enter in your own custom name servers.

Note that my domain is registered with GoDaddy and that is reflected in the screenshots throughout this article. The UI, settings, and options may differ from what you see in your registrar.

Warning: I recommend writing down the original name servers as well as any and all DNS records before making changes. That way, should something fail, you have everything you need to roll back to how things were before you started. And even if everything works fine, you’ll still want to re-add any other records, such as MX records, into Route 53.

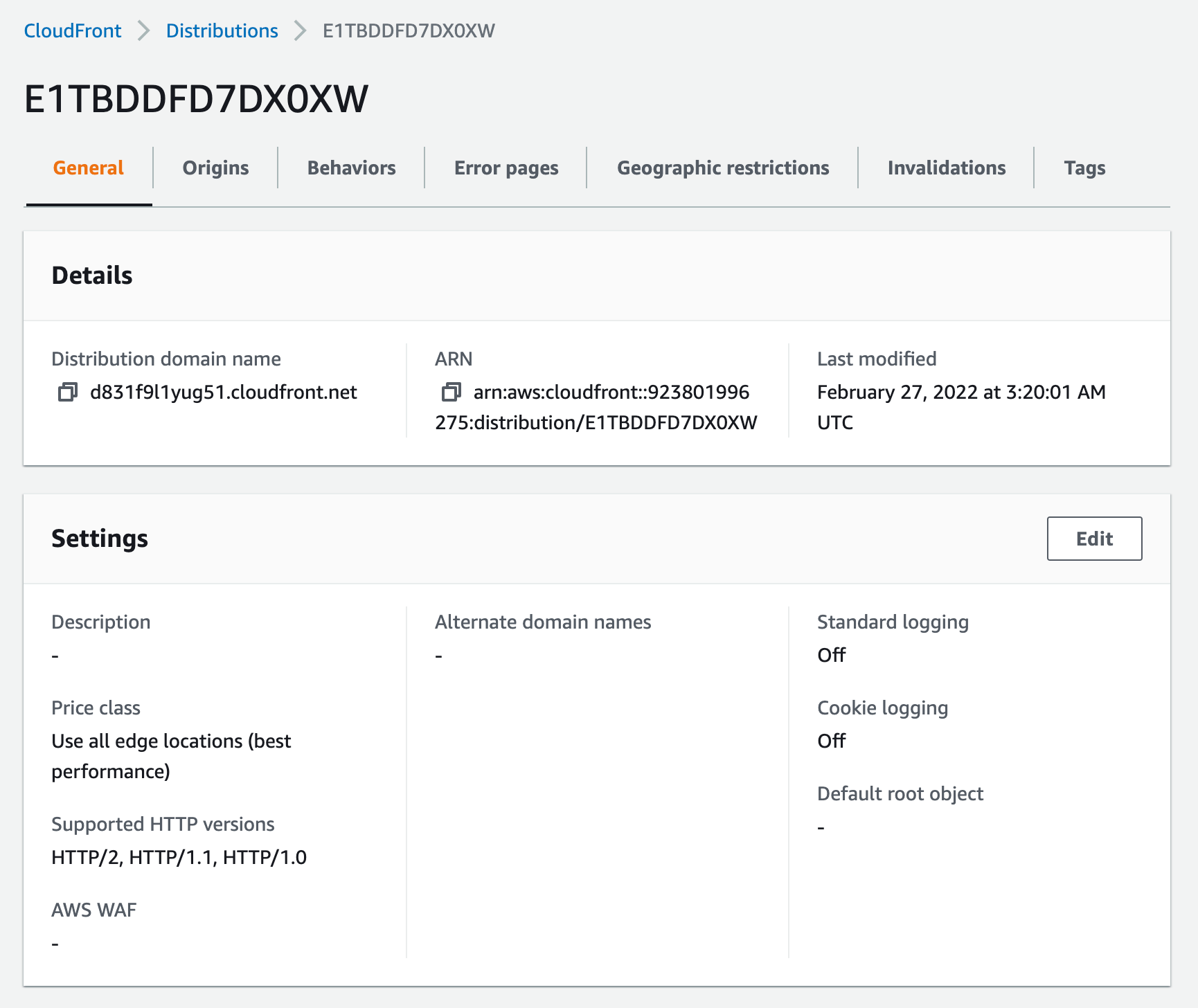
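If you’d rather not guess whether the name server change has taken effect, a small Node script can compare what DNS currently reports against what Route 53 gave you. This is only a sketch: it assumes Node’s built-in `dns` module, and the function names and the name servers in the usage note are hypothetical placeholders for the values shown in your own hosted zone.

```javascript
const dns = require("node:dns").promises;

// Normalize a name server for comparison: lowercase, no trailing dot.
function normalizeNs(name) {
  return name.trim().toLowerCase().replace(/\.$/, "");
}

// True when both lists contain the same name servers, in any order.
function sameNameServers(expected, actual) {
  const a = expected.map(normalizeNs).sort();
  const b = actual.map(normalizeNs).sort();
  return a.length === b.length && a.every((ns, i) => ns === b[i]);
}

// Looks up the NS records currently visible for `domain` and compares them
// with the name servers listed in the Route 53 hosted zone.
async function delegationIsLive(domain, route53NameServers) {
  const current = await dns.resolveNs(domain);
  return sameNameServers(route53NameServers, current);
}
```

You would call something like `delegationIsLive("your-app.net", ["ns-1.example.org", "ns-2.example.net"])` with your real values. Keep in mind that your local resolver may still hold the old records for a while, so a `false` result right after the change is not necessarily a problem.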

Setting up a CloudFront distribution

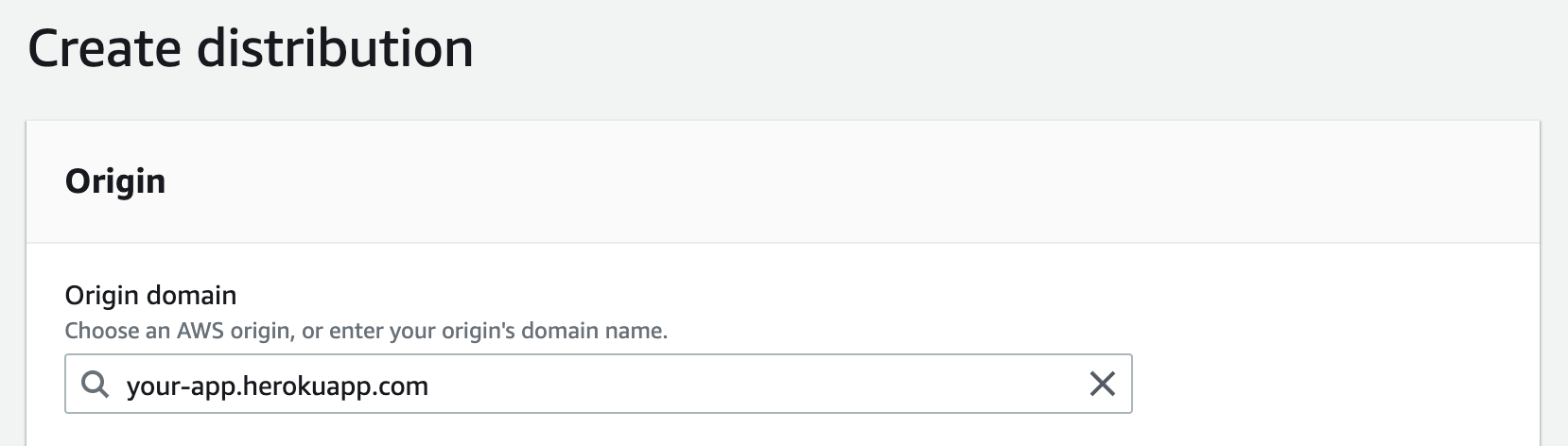

Let’s make a CloudFront distribution to host our web app. We covered the basics in the last post, so we’ll get right to it. One big change from last time is what we enter for the origin domain. Do not put the top-level domain, e.g. your-app.net. What you need is the underlying domain where your app is hosted. If that’s Heroku, then enter the URL Heroku provides you.

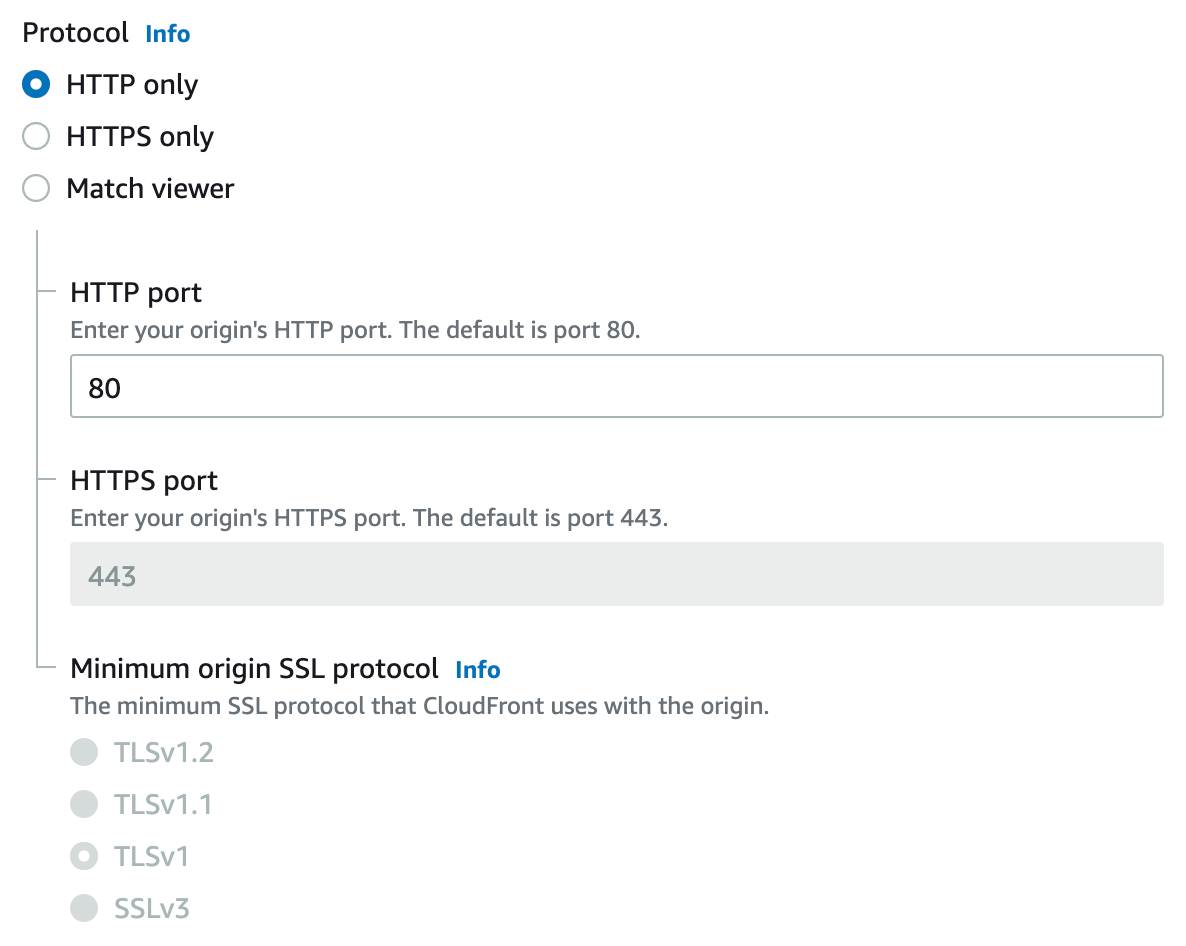

Next, be sure to change the default protocol if you plan to use this site over a secure HTTPS connection:

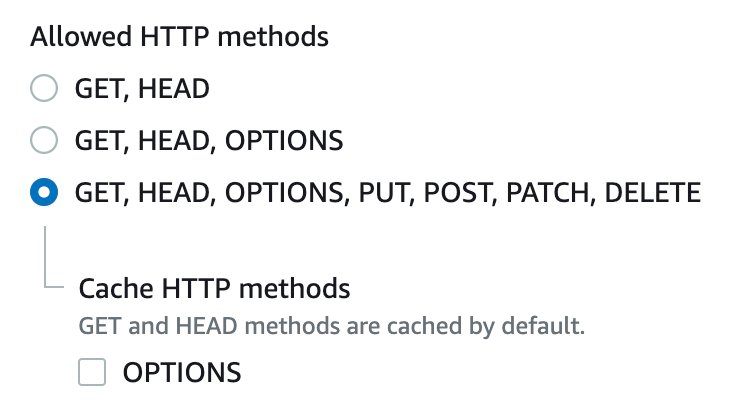

This part is crucial. If your web app is running authentication, hosting data, or anything else, be sure to enable other verbs besides GET. If you skip this part, then any POST requests for authentication, mutating data, etc., will be rejected and fail. If your web app is doing nothing but serving assets and all those things are handled by external services, then outstanding! You have a great setup, and you can skip this step.

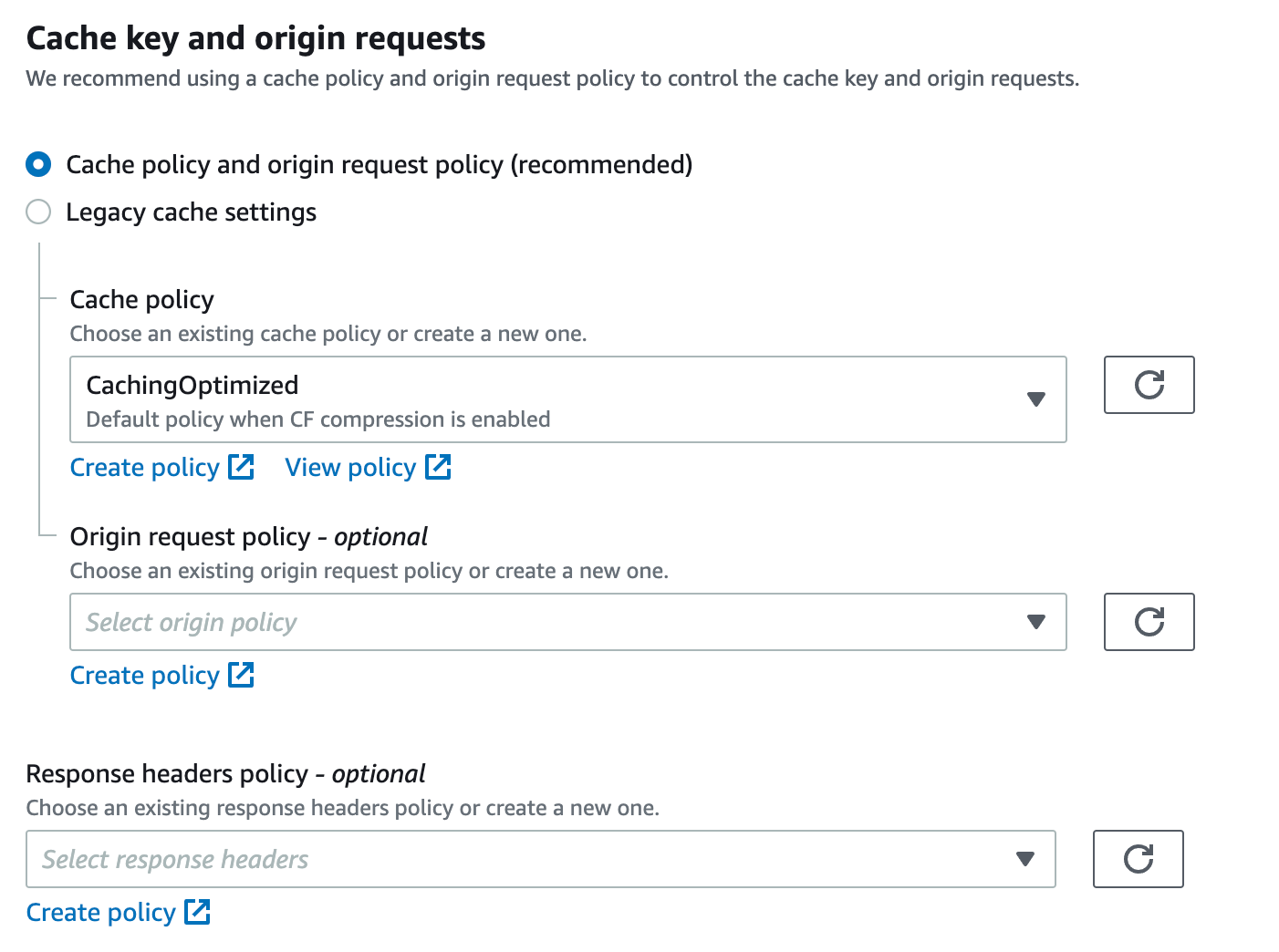

We have to make quite a few changes to the cache key and origin requests settings compared to last time:

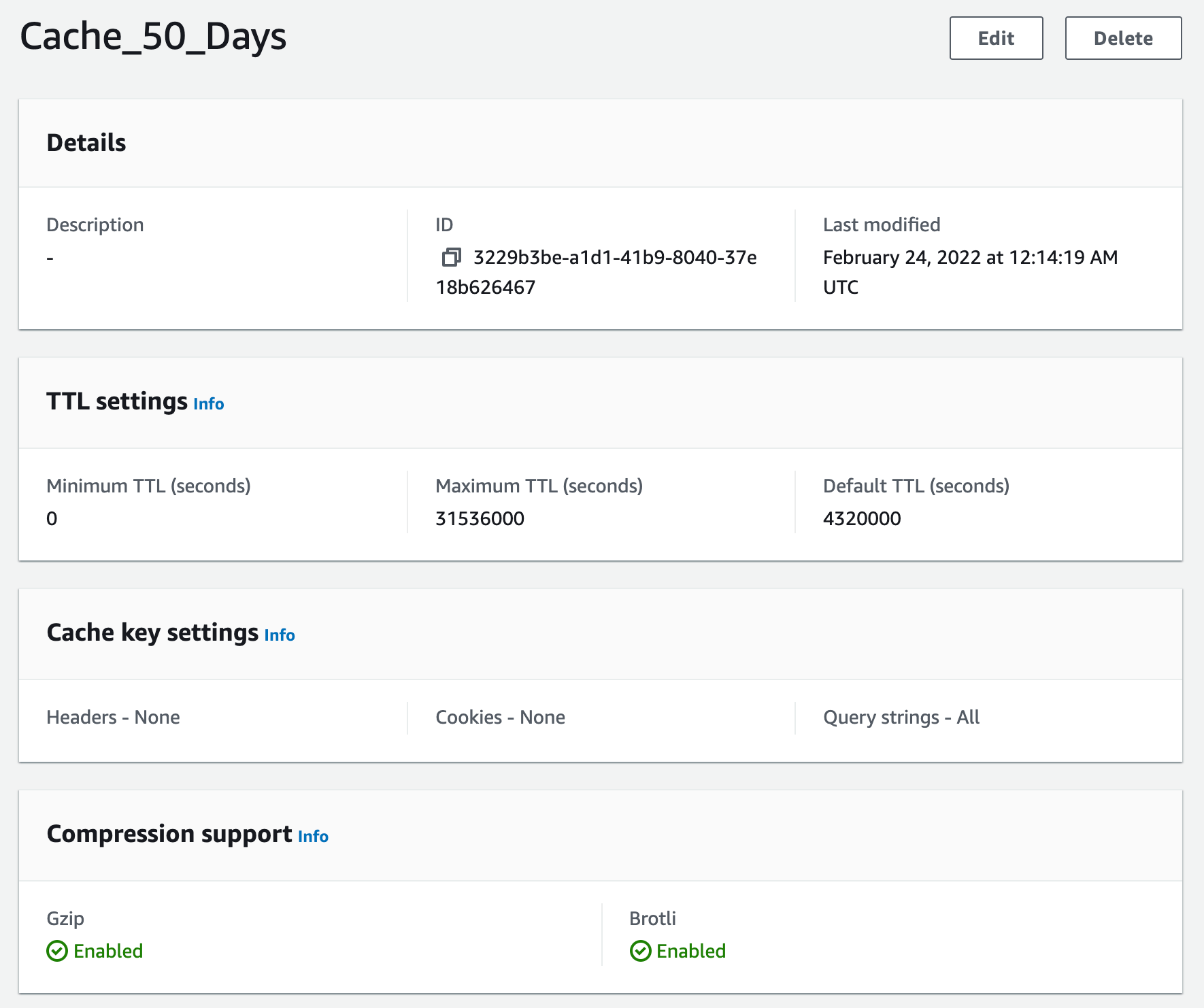

We need to create a cache policy with a minimum TTL of 0, so that any non-caching headers we send back are properly respected. You may also want to enable all query strings. I was seeing weird behavior when multiple GraphQL requests went out together with different query strings, which were ignored, causing all these requests to appear identical from CloudFront’s perspective.

My policy wound up looking like this:

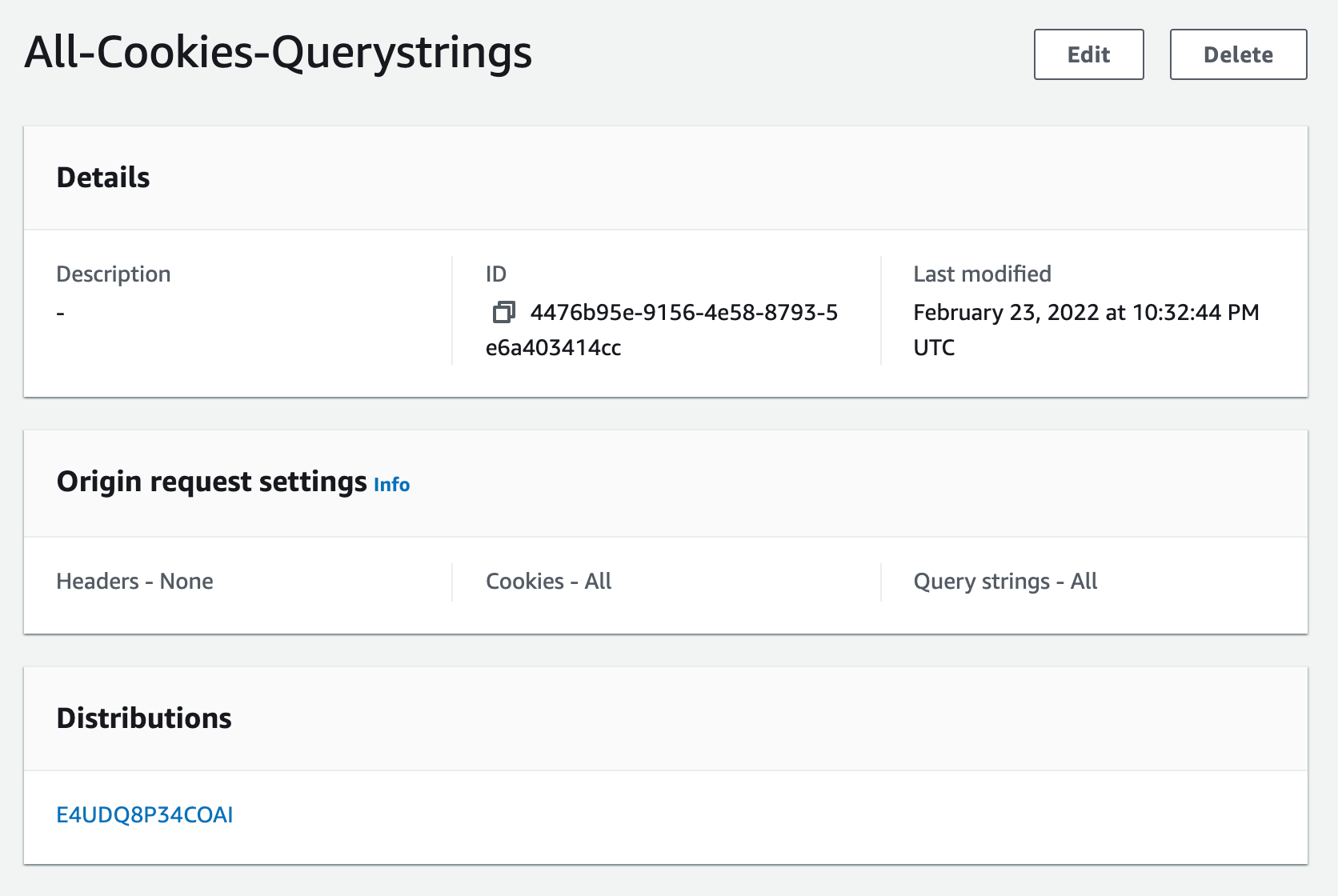
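To see why ignored query strings cause trouble, here’s a toy model of a cache key. It is not CloudFront’s actual algorithm, just an illustration under the assumption that the key is the host and path, plus the query string only when the policy includes it. The `cacheKey` function and the example URLs are made up for this sketch.

```javascript
// Toy model of a CDN cache key. `queryStrings` mirrors the cache policy
// setting: "none" ignores the query string, "all" includes it.
function cacheKey(requestUrl, queryStrings) {
  const url = new URL(requestUrl);
  const query = queryStrings === "all" ? url.search : "";
  return url.host + url.pathname + query;
}

// Two different GraphQL queries sent as GET requests (hypothetical URLs).
const books = "https://your-app.net/graphql?query=%7Bbooks%7D";
const authors = "https://your-app.net/graphql?query=%7Bauthors%7D";

// With query strings ignored, both requests share one cache entry.
console.log(cacheKey(books, "none") === cacheKey(authors, "none")); // true

// With all query strings in the key, each query gets its own entry.
console.log(cacheKey(books, "all") === cacheKey(authors, "all")); // false
```

When the two keys collide, whichever response is cached first can be served for both requests, which matches the weird behavior described above.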

For an origin request policy, if needed, we should make sure to send query strings and cookies for things like authentication and data queries to work. To be clear, this determines whether cookies and query strings will be sent from CloudFront down to your web server (e.g. Heroku, or similar).

Mine looks like this:

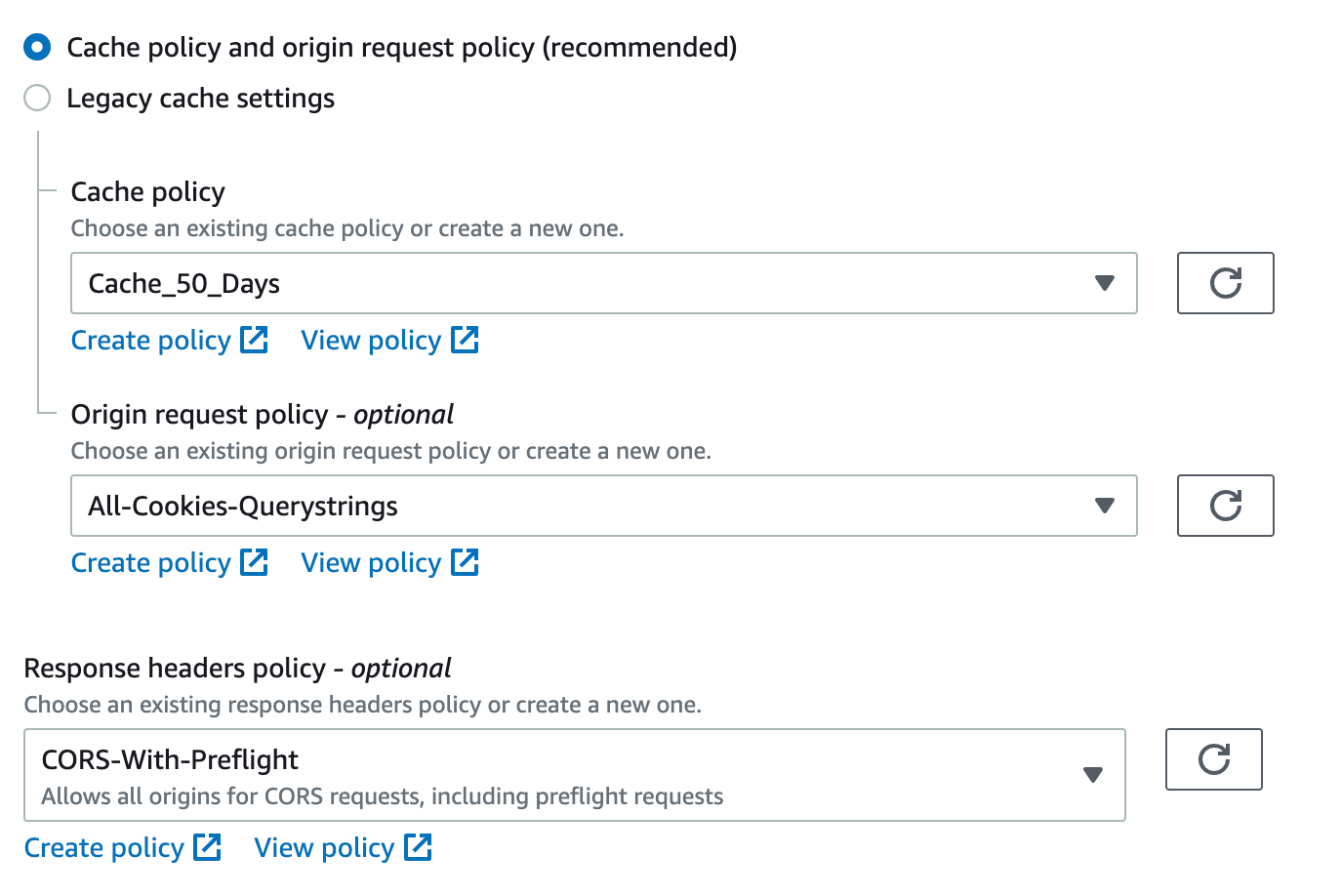
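If you script your infrastructure rather than clicking through the console, the same settings can be written down as data. The sketch below builds an object in the shape of CloudFront’s `OriginRequestPolicyConfig` structure, with cookies and query strings forwarded. The function name and policy name are hypothetical, and leaving viewer headers out is my assumption here, not necessarily what your app needs.

```javascript
// Builds an origin request policy config that forwards all cookies and all
// query strings from CloudFront to the origin web server.
function originRequestPolicyConfig(name) {
  return {
    Name: name,
    Comment: "Forward cookies and query strings to the origin",
    // Assumption: no viewer headers are forwarded. Adjust if your app needs some.
    HeadersConfig: { HeaderBehavior: "none" },
    CookiesConfig: { CookieBehavior: "all" },
    QueryStringsConfig: { QueryStringBehavior: "all" },
  };
}

// "my-app-origin-requests" is a placeholder name.
const policy = originRequestPolicyConfig("my-app-origin-requests");
console.log(JSON.stringify(policy, null, 2));
```

An object like this could then be handed to whatever tooling you use to create the policy; double check the field names against the current CloudFront API reference before relying on it.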

Lastly, for the response headers policy, we can select “CORS With Preflight” from the list. In the end, the first two policies will have different names depending on how you set them up. But mine looks like this:

Let’s connect our domain, whatever it is, to this CloudFront distribution. Unfortunately, this is more work than you might expect. We need to prove to AWS that we actually own the domain because, for all Amazon knows, we don’t. We created a hosted zone in Route 53. And we took the nameservers it gave us and registered them with GoDaddy (or whoever your domain is registered with). But Amazon doesn’t know this yet. We need to demonstrate to Amazon that we do, in fact, control the DNS for this domain.

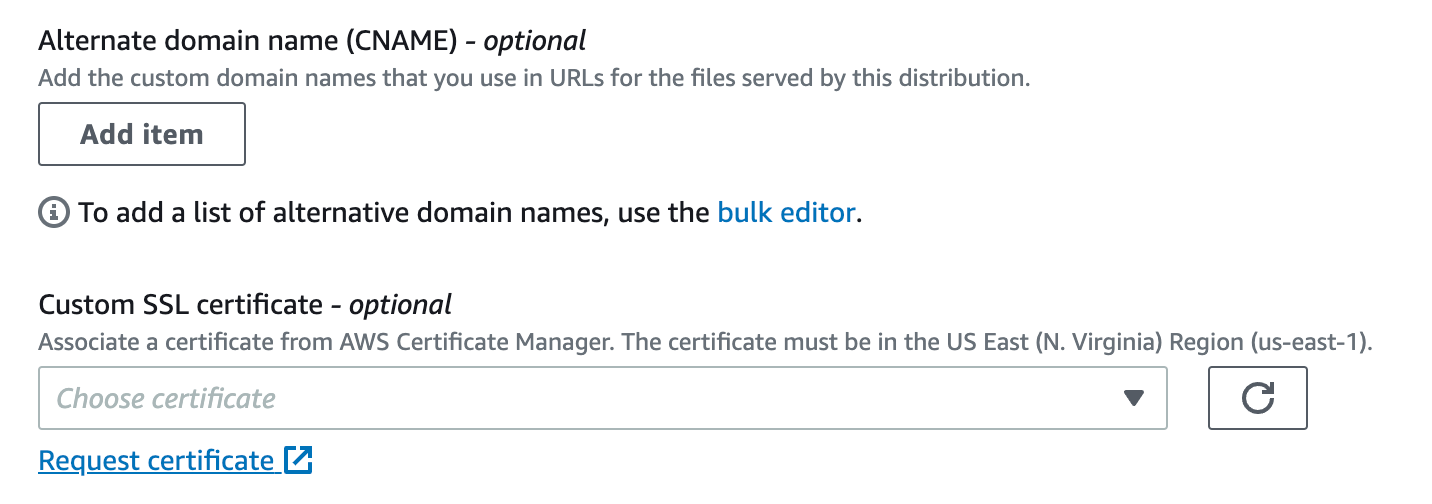

First, we’ll request an SSL certificate.

Next, let’s click the link to request a certificate:

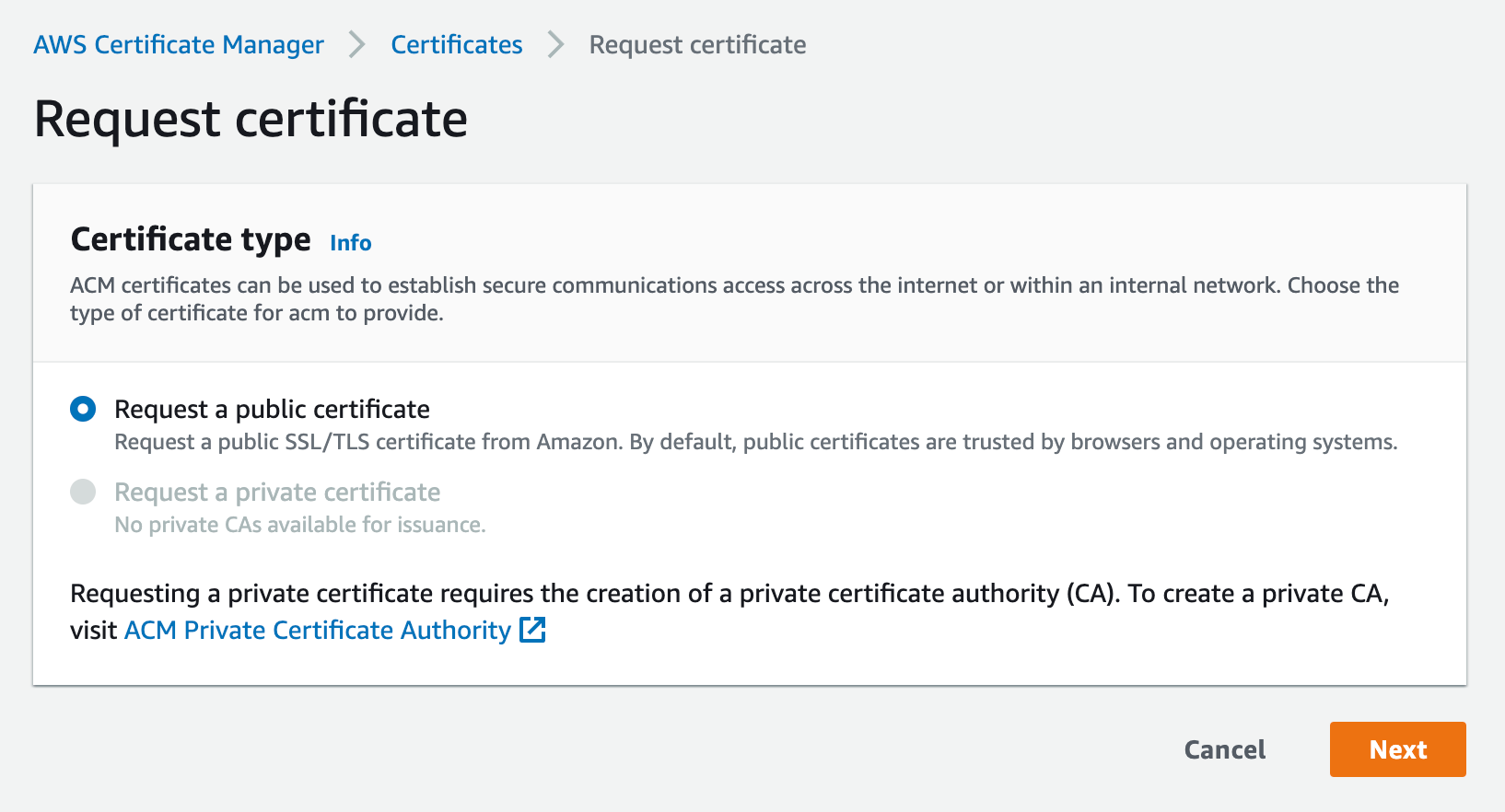

Now, we’ll select the option to request a public certificate:

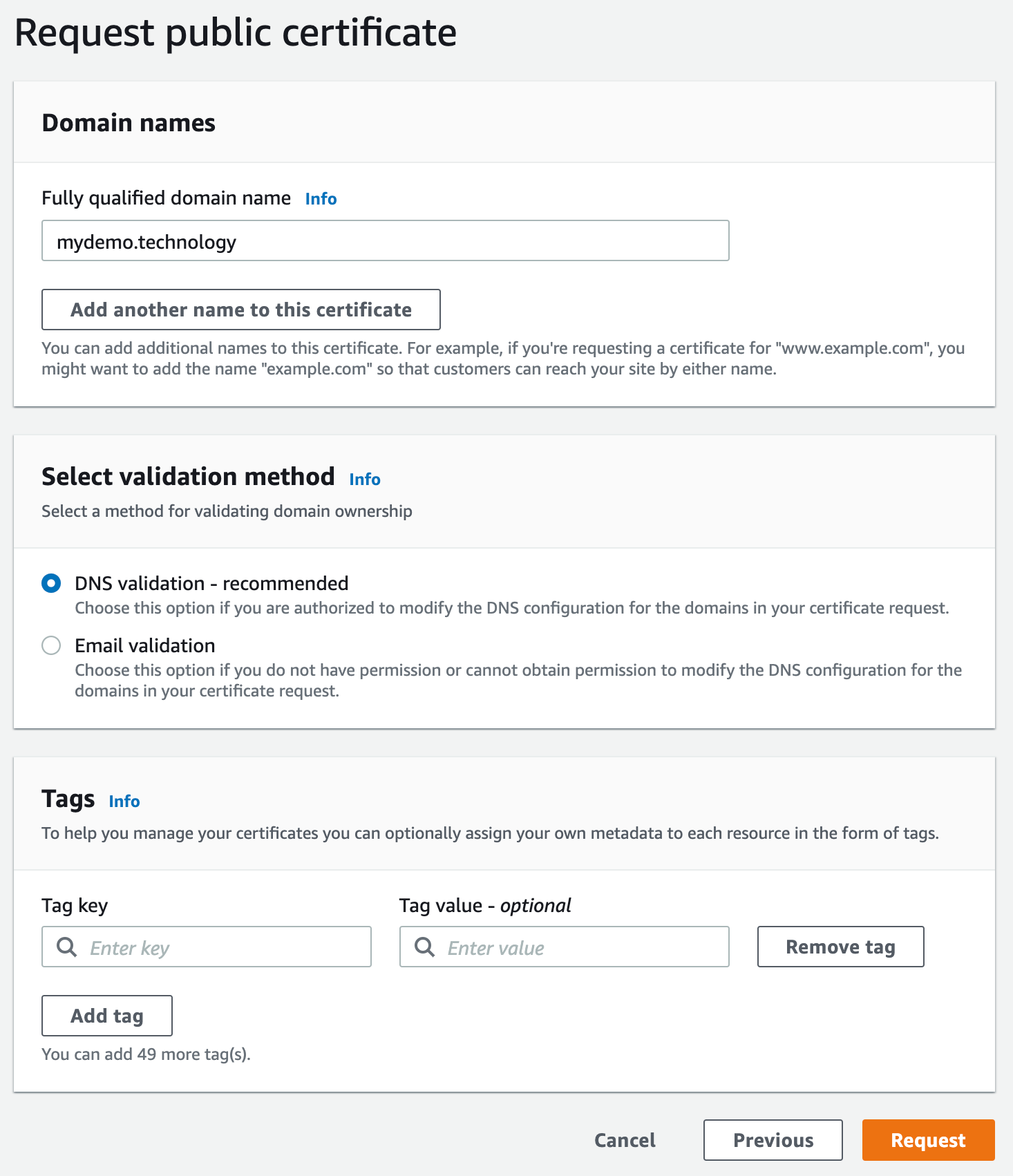

We need to provide the domain:

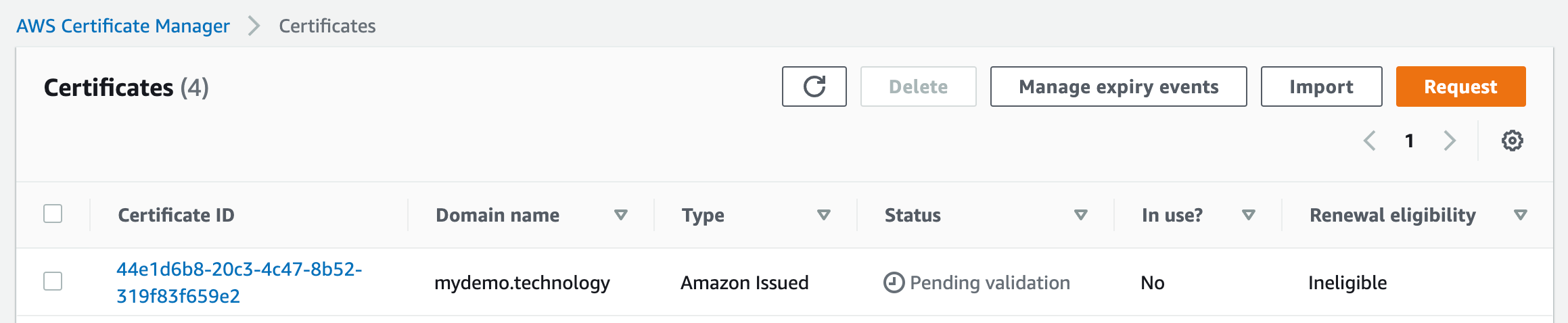

And, in my case, the certificate is pending:

So, I’m going to click it:

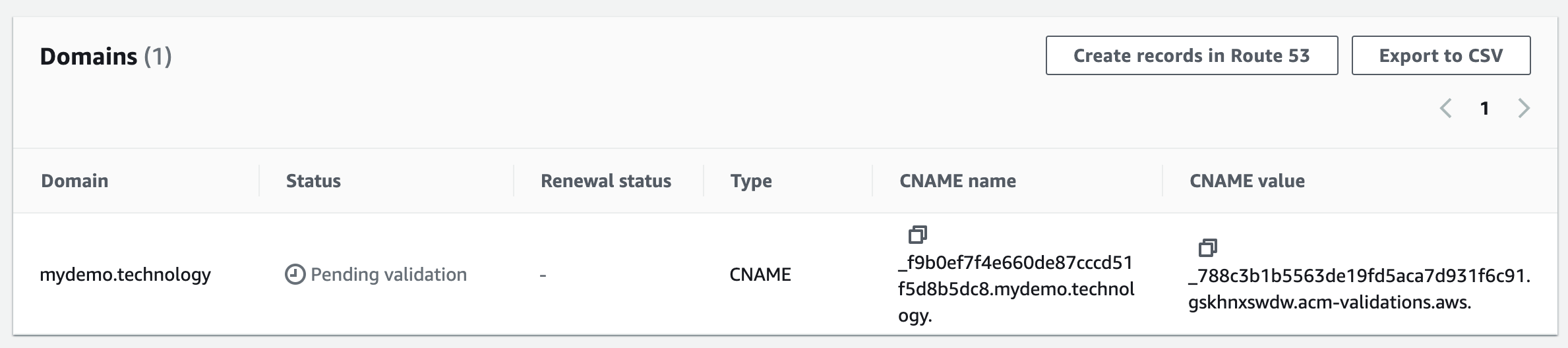

This proves that we own and control this domain. In a separate tab, go back to Route 53, and open our hosted zone:

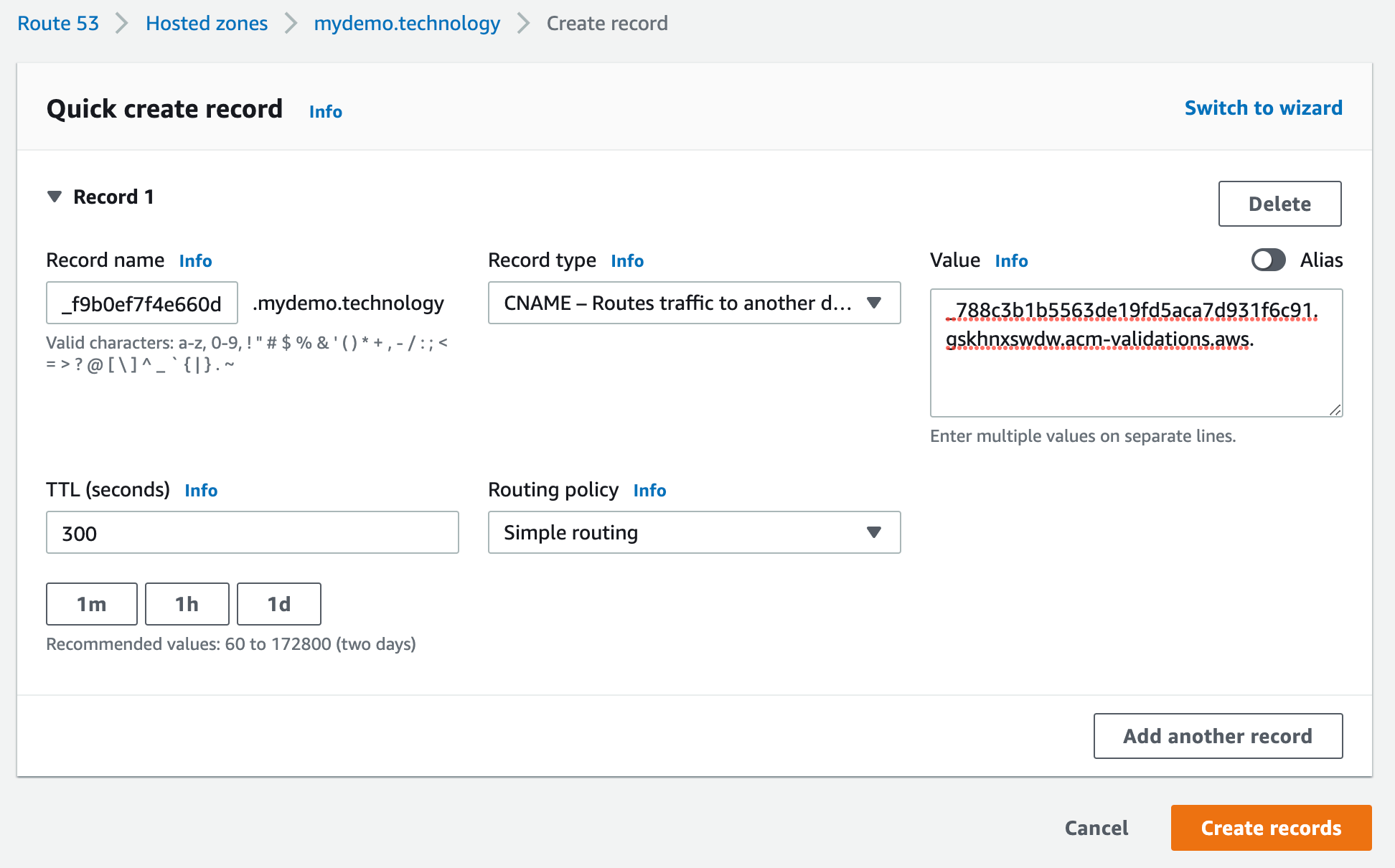

Now we need to create the CNAME record. Copy the first part for the Record name. For example, if the CNAME is _xhyqtrajdkrr.mydemo.technology, then put the _xhyqtrajdkrr part. For the Record value, copy the entire value.
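The “copy the first part” step is easy to get wrong by pasting the full name, so here’s a tiny helper that does the trimming. It’s a sketch with a hypothetical function name; it simply strips the hosted zone’s domain (and any trailing dot) from the fully qualified name.

```javascript
// Returns the part of `fqdn` that sits in front of the hosted zone's domain,
// which is what goes in the Record name field.
function relativeRecordName(fqdn, zone) {
  const strip = (s) => s.trim().toLowerCase().replace(/\.$/, "");
  const name = strip(fqdn);
  const apex = strip(zone);
  if (name === apex) return ""; // the bare domain has an empty record name
  if (!name.endsWith("." + apex)) {
    throw new Error(`${fqdn} is not inside ${zone}`);
  }
  return name.slice(0, -(apex.length + 1));
}

console.log(relativeRecordName("_xhyqtrajdkrr.mydemo.technology.", "mydemo.technology"));
// prints "_xhyqtrajdkrr"
```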

Assuming you registered the AWS name servers with your domain host, GoDaddy or whomever, AWS will soon be able to ping the DNS entry it just asked you to create, see the response it expects, and validate your certificate.

It can take time for the name servers you set at the beginning to propagate. In theory, it can take up to 72 hours, but it usually updates within an hour for me.

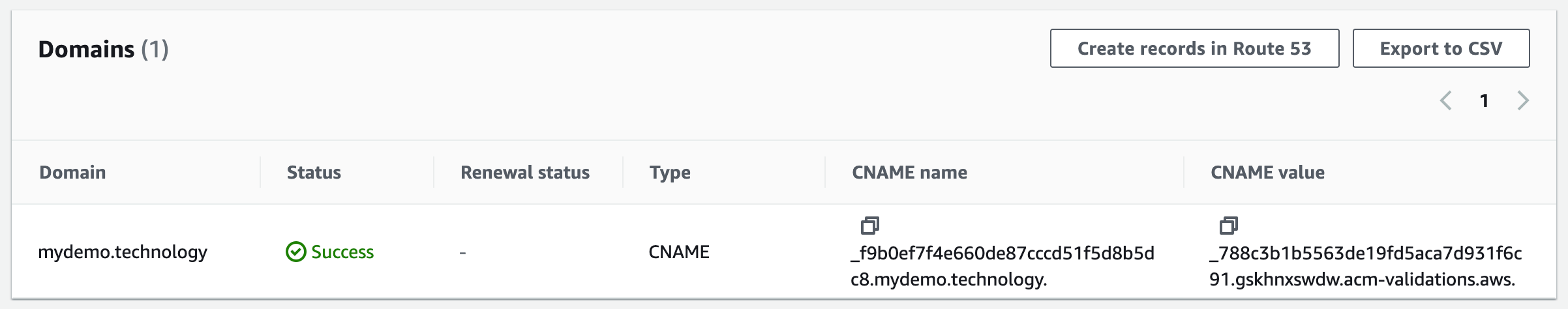

You’ll see success on the domain:

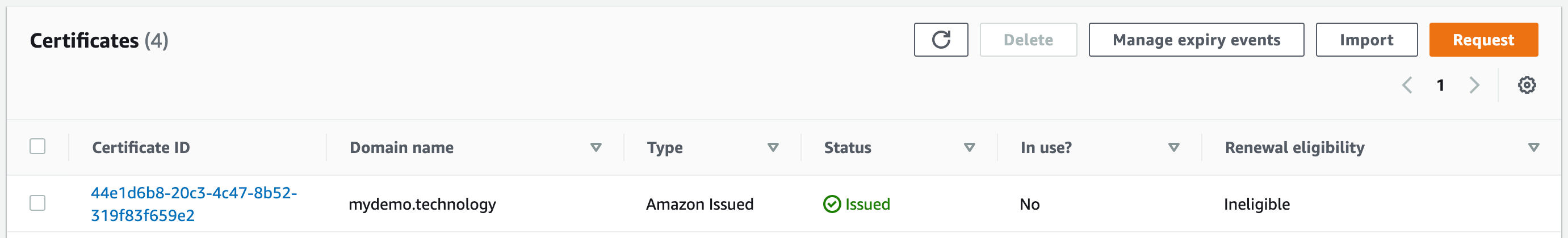

…as well as the certificate:

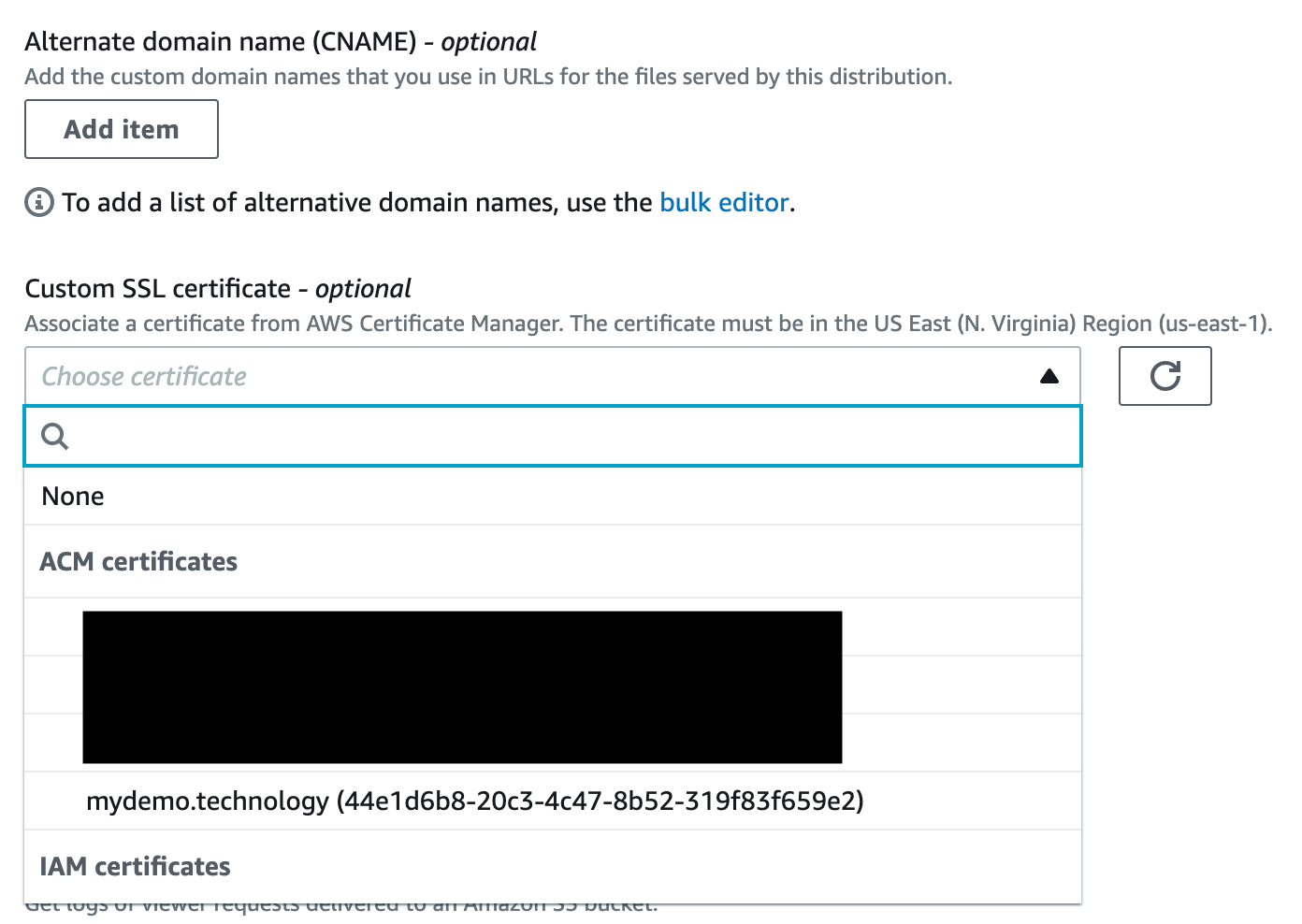

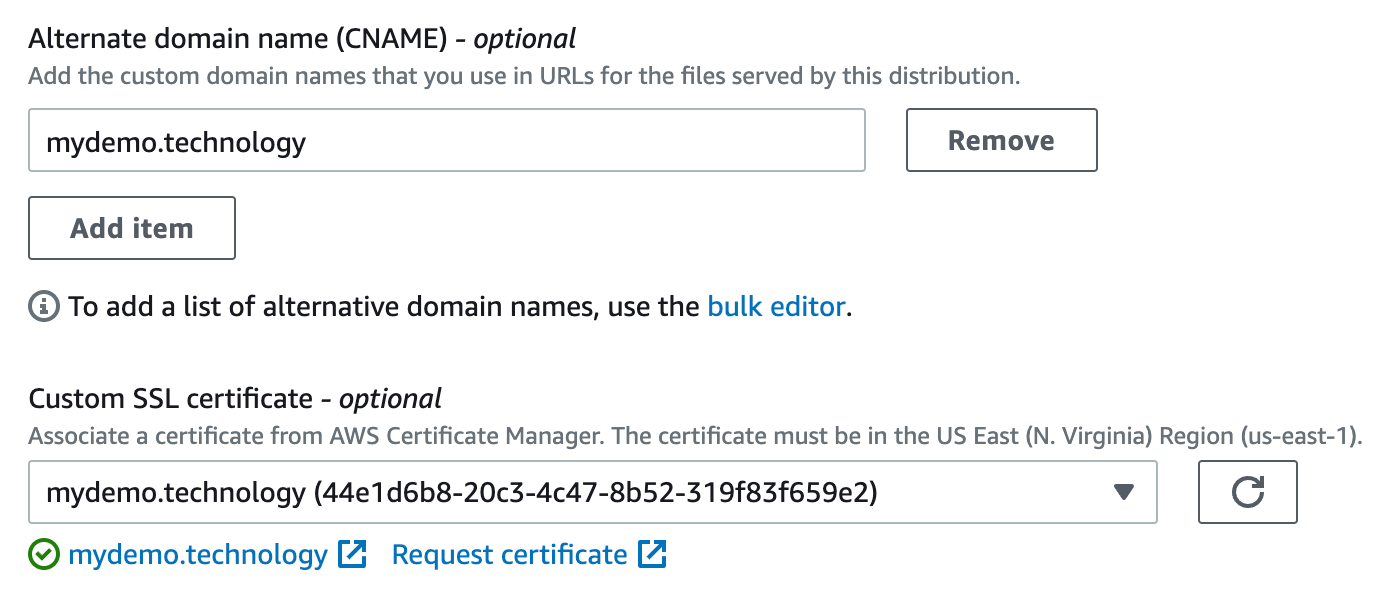

Whew! Almost done. Now let’s connect all of this to our CloudFront distribution. We can head back to the CloudFront settings screen. Now, under custom SSL certificate, we should see what we created (and any others you’ve created in the past):

Then, let’s add the app’s top-level domain:

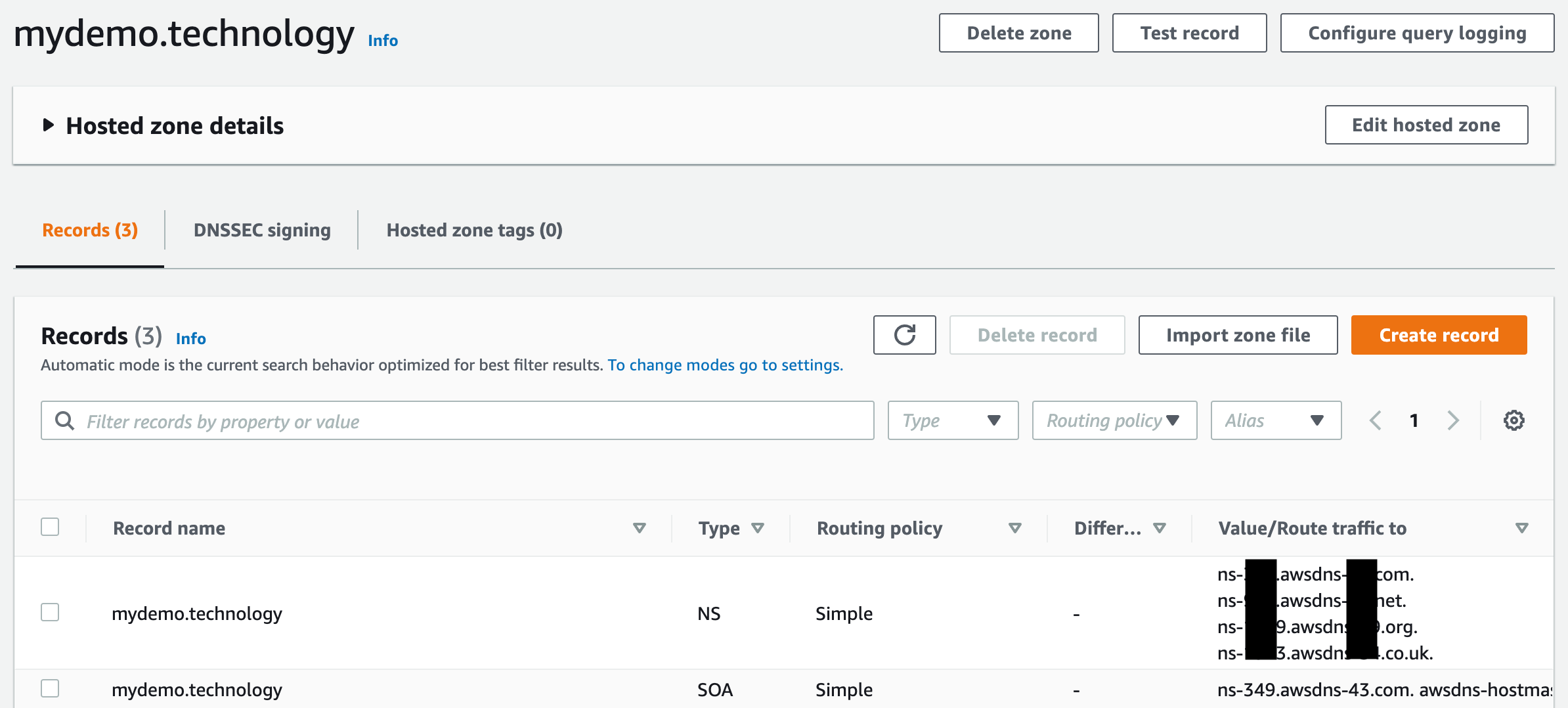

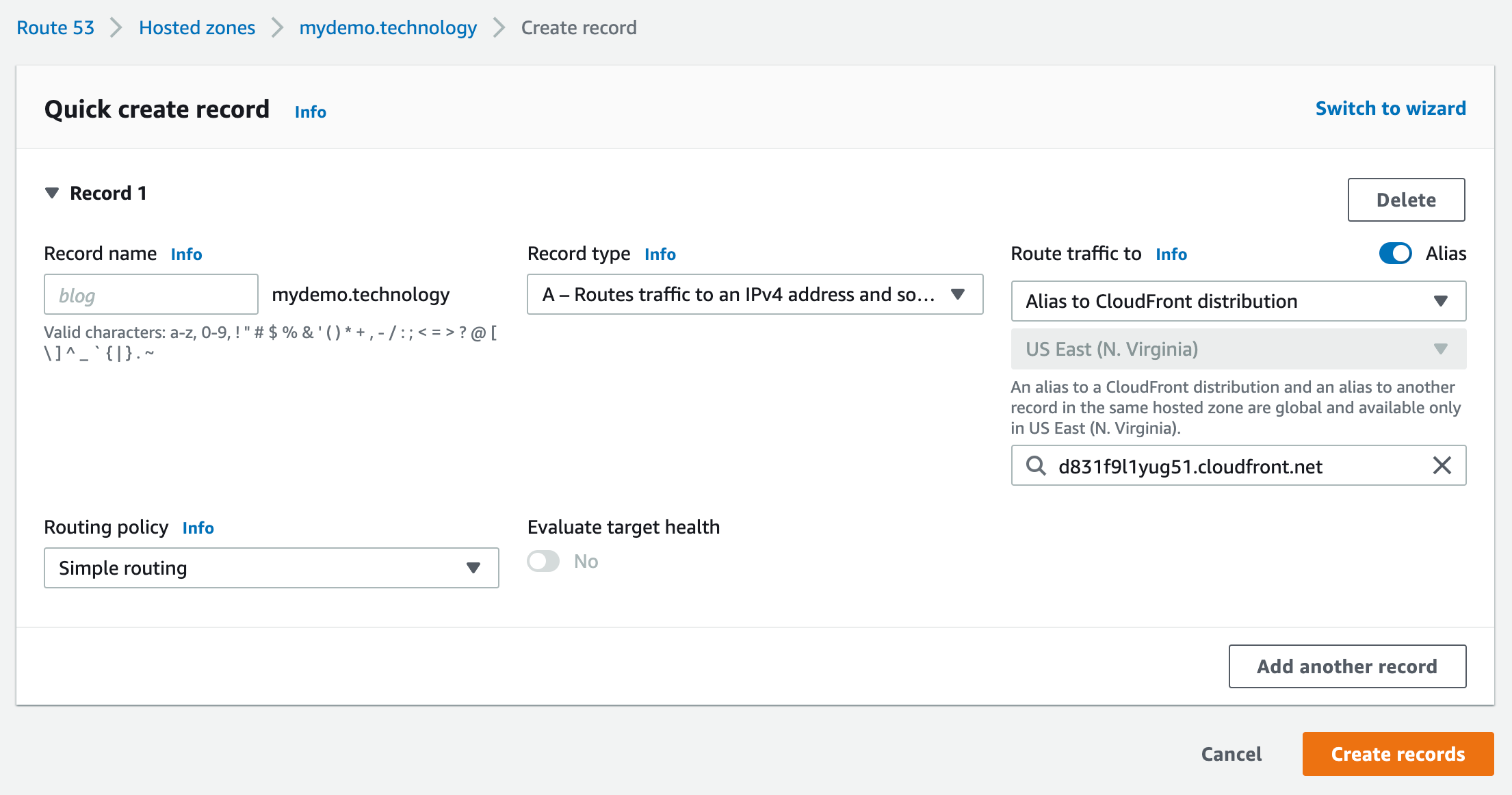

All that’s left is to tell Route 53 to route our domain to this CloudFront distribution. So, let’s go back to Route 53 and create another DNS record.

We need to enter an A record for IPv4, and an AAAA record for IPv6. For both, leave the record name empty since we’re only registering our top-level domain and nothing else.

Select the A record type. Next, specify the record as an alias, then map the alias to the CloudFront distribution. That should open up an option to choose your CloudFront distribution, and since we previously registered the domain with CloudFront, you should see that distribution, and only that distribution when making a selection.

We repeat the exact same steps for the AAAA record type we need for IPv6 support.
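For reference, here’s roughly what those two alias records look like as data, in the shape of a Route 53 change batch. Treat it as a sketch: the function name and the distribution domain in the example are placeholders, while `Z2FDTNDATAQYW2` is the fixed hosted zone ID that Route 53 uses for CloudFront alias targets.

```javascript
// Hosted zone ID that Route 53 uses for every CloudFront alias target.
const CLOUDFRONT_ZONE_ID = "Z2FDTNDATAQYW2";

// Builds a change batch with an A (IPv4) and an AAAA (IPv6) alias record,
// both pointing `domain` at the given CloudFront distribution domain.
function aliasChangeBatch(domain, distributionDomain) {
  return {
    Comment: `Route ${domain} to CloudFront`,
    Changes: ["A", "AAAA"].map((type) => ({
      Action: "UPSERT",
      ResourceRecordSet: {
        Name: domain,
        Type: type,
        AliasTarget: {
          HostedZoneId: CLOUDFRONT_ZONE_ID,
          DNSName: distributionDomain,
          EvaluateTargetHealth: false,
        },
      },
    })),
  };
}

// "d111111abcdef8.cloudfront.net" is a placeholder distribution domain.
const batch = aliasChangeBatch("your-app.net", "d111111abcdef8.cloudfront.net");
console.log(JSON.stringify(batch, null, 2));
```

The two records are identical apart from their type, which is why the console steps for AAAA are a repeat of the steps for A.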

Run your web app, and make sure it actually, you know, works. It should!

Things to test and verify

OK, while we’re technically done here, chances are there are still a few things left to do to meet the exact needs of your web app. Different apps have different needs and what I’ve demonstrated so far has walked us through the common steps to route things through CloudFront for better performance. Chances are there are things unique to your app that require more love. So, for that, let me cover a few possible additional items you might encounter during setup.

First off, make sure any POSTs you have are correctly sent to your origin. Assuming CloudFront is correctly configured to forward cookies to your origin, this should already work but there’s no harm in checking.

The bigger concern is all the other GET requests that are sent to your web app. By default, any GET request CloudFront receives is, if cached, answered with the cached response rather than being passed on to your web app. This can be disastrous. Any data requests to any REST or GraphQL endpoints sent with GET are cached by the CDN. And if you’re shipping a service worker, that will be cached too, instead of the normal behavior, where the current version is sent down in the background and updated if there are changes.

In order to tell CloudFront not to cache certain things, be sure to set the "Cache-Control" header to "no-cache". If you’re using a framework, like Express, you can set middleware for your data access with something like this:

    app.use("/graphql", (req, res, next) => {
      res.set("Cache-Control", "no-cache");
      next();
    });

    app.use(
      "/graphql",
      expressGraphql({ schema: executableSchema, graphiql: true, rootValue: root })
    );

For things like service workers, you can put specific rules for those files before your static middleware:

    // Never let the CDN cache the service worker files
    app.get(
      "/service-worker.js",
      express.static(__dirname + "/react/dist", {
        setHeaders: resp => resp.set("Cache-Control", "no-cache")
      })
    );

    app.get(
      "/sw-index-bundle.js",
      express.static(__dirname + "/react/dist", {
        setHeaders: resp => resp.set("Cache-Control", "no-cache")
      })
    );

    // Everything else is static and can be cached for 50 days (maxAge is in ms)
    app.use(express.static(__dirname + "/react/dist", { maxAge: 432000 * 1000 * 10 }));

And so on. Test everything thoroughly because there’s so much that can go wrong. And after each change you make, be sure to run a full invalidation in CloudFront and clear the cache before re-running your web app to test that things are correctly excluded from cache. You can do this from the Invalidations tab in CloudFront. Open that up and put /* in for the value, to clear everything.
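One way to spot-check this is to look at the response headers coming back through CloudFront. The sketch below assumes Node 18 or later for the global `fetch`, and relies on the `X-Cache` header CloudFront adds to responses (values such as "Hit from cloudfront" or "Miss from cloudfront"). The function names are hypothetical.

```javascript
// Classifies a response from its headers. `headers` is anything with a
// get(name) method, such as the Headers object returned by fetch().
function describeCaching(headers) {
  const cacheControl = (headers.get("cache-control") || "").toLowerCase();
  const xCache = (headers.get("x-cache") || "").toLowerCase();
  return {
    // True when the origin marked the response as something not to reuse freely.
    markedUncacheable: /\b(no-cache|no-store|private)\b/.test(cacheControl),
    // True when CloudFront answered from its edge cache.
    servedFromEdgeCache: xCache.includes("hit from cloudfront"),
  };
}

// Fetches `url` and reports how the response was cached.
async function checkUrl(url) {
  const response = await fetch(url);
  return { url, ...describeCaching(response.headers) };
}
```

Calling `checkUrl` a couple of times against your GraphQL endpoint or service worker URL should show `markedUncacheable: true` and `servedFromEdgeCache: false`. If an endpoint you meant to exclude comes back as served from the edge cache, revisit its headers and run another invalidation.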

A working CloudFront implementation

Now that we have everything running, let’s re-run our trace in WebPageTest:

And just like that, we no longer see the separate connection setup for our assets that we saw before. For my own web app, I was seeing a substantial improvement of 500ms in LCP. That’s a solid win!

Hosting an entire web app on a CDN can offer the best of all worlds. We get edge caching for static resources, but without the connection costs. Unfortunately, this improvement doesn’t come for free. Getting all of the necessary proxying correctly set up isn’t entirely intuitive, and then there’s still the need to set up cache headers to keep non-cacheable requests from winding up in the CDN’s cache.