It’s no secret that Instagram has major problems with harassment and bullying on its platform. One recent example: a report that Instagram failed to act on 90 percent of over 8,700 abusive messages received by several high-profile women, including actress Amber Heard.

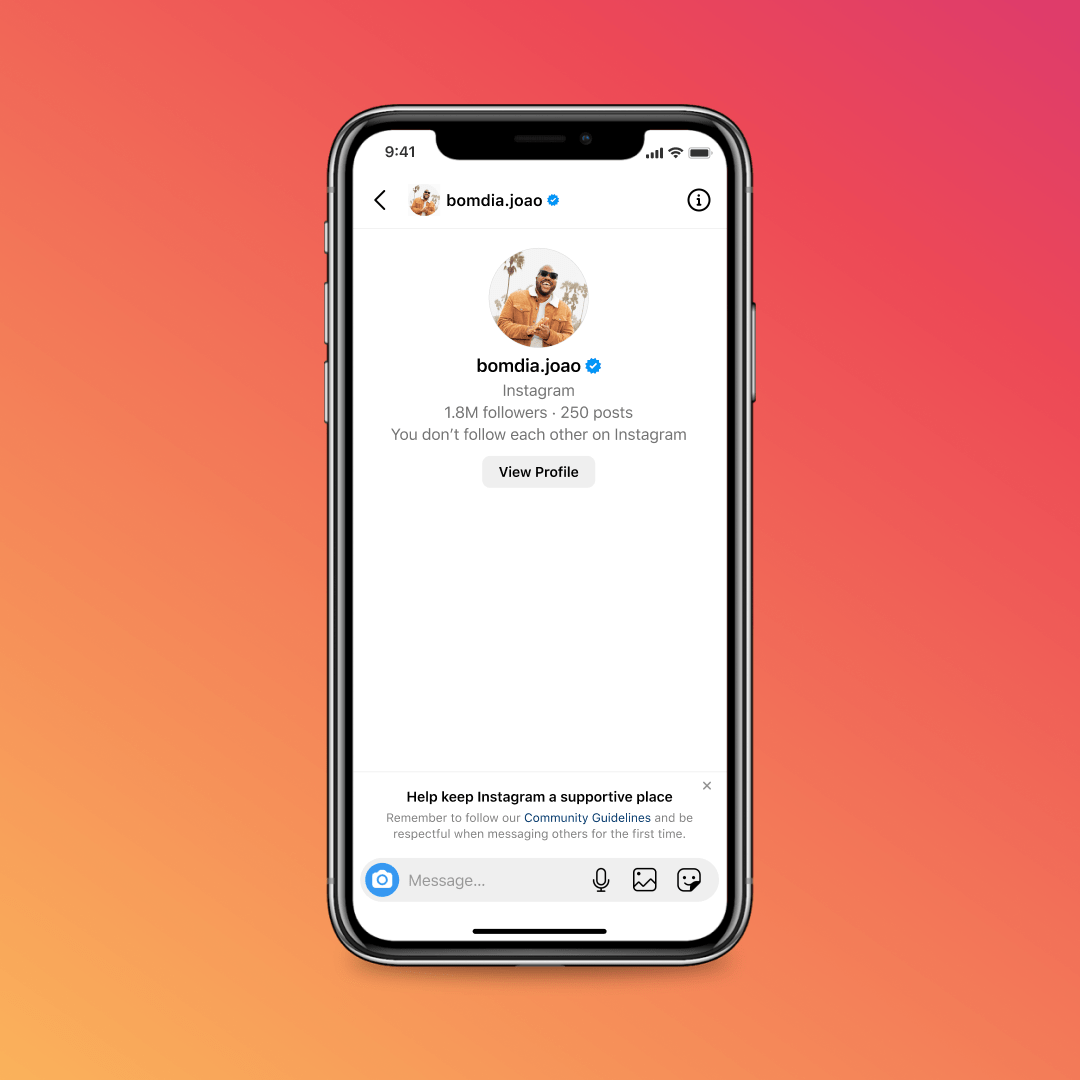

To try to make its app a more hospitable place, Instagram is rolling out features that will remind people to be respectful in two scenarios: when you message a creator for the first time, and when you reply to an offensive comment thread. In both cases, Instagram will show a message at the bottom of your screen asking you to be respectful. (Instagram defines a creator as someone with more than 10,000 followers or anyone who has set up a “creator” account.)

These gentle reminders are part of a broader strategy called “nudging,” which aims to positively impact people’s online behavior by encouraging — rather than forcing — them to change their actions. It’s an idea rooted in behavioral science theory, and one that Instagram and other social media companies have been adopting in recent years.

While nudging alone won’t solve Instagram’s issues with harassment and bullying, Instagram’s research has shown that this kind of subtle intervention can curb some users’ cruelest instincts on social media. Last year, Instagram’s parent company, Meta, said that after it started warning users before they posted a potentially offensive comment, about 50 percent of people edited or deleted their offensive comment. Instagram told Recode that similar warnings have proven effective in private messaging, too. For example, in an internal study of 70,000 users, the results of which were shared with Recode for the first time, 30 percent of users sent fewer messages to creators with large followings after seeing the kindness reminder.

Nudging has shown enough promise that other social media apps with their own bullying and harassment issues — like Twitter, YouTube, and TikTok — have also been using the tactic to encourage more positive social interactions.

“The reason why we are so dedicated about this investment is because we see through data and we see through user feedback that those interventions actually work,” said Francesco Fogu, a product designer on Instagram’s well-being team, which is focused on ensuring that people’s time spent on the app is supportive and meaningful.

Instagram first rolled out nudges attempting to influence people’s commenting behavior in 2019. For the first time, the reminders asked users to reconsider posting comments that fall into a gray area: ones that don’t violate Instagram’s policies around harmful speech overtly enough to be automatically removed, but that still come close to that line. (Instagram uses machine learning models to flag potentially offensive content.)

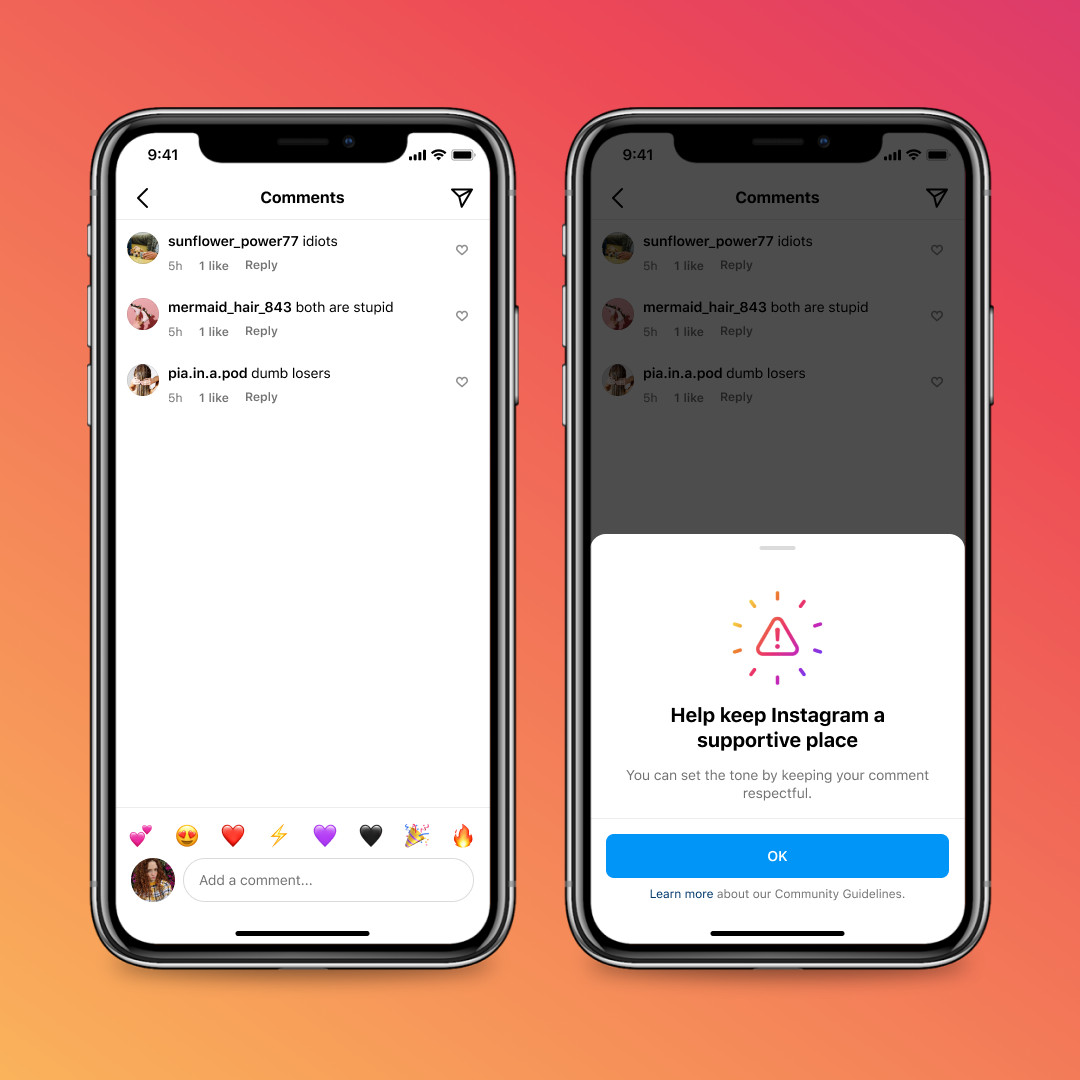

The initial offensive comment warnings were subtle in wording and design, asking users, “Are you sure you want to post this?” Over time, Fogu said, Instagram made the nudges more overt, requiring people to click a button to override the warning and proceed with their potentially offensive comments, and warning more clearly when comments could violate Instagram’s community guidelines. Once the warning became more direct, Instagram said it resulted in 50 percent of people editing or deleting their comments.

The effects of nudging can be long-lasting too, Instagram says. The company told Recode it conducted research on what it calls “repeat hurtful commenters” (people who leave multiple offensive comments within a window of time) and found that nudging had a positive long-term effect, reducing both the number of hurtful comments these people made over time and the proportion of hurtful comments relative to regular ones.

Starting Thursday, Instagram’s new nudging feature will apply this warning not just to people who post an offensive comment, but also to users who are thinking of replying to one. The idea is to make people reconsider if they want to “pile onto a thread that’s spinning out of control,” said Instagram’s global head of product policy, Liz Arcamona. This applies even if their individual reply doesn’t contain problematic language — which makes sense, considering that a lot of pile-on replies to mean-spirited comment threads are simple thumbs-up or tears-of-joy emojis, or “haha.” For now, the feature will roll out over the next few weeks to Instagram users whose language preferences are set to English, Portuguese, Spanish, French, Chinese, or Arabic.

One of the overarching theories behind Instagram’s nudging features is the “online disinhibition effect,” which holds that people show less social restraint when interacting with others on the internet than they do in real life, and that this can make it easier for them to express unfiltered negative feelings.

The goal of many of Instagram’s nudging features is to contain that online disinhibition, and remind people, in non-judgmental language, that their words have a real impact on others.

“When you’re in an offline interaction, you see people’s responses, you kind of read the room. You feel their emotions. I think you lose a lot of that oftentimes in an online context,” said Instagram’s Arcamona. “And so we’re trying to bring that offline experience into the online experience so that people take a beat and say, ‘wait a minute, there is a human on the other side of this interaction and I should think about that.’”

That’s another reason why Instagram is updating its nudges to focus on creators: People can forget there are real human emotions at stake when messaging someone they don’t personally know.

Some 95 percent of social media creators surveyed in a recent study by the Association for Computing Machinery received hate or harassment during their careers. The problem can be particularly acute for creators who are women or people of color. Public figures on social media, from Bachelorette stars and contestants to international soccer players, have made headlines for being targeted with racist and sexist abuse on Instagram, in many cases in the form of unwanted comments and DMs. Instagram said it’s limiting its kindness reminders to people messaging creator accounts for now, but could expand them to more users in the future.

Aside from creators, another group of people who are particularly vulnerable to negative interactions on social media is, of course, teens. Facebook whistleblower Frances Haugen revealed internal documents in October 2021 showing how Instagram’s own research indicated a significant percentage of teenagers felt worse about their body image and mental health after using the app. The company then faced intense scrutiny over whether it was doing enough to protect younger users from seeing unhealthy content. In December 2021, a few months after Haugen’s leaks, Instagram announced it would start nudging teens away from content they had been scrolling through continuously for too long, such as body-image-related posts. It rolled that feature out this June. Instagram said that, in a one-week internal study, it found that one in five teens switched topics after seeing the nudge.

While nudging seems to encourage healthier behavior for a good chunk of social media users, not everyone wants Instagram reminding them to be nice or to quit scrolling. Many users feel censored by major social media platforms, which might make some resistant to these features. And some studies have shown that too much nudging to quit staring at your screen can turn users off an app or cause them to disregard the message altogether.

But Instagram said that users can still post something if they disagree with a nudge.

“What I consider offensive, you might be considering a joke. So it’s really important for us to not make a call for you,” said Fogu. “At the end of the day, you’re in the driver’s seat.”

Several outside social media experts Recode spoke with saw Instagram’s new features as a step in the right direction, although they pointed out some areas for further improvement.

“This kind of thinking gets me really excited,” said Evelyn Douek, a Stanford law professor who researches social media content moderation. For too long, the only way social media apps dealt with offensive content was to take it down after it had already been posted, in a whack-a-mole approach that didn’t leave room for nuance. But over the past few years, Douek said, “platforms are starting to get way more creative about the ways to create a healthier speech environment.”

For the public to truly assess how well nudging is working, Douek said, social media apps like Instagram should publish more research or, even better, allow independent researchers to verify its effectiveness. It would also help for Instagram to share examples of interventions that it experimented with but that weren’t as effective, “so it’s not always positive or glowing reviews of their own work,” said Douek.

Another data point that could help put these new features in perspective: how many people are experiencing unwanted social interactions to begin with. Instagram declined to tell Recode what percentage of creators, for example, receive unwanted DMs overall. So while we may know how much nudging can reduce unwanted DMs to creators, we don’t have a full picture of the scale of the underlying problem.

Given the sheer size of Instagram’s user base, estimated at more than 1.4 billion, it’s inevitable that nudges, no matter how effective, will not come close to stopping people from experiencing harassment or bullying on the app. There’s also a debate about the degree to which social media’s underlying design, when optimized for engagement, incentivizes people to participate in inflammatory conversations in the first place. For now, subtle reminders may be some of the most useful tools for addressing the seemingly intractable problem of how to stop people from behaving badly online.

“I don’t think there’s a single solution, but I think nudging looks really promising,” said Arcamona. “We’re optimistic that it can be a really important piece of the puzzle.”