Docker is the silver bullet that solved the problems of software containers and virtualization once and for all. Yes, that’s a strong claim! Other products had attempted to deal with these problems, but Docker’s fresh approach and ecosystem have wiped the competition off the map. This guide will help you understand the basic concepts of Docker, so that you can get started using it for your own applications and adopt it into your workflow.

Contents:

- The History of Docker

- How Does Docker Work?

- Docker Components and Tools

- Understanding Docker Containers

- How to Run a Container?

- Why Would You Use a Container?

- Useful Tips

- Final Thoughts

The History of Docker


Docker was created in 2013 by Solomon Hykes while he was working at dotCloud, a cloud hosting company. It was originally built as an internal tool to make it easier to develop and deploy applications.

Docker containers are based on Linux containers, which have been around since the early 2000s. However, they weren’t widely used until Docker created a simple, easy-to-use platform for running them, which quickly caught on with developers and system administrators alike.

In March 2013, Docker was released as open source. It quickly became one of the most popular projects on GitHub, and the company behind it raised millions from investors soon after.

In a remarkably short time, Docker has become one of the most popular tools for developing and deploying software, and it has been widely adopted across the DevOps community!

How Does Docker Work?

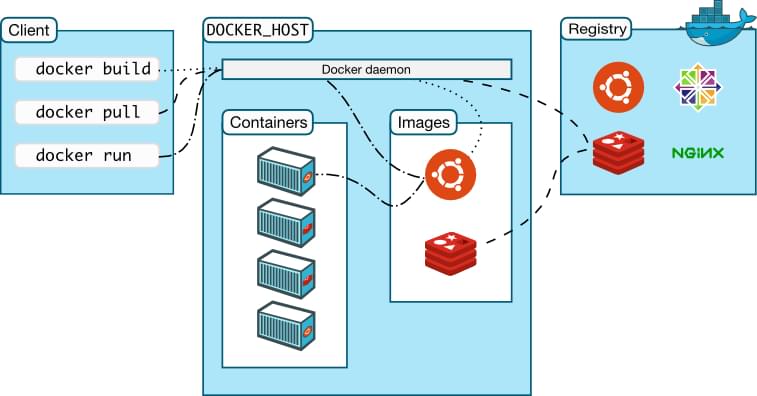

Docker architecture, by nhumrich

Docker is a technology that allows you to build, run, test, and deploy distributed applications. It uses operating-system-level virtualization to deliver software in packages called containers.

Docker does this by packaging an application and its dependencies in a virtual container that can run on any computer with a compatible container runtime. Compared with virtual machines, this containerization offers much better portability and efficiency.

These containers are isolated from each other and bundle their own tools, libraries, and configuration files. They can communicate with each other through well-defined channels. All containers on a host share that host’s operating system kernel, and therefore use fewer resources than virtual machines.

As mentioned, OS-level virtualization has been around for a while in the form of Linux Containers (LXC), Solaris Zones, and FreeBSD jails. However, Docker took this concept further by providing an easy-to-use platform that automated the deployment of applications in containers.

Here are some of the benefits of Docker containers over traditional virtual machines:

- They’re portable and can run on any computer that has a Docker runtime environment.

- They’re isolated from each other and can run different versions of the same software without affecting each other.

- They’re extremely lightweight, so they can start up faster and use fewer resources.

Let’s now take a look at some of the components and tools that make it all possible.

Docker Components and Tools

Docker consists of three major components:

- the Docker Engine, a runtime environment for containers

- the Docker command line client, used to interact with the Docker Engine

- the Docker Hub, a cloud service that provides registry and repository services for Docker images

In addition to these core components, there are also a number of other tools that work with Docker, including:

- Swarm, a clustering and scheduling tool for dockerized applications

- Docker Desktop, the successor to Docker Machine, which bundles the Docker Engine, CLI, and Docker Compose into a single application and is the quickest way to start containerizing applications

- Docker Compose, a tool for defining and running multi-container Docker applications

- Docker Registry, an on-premises registry service for storing and managing Docker images

- Kubernetes, a container orchestration tool that can be used with Docker

- Rancher, a container management platform for delivering Kubernetes-as-a-Service

There are even a number of services supporting the Docker ecosystem:

- Amazon Elastic Container Service (Amazon ECS), a managed container orchestration service from Amazon Web Services

- Azure Kubernetes Service (AKS), a managed container orchestration service from Microsoft Azure

- Google Kubernetes Engine (GKE), a fully managed Kubernetes engine that runs in Google Cloud Platform

- Portainer, for deploying, configuring, troubleshooting and securing containers in minutes on Kubernetes, Docker, Swarm and Nomad in any cloud, data center or device

Understanding Docker Containers

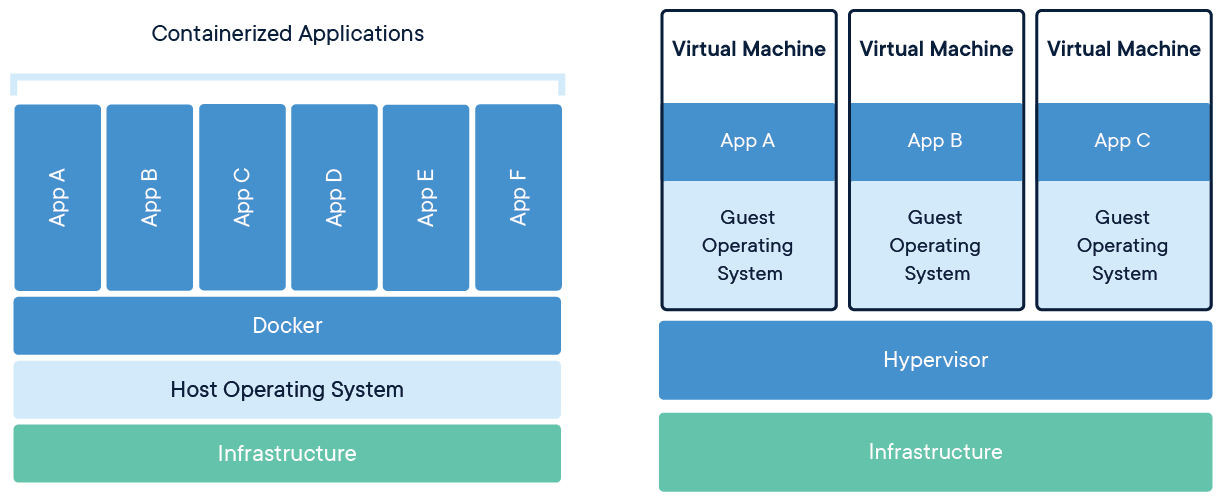

Docker containers vs virtual machines. Source: Wikipedia

Containers are often compared to virtual machines, but there are some important differences between the two. Virtual machines run a full copy of an operating system, whereas containers share the host kernel with other containers. This makes containers much more lightweight and efficient than virtual machines.

A container is a self-contained unit of software that includes all the dependencies required to run an application. This makes it easy to package and ship applications without having to worry about compatibility issues, and a container can run on any machine that has the Docker Engine installed. Each container is isolated from the others, yet containers can still communicate with each other through well-defined channels.

Building a Docker Container with Docker Images

Docker containers are built from images, which are read-only templates containing all the dependencies and configuration required to run an application.

A container, in fact, is a runtime instance of an image — what the image becomes in memory when actually executed. It runs completely isolated from the host environment by default, only accessing host files and ports if configured to do so. As such, containers have their own networking, storage, and process space; and this isolation makes it easy to move containers between hosts without having to worry about compatibility issues.

Images can be created either by using a Dockerfile (which contains all the instructions needed to build an image) or by using the docker commit command, which takes an existing container and creates an image from it.

What’s in a Docker Container?

Docker containers include everything an application needs to run, including:

- the code

- a runtime

- libraries

- environment variables

- configuration files

Working with Docker containers involves three main parts:

- the Dockerfile, used to build the image

- the image itself, a read-only template with instructions for creating a Docker container

- the container, a runnable instance created from an image (you can create, start, stop, move or delete a container using the Docker API or CLI)
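Here is a sketch of that container lifecycle using the CLI, with a hypothetical container named demo that simply sleeps so there is something to start and stop.

```shell
# Create a container from the alpine image without starting it
docker create --name demo alpine sleep 300

docker start demo                  # start it; sleep keeps it alive for up to 5 minutes
docker ps --filter name=demo       # it now shows up as a running container
docker stop demo                   # SIGTERM first, SIGKILL after a 10-second grace period
docker ps -a --filter name=demo    # still listed, now with an "Exited" status
docker rm demo                     # delete the stopped container
```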

A container shares the kernel with other containers and its host machine. This makes it much more lightweight than a virtual machine.

For a more in-depth guide, see Understanding Docker, Containers and Safer Software Delivery.

How to Run a Container?

Docker containers are portable and can be run on any host with the Docker Engine installed (see How to Install Docker on Windows 10 Home).

To run a container, you first need its image on your machine. You can pull the image from a registry yourself (if you skip this step, docker run pulls a missing image automatically), and then create and start a container from it.

For example, let’s say we want to launch an Alpine Linux container. We would first pull the Alpine Docker image from Docker Hub. To do that, we use the docker pull command, followed by the name of the repository and tag (version) that we want to download:

```shell
docker pull alpine:latest
```

This particular image is very small — only 5MB in size! After pulling it down to our system using docker pull, we can verify that it exists locally by running docker images. This should give us output similar to what’s shown below:

```
REPOSITORY   TAG       IMAGE ID       CREATED       SIZE
alpine       latest    f70734b6b2f0   3 weeks ago   5MB
```

Now that we have the image locally, we can launch a container using it. To do so, we use the docker run command, followed by the name of the image:

```shell
docker run alpine
```

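At first glance, this seems to do nothing. The container starts and runs the image’s default command, which for Alpine is a shell (/bin/sh). Because no terminal or input is attached, the shell has nothing to read and exits straight away, and a container stops as soon as its main process exits.

To do something useful, we can pass our own command as arguments to docker run, which overrides the default, like so: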

```shell
docker run alpine echo "Hello, World!"
```

Here, all we’re doing is running the echo program and passing it “Hello, World!” as an argument. When you execute this line, you should see the following output:

```
Hello, World!
```

Great! We’ve successfully launched our first Docker container. But what if we want to launch a shell inside an Alpine container? To do so, we pass sh as the command and add the -i and -t flags:

```shell
docker run -it alpine sh
```

The -i flag stands for “interactive” and is used to keep stdin open even if not attached. The -t flag allocates a pseudo TTY device. Together, these two flags allow us to attach directly to our running container and give us an interactive shell session:

```
/ #
```

From here, we can execute any commands that are available to us in the Alpine Linux distribution. For example, let’s try running ls, which should produce output similar to this:

```
/ # ls
bin    dev    etc    home   lib    media  mnt    opt    proc   root   run    sbin   srv    sys    tmp    usr    var
```

If we want to exit this shell, we can simply type exit:

```
/ # exit
```

And that’s it! We’ve now successfully launched and exited our first Docker container.

For another practical case, check out Setting Up a Modern PHP Development Environment with Docker.

Why Would You Use a Container?

There are many good reasons for using containers:

- Flexibility. Containers can be run on any platform that supports Docker, whether it’s a laptop, server, virtual machine, or cloud instance. This makes it easy to move applications around and helps DevOps teams achieve consistent environments across development, testing, and production.

- Isolation. Each container runs in its own isolated environment and has its own set of processes, file systems, and network interfaces. This helps prevent one container from interfering with, or accessing the resources of, another container.

- Density and Efficiency. Multiple containers can run on the same host system without requiring multiple copies of the operating system or extra hardware resources, and because containers are lightweight, they need fewer resources than virtual machines. All this saves precious time and money when deploying applications at scale.

- Scalability. Containers can be easily scaled up or down to meet changing demands. This makes it possible to use resources efficiently when demand is high and quickly release them when demand decreases.

- Security. The isolation capabilities of containers help protect applications from malicious attacks and accidental leaks. Running each container in its own isolated environment further reduces the risk that a compromise in one application spreads to the others.

- Portability. Containers can be easily moved between different hosts, making it easy to distribute applications across a fleet of servers. This makes it possible to use resources efficiently and helps ensure that applications are always available when needed.

- Reproducibility. Containers can be easily replicated to create identical copies of an environment. This is useful for creating testing and staging environments that match production, or for distributing applications across a fleet of servers.

- Speed. Containers can be started and stopped quickly, making them ideal for applications that need to be up and running at a moment’s notice.

- Simplicity. The container paradigm is simple and easy to understand, making it easy to get started with containers.

- Ecosystem. The Docker ecosystem includes a wide variety of tools and services that make it easy to build, ship, and run containers.
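The isolation point above is easy to check for yourself. In this sketch, the container gets its own process tree, so the shell started inside it sees itself as process 1, and it also gets its own hostname (by default, the container’s short ID), independent of the host’s.

```shell
# Inside the container, sh is the first process in its own PID namespace, so $$ is 1
docker run --rm alpine sh -c 'echo "PID inside the container: $$"'

# Compare the host's hostname with the one the container sees
hostname
docker run --rm alpine hostname
```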

Useful Tips

We’ll end this guide with some tips on best practices and handy commands for making the best use of Docker.

Docker Best Practices

There are a few best practices that you should follow when working with Docker:

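- Use a .dockerignore file to exclude files and directories from your build context.

- Keep your Dockerfile simple and easy to read.

- Avoid running Docker commands with sudo. Instead, add your user to the docker group (bearing in mind that membership grants root-level privileges on the host) or run Docker in rootless mode.

- Create a user-defined network for your application using the docker network create command.

- Use Docker secrets to manage sensitive data used by your containers (secrets are available to Swarm services and in Docker Compose).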
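Two of these practices can be sketched in a few lines. The .dockerignore patterns are typical examples rather than a recommended list, and app-net and backend are hypothetical names. On a user-defined network, Docker’s built-in DNS lets containers reach each other by name, which the default bridge network does not provide.

```shell
# Keep unneeded or sensitive files out of the build context
cat > .dockerignore <<'EOF'
.git
node_modules
*.log
.env
EOF

# Create a user-defined bridge network
docker network create app-net

# Start a long-running container on it, then reach it by name from another container
docker run -d --name backend --network app-net alpine sleep 300
docker run --rm --network app-net alpine ping -c 1 backend

# Clean up: -f stops and removes the container in one step
docker rm -f backend
docker network rm app-net
```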

Docker Commands

If you want to learn more about Docker, here’s a list of commands to get you started:

- docker, for managing containers on your system

- docker build, for creating a new image from a Dockerfile

- docker images, for listing all available images on your system

- docker run, for launching a new container from an image

- docker ps, for listing all running containers on your system

- docker stop, for gracefully stopping a running container

- docker rm, for removing a stopped container from your system

- docker rmi, for removing an image from your system

- docker login, for logging in to a Docker registry

- docker push, for pushing an image to a Docker registry

- docker pull, for pulling an image from a Docker registry

- docker exec, for executing a command in a running container

- docker export, for exporting a container as a tar archive

- docker import, for importing a tar archive as an image
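To see several of these commands working together, here is a sketch of a typical session. The container name web, the nginx:alpine image, and the web-snapshot image name are assumptions chosen for illustration.

```shell
docker pull nginx:alpine                 # download the image from Docker Hub
docker run -d --name web nginx:alpine    # start a container in the background
docker ps --filter name=web              # confirm that it is running
docker exec web nginx -v                 # run an extra command inside the running container
docker stop web                          # stop it gracefully
docker export -o web.tar web             # save the container's filesystem as a tar archive
docker import web.tar web-snapshot       # create a new image from that archive
docker rm web                            # remove the stopped container
docker rmi web-snapshot                  # remove the imported image
```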

Finally, use a text editor like Vim or Emacs to edit your Dockerfile.

For a full deep dive into docker, check out the book Docker for Web Developers, by Craig Buckler.

Final Thoughts

Docker is a powerful tool that can help you automate the deployment of your applications. It’s simple to use and doesn’t require you to install your applications’ dependencies on your host machine.

Over the past few years, Docker has become one of the most popular tools for developing and deploying software. Developers use it to pack their code and dependencies into a standardized unit, which can then be deployed on any server. Docker also lets developers run multiple isolated applications on a single host, which makes it a natural fit for microservices.

If you’re looking for a tool that can help you streamline your workflow and make your life as a developer and sysadmin easier, then Docker is an essential tool to add to your toolbox.