Sen. Amy Klobuchar (D-MN) introduced new legislation today that aims to finally hold tech companies responsible for allowing misinformation about vaccines and other health issues to spread online.

The bill, called the Health Misinformation Act and co-sponsored by Sen. Ben Ray Luján (D-NM), would create an exception to the landmark internet law Section 230, which has long shielded tech companies like Facebook, Google, and Twitter from being sued over almost any of the content people post on their platforms.

Klobuchar’s bill would change that, but only when a social media platform’s algorithm promotes health misinformation related to an “existing public health emergency.” The legislation tasks the Secretary of Health and Human Services (HHS) with defining health misinformation in these scenarios.

“Features that are built into technology platforms have contributed to the spread of misinformation and disinformation,” reads a draft of the bill seen by Recode, “with social media platforms incentivizing individuals to share content to get likes, comments, and other positive signals of engagement, which rewards engagement rather than accuracy.”

The law wouldn’t apply in cases where a platform shows people posts using a “neutral mechanism,” like a social media feed that ranks posts chronologically, rather than algorithmically. This would be a big change for the major internet platforms. Right now, almost all of the major social media platforms rely on algorithms to determine what content they show users in their feeds. And these ranking algorithms are generally designed to show users the content that they engage with the most — posts that produce an emotional response — which can prioritize inaccurate information.

The new bill comes at a time when social media companies are under fire for the Covid-19 misinformation spreading on their platforms despite their efforts to fact-check or take down some of the most egregiously harmful health information. Last week, as Covid-19 cases began surging among unvaccinated Americans, President Biden accused Facebook of “killing people” with vaccine misinformation (a statement he later partially walked back).

At the same time, major social media companies continue to face criticism from some Republicans, who have opposed the Surgeon General’s recent health advisory focused on combating the threat of health misinformation. Conservatives, and especially Sen. Josh Hawley (R-MO), have also pushed back against the White House’s work flagging problematic health misinformation to social media platforms, calling the collaboration “scary stuff” and “censorship.”

Even though tech giants are facing bipartisan criticism, Klobuchar’s plan to repeal Section 230, even partially, will likely be difficult. Defining and identifying public health misinformation is often complicated, and having a government agency decide where to draw that boundary could run into challenges. At the same time, a court would also have to determine whether a platform’s algorithms were “neutral” and whether health misinformation was promoted, questions that don’t have simple answers.

Also, it may prove difficult for individual users to successfully sue Facebook, even if Section 230 is partially repealed, because it’s not illegal to post health misinformation (unlike, say, posting child pornography or defamatory statements).

And free speech advocates have warned that repealing Section 230 — even in part — could limit free speech on the internet as we know it because it would pressure tech companies to more tightly control what users are allowed to post online.

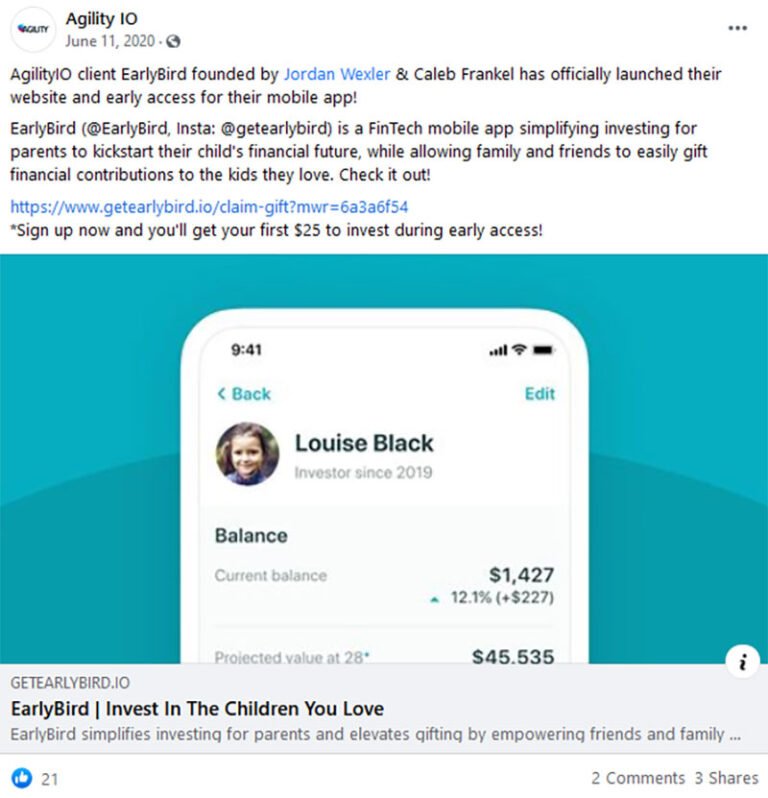

as Recode first reported. The letter cited research by a nonprofit, the Center for Countering Digital Hate, which found that 12 anti-vaccine influencers — a “Disinformation Dozen” — were responsible for 65 percent of anti-vaccine content on Facebook and Twitter.

In responses to those letters, which were seen by Recode, both platforms largely defended their approach to these influencers, noting that they’d taken some actions on their accounts. Across both platforms, many of the accounts are still up. While data revealing the extent to which misinformation on Facebook has exacerbated vaccine hesitancy is limited, longtime online advocates for vaccines told Recode earlier this year that Facebook’s approach to vaccine content has made their job harder, and that content in Facebook groups, in particular, has made some people more opposed to vaccines.

It’s also not the first time that Congress has tried to repeal parts of Section 230. Most recently, lawmakers introduced the EARN IT Act, which would take away Section 230 immunity from tech companies if they don’t adequately address child pornography on their platforms. That bill, which had bipartisan support when introduced, is still pending in Congress. Earlier this year, Reps. Tom Malinowski (D-NJ) and Anna Eshoo (D-CA) also reintroduced their proposal, the Protecting Americans from Dangerous Algorithms Act, which would remove platforms’ Section 230 protections in cases where their algorithms amplified posts that involved international terrorism or interfered with civil rights.

Former President Trump also attempted to repeal Section 230 through a legally unenforceable executive order, issued a few days after Twitter started fact-checking his misleading posts about voting by mail in the 2020 elections.

Despite potential hurdles to their proposal, Sens. Klobuchar and Luján’s bill is a reminder that lawmakers concerned about misinformation are thinking more and more about the algorithms and ranking systems that drive engagement on this kind of content.

“The social media giants know this: The algorithms encourage people to consume more and more misinformation,” Imran Ahmed, the CEO of the Center for Countering Digital Hate, told Recode in February. “Social media companies have not just encouraged growth of this market and tolerated it and nurtured it, they also have become the primary locus of misinformation.”