Preparing for the next generation of data scientists and ML engineers

Machine learning has only realized a small fraction of its potential—and this field is poised for exponential growth in the years to come.


Francois Chollet needs no introduction for most of the artificial intelligence (AI) and machine learning (ML) community.

Besides being the creator of the deep-learning library Keras and a contributor to the TensorFlow machine learning framework, Francois is also well known for his artificial intelligence research, which includes a popular benchmark for machine reasoning (the Abstraction and Reasoning Corpus, or ARC). Today Francois builds tools that power the workflows of ML engineers, both inside Google and across the broader open-source community.

In his keynote address at the Applied ML Summit, Francois talked about where he thinks AI and ML are headed, the role deep learning will play in the future, and what we can do to prepare the next generation of data scientists and ML engineers.

What is the current state of machine learning today?


Currently, we’re in a transition period where ML is slowly becoming a ubiquitous tool that’s part of every developer’s toolbox. We’re already applying it to an amazing range of important problems across domains, such as medical imaging, agriculture, autonomous driving, education, manufacturing, and many more.


But so far, machine learning has only realized a small fraction of its potential. Reaching its full potential is going to be a multi-decade process. Over time, ML will make its way into most developer workflows and become as commonplace as web development is today.

Where’s deep learning headed, and what should we expect in 2025 and beyond?

Our job as toolmakers is to track these trends and help them along, so we should always be watching how deep learning evolves as a field. I see four trends emerging in the world of ML:

1. Ecosystems of reusable parts

The reality is that most deep learning workflows are still very inefficient: they involve recreating the same kinds of models over and over and retraining them from scratch every time, instead of just importing what you need. This contrasts sharply with traditional software development, where reusing packages is the norm and most engineering consists of assembling existing functionality.

In the future, we’re going to see a larger ecosystem of reusable, pre-trained models for many different tasks. You shouldn’t have to build what you need from the ground up; you should be able to search for it and import it.

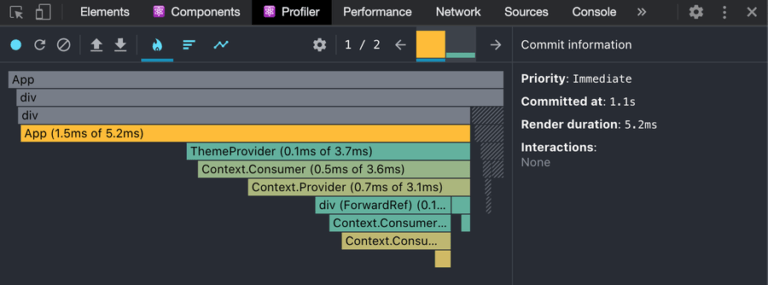

2. Increasing automation and higher-level workflows

Today, engineers and researchers are still tuning lots of things by hand through trial and error. But tuning things manually is really the job of an algorithm, not a human. So, over the next few years, we’re going to move beyond handcrafting architectures, manually tuning learning rates, and constantly fiddling with trivial configuration details.

In the next five to ten years, machine learning workflows will become increasingly high-level and automated. For example, you'll be able to use an API to help you create your models.

As a data scientist, you’ll be able to focus on the problem specification and data curation instead of the trivial configuration details.
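To make the idea that tuning is "the job of an algorithm" concrete, here is a minimal sketch of random search over two hyperparameters. It assumes a hypothetical `validation_loss` function that stands in for a full train-and-evaluate run; the search ranges are illustrative assumptions. Random search is just one simple technique, not necessarily what the talk had in mind; libraries such as KerasTuner also offer Bayesian optimization and Hyperband.

```python
import math
import random


def validation_loss(learning_rate: float, num_layers: int) -> float:
    # Hypothetical stand-in for "train a model, return its validation loss".
    # This toy surface is lowest near learning_rate = 1e-3 and num_layers = 4.
    return (math.log10(learning_rate) + 3) ** 2 + 0.1 * (num_layers - 4) ** 2


def random_search(objective, n_trials: int = 200, seed: int = 0):
    """Sample configurations at random and keep the best one seen."""
    rng = random.Random(seed)  # seeded so the search is reproducible
    best_params, best_loss = None, math.inf
    for _ in range(n_trials):
        params = {
            # Learning rates span orders of magnitude, so sample in log space.
            "learning_rate": 10 ** rng.uniform(-5, -1),
            "num_layers": rng.randint(1, 8),  # inclusive on both ends
        }
        loss = objective(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


best, loss = random_search(validation_loss)
print(best, round(loss, 4))
```

The human's job here shrinks to specifying the search space and the objective; the loop does the trial and error. Sampling the learning rate log-uniformly is a common design choice because a step from 1e-4 to 1e-3 usually matters as much as one from 1e-2 to 1e-1.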

3. Larger-scale workflows in the cloud

This is more of a universal trend in computer science. We're starting to see faster, specialized chips (like TPUs) and distributed computing at ever-larger scale. Workflows are moving off local hardware and into the cloud, toward large-scale distributed training.

In the future, it will be as easy to start training a model on hundreds of GPUs as it is today to train one in a Colab notebook or on your laptop. You'll be able to access remote, large-scale distributed resources with no more friction than your local GPU. This isn't exclusive to deep learning; the trend is present throughout the entire software industry.

4. Real-world deployment

Research labs are not where the cool stuff happens anymore. We are still using deep learning for only a tiny fraction of the problems it could solve, but deep learning is actually applicable to pretty much every single industry.

Over the next five to ten years, I expect to see deep learning technology move out of the lab and into the real world. That means we need to make deep learning portable: you should be able to save a machine learning model and run it anywhere, whether on a mobile device, in a web browser, or on a microcontroller in an embedded device.


What will these trends look like in practice?

There are lots of synergies across these trends. The push toward automation is making machine learning more accessible and making distributed cloud computing easy. The shift to the cloud also makes machine learning more accessible, since you don't need to be an expert or own hardware to train a model. And the more accessible deep learning becomes, the faster it can move into real-world applications.

Of course, the systems I am outlining won’t be built in one day. It will happen layer by layer, with each new layer building on top of the foundations established by the software that existed before. So, pragmatically, we should not be working to build the exact future system we want. Instead, we should all be focusing on establishing solid foundations that will eventually enable the systems of tomorrow.

What can we do to get the most value for the industry and research community?

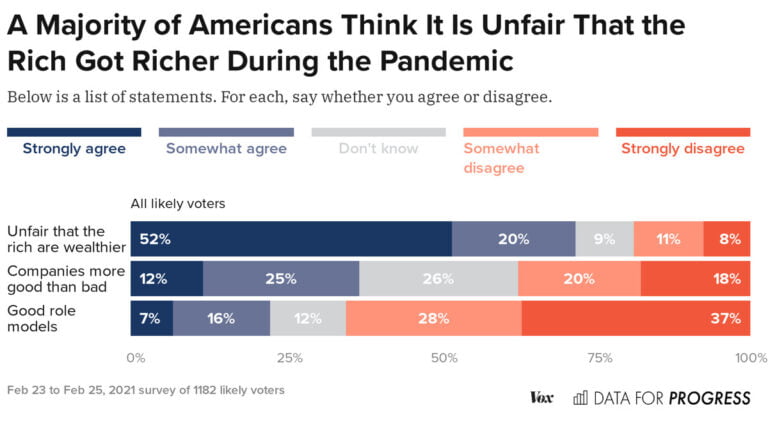

It’s critical to understand that we won’t realize the full potential of ML if we wait around for researchers and big tech companies to solve everything. Deep learning can be used to optimize fish farming in Norway or automate monitoring of the Amazon rainforest for illegal logging, but the tech industry may lack the scope and proximity needed to understand these problems.

This means as a community, we need to make AI technologies radically accessible, with a keen eye towards responsibility. We need to put them into the hands of anyone who has an idea and some coding skills so that people who are familiar with these types of problems can start solving them on their own. In the same way anyone can now make a website without having to wait for someone in tech to make it for them, we are slowly building towards a future where AI can be put into the hands of everyone.

That’s how we will succeed at ensuring these technologies reach their maximum potential.
