Sorting algorithms are a fundamental part of computer science and have a variety of applications, ranging from sorting data in databases to organizing music playlists. But what exactly are sorting algorithms, and how do they work? We’ll answer that question in this article by providing a comprehensive look at the different types algorithms and their uses, including sample code.

We might get a bit technical here and there, like using big O notation to analyze time complexity and space complexity of different algorithms. But we’ll also provide high-level overviews that should easily be understood by most.

It’s a long read for sure, so let’s get to it!

Contents:

- What Is a Sorting Algorithm?

- What Are Sorting Algorithms Used For?

- Why Are Sorting Algorithms So Important?

- The Different Types of Sorting in Data Structures

- Top 10 Sorting Algorithms You Need to Know

- All Sorting Algorithms Compared

- What’s the Most Common Sorting Algorithm?

What Is a Sorting Algorithm?

Table of Contents

- 1 What Is a Sorting Algorithm?

- 2 What Are Sorting Algorithms Used For?

- 3 Why Are Sorting Algorithms So Important?

- 4 The Different Types of Sorting in Data Structures

- 5 Top 10 Sorting Algorithms You Need to Know

- 6 All Sorting Algorithms Compared

- 7 What’s the Most Common Sorting Algorithm?

- 8 Final Thoughts

Essentially, a sorting algorithm is a computer program that organizes data into a specific order, such as alphabetical order or numerical order, usually either ascending or descending.

What Are Sorting Algorithms Used For?

Sorting algorithms are mainly used to rearrange large amounts of data in an efficient manner so that it can be searched and manipulated more easily. They are also used to improve the efficiency of other algorithms such as searching and merging, which rely on sorted data for their operations.

Why Are Sorting Algorithms So Important?

Sorting algorithms are used to organize data in a particular order, which makes it easier to search, access, and analyze. In many applications, sorting is a critical part of the data processing pipeline, and the efficiency of the sorting algorithm can have a significant impact on the overall performance of the system.

-

In databases. Sorting is used to retrieve records in a particular order, such as by date, alphabetical order, or numerical order. This allows users to quickly find the data they need, without having to manually search through large amounts of unsorted data.

-

In search engines. To rank search results in order of relevance. By sorting the results in this way, users can quickly find the information they’re looking for, without having to sift through irrelevant or unrelated results.

-

In many scientific and engineering applications. Researchers can run data analysis and simulations to gain insights into complex systems and make more accurate predictions about future behavior.

The Different Types of Sorting in Data Structures

There are various types of sorting available. The choice of sorting algorithm depends on various factors, such as the size of the data set, the type of data being sorted, and the desired time and space complexity.

Comparison-based sorting algorithms

These compare elements of the data set and determine their order based on the result of the comparison. Examples of comparison-based sorting algorithms include bubble sort, insertion sort, quicksort, merge sort, and heap sort.

Non-comparison-based sorting algorithms

These don’t compare elements directly, but rather use other properties of the data set to determine their order. Examples of non-comparison-based sorting algorithms include counting sort, radix sort, and bucket sort.

In-place sorting algorithms

These algorithms sort the data set in-place, meaning they don’t require additional memory to store intermediate results. Examples of in-place sorting algorithms include bubble sort, insertion sort, quicksort, and shell sort.

Stable sorting algorithms

These preserve the relative order of equal elements in the data set. Examples of stable sorting algorithms include insertion sort, merge sort, and Timsort.

Adaptive sorting algorithms

These take advantage of any existing order in the data set to improve their efficiency. Examples of adaptive sorting algorithms include insertion sort, bubble sort, and Timsort.

Top 10 Sorting Algorithms You Need to Know

Let’s now go through ten of the top sorting algorithms to be aware of when looking to choose one.

Bubble sort

Bubble sort is a simple sorting algorithm that repeatedly steps through a given list of items, comparing each pair of adjacent items and swapping them if they’re in the wrong order. The algorithm continues until it makes a pass through the entire list without swapping any items.

Bubble sort is also sometimes referred to as “sinking sort”.

The history of bubble sort

The origins of bubble sort trace back to the late 1950s, with Donald Knuth popularizing it in his classic 1968 book The Art of Computer Programming.

Since then, it’s been widely used in various applications, including sorting algorithms for compilers, sorting elements in databases, and even in the sorting of playing cards.

Advantages and drawbacks of bubble sort

Bubble sort is considered to be a relatively inefficient sorting algorithm, as its average and worst-case complexity are both $O(n^2)$. This makes it much less efficient than most other sorting algorithms, such as quicksort or mergesort.

Technical Note: $O(n^2)$ complexity means that the time it takes for an algorithm to finish is proportional to the square of the size of the input. This means that larger input sizes cause the algorithm to take significantly longer to complete.

For example, if you consider an algorithm that sorts an array of numbers, it may take one second to sort an array of ten numbers, but it could take four seconds to sort an array of 20 numbers. This is because the algorithm must compare each element in the array with every other element, so it must do 20 comparisons for the larger array, compared to just ten for the smaller array.

It is, however, very simple to understand and implement, and it’s often used as an introduction to sort and as a building block for more complex algorithms. But these days it’s rarely used in practice.

Use cases for bubble sort

Bubble sort is a simple algorithm that can be used for sorting small lists or arrays of elements. It’s easy to implement and understand, and therefore can be used in situations where simplicity and clarity are more important than performance.

-

Educational purposes. It’s often used in computer science courses as an example of a simple sorting algorithm. Students can learn about basic sorting techniques and gain an understanding of how algorithms work by studying bubble sort.

-

Sorting small data sets. It can be used for sorting small data sets of up to a few hundred elements. In cases where performance is not a critical concern, bubble sort can be a quick and easy way to sort small lists.

-

Pre-sorting data. It can be used as a preliminary step in more complex sorting algorithms. For example, if the data is already partially sorted, bubble sort can be used to further sort the data before running a more complex algorithm.

-

Sorting data with limited resources. It’s useful in situations where resources are limited, such as in embedded systems or microcontrollers, because it requires very little memory and processing power.

-

Building blocks for more complex algorithms. It’s often used in conjunction with merge sort or quicksort, and sorting small subarrays with insertion sort, given these other algorithms can achieve better performance on larger data sets.

Bubble sort implementation

- Use nested loops to iterate through items.

- Compare adjacent items in the list.

- Swap items if they are in the wrong order.

- Continue until the list is sorted.

Bubble sort in Python

def bubble_sort(items): for i in range(len(items)): for j in range(len(items)-1-i): if items[j] > items[j+1]: items[j], items[j+1] = items[j+1], items[j] return items items = [6,20,8,19,56,23,87,41,49,53]

print(bubble_sort(items))

Bubble sort in JavaScript

function bubbleSort(items) { let swapped; do { swapped = false; for (let i = 0; i < items.length - 1; i++) { if (items[i] > items[i + 1]) { let temp = items[i]; items[i] = items[i + 1]; items[i + 1] = temp; swapped = true; } } } while (swapped); return items;

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(bubbleSort(items));

Insertion sort

Insertion sort is another simple algorithm that builds the final sorted array one item at a time, and it’s named like this for the way smaller elements are inserted into their correct positions in the sorted array.

History of Insertion sort

In The Art of Computer Programming, Knuth comments that insertion sort “was mentioned by John Mauchly as early as 1946, in the first published discussion of computer sorting”, describing it as a “natural” algorithm that can easily be understood and implemented.

By the late 1950s, Donald L. Shell made series of improvements in his shell sort method (covered below), which compares elements separated by a distance that decreases on each pass, reducing the algorithm’s complexity to $O(n^{3/2})$ and $O(n^{4/3})$ in two different variants. This might not sound as much, but it’s quite a significant improvement for practical applications!

Technical Notes: $O(n^{3/2})$ and $O(n^{4/3})$ complexities are more efficient than $O(n^2)$ complexity, meaning they take less time to finish. This is because they don’t need to perform as many comparisons as $O(n^2)$ complexity.

For example, it may take one second to sort an array of ten numbers using a $O(n^2)$ algorithm, but it could take 0.5 seconds to sort the same array using a $O(n^{3/2})$ algorithm. This is because the algorithm can perform fewer comparisons when using the $O(n^{3/2})$ algorithm, resulting in a faster runtime.

In 2006 Bender, Martin Farach-Colton, and Mosteiro published a new variant of insertion sort called library sort or “gapped insertion sort”, which leaves a small number of unused spaces (or “gaps”) spread throughout the array, further improving the running time to $O(n log n)$.

Technical Notes: $O(n log n)$ complexity is more efficient than $O(n^2)$ complexity, and $O(n^{3/2})$ and $O(n^{4/3})$ complexities. This is because it uses a divide-and-conquer approach, which means that it can break the problem into smaller pieces and solve them more quickly.

For example, it may take one second to sort an array of ten numbers using a $O(n^2)$ algorithm, 0.5 seconds to sort the same array using a $O(n^{3/2})$ algorithm, but it could take 0.1 seconds to sort the same array using a $O(n log n)$ algorithm. This is because the algorithm can break the array into smaller pieces and solve them in parallel, resulting in a faster runtime.

Advantages and drawbacks of insertion sort

Insertion sort is often used in practice for small data sets or as a building block for more complex algorithms.

Just like with bubble sort, its worst-case and average-case time complexity is $O(n^2)$. But unlike bubble sort, insertion sort can be used to sort data sets in-place, meaning that it doesn’t require additional memory to store intermediate results.

Use cases for insertion sort

Simple and efficient, insertion sort is often used in situations where the input data is already partially sorted, or where the size of the input data is relatively small. It’s also used for sorting small data sets and for building blocks for more complex algorithms, just like bubble sort.

-

Partially sorted data. It’s well-suited for situations where the data is already partially sorted. In this case, the algorithm can quickly insert new elements into their correct positions without the need for complex sorting operations.

-

Online sorting. It’s often used for online sorting applications where the input data isn’t known in advance. In these cases, the algorithm can incrementally sort the input data as it’s received.

-

Adaptive sorting. Insertion sort is a candidate for adaptive sorting because it can take advantage of existing order in the input data. As the input data becomes more ordered, the algorithm’s performance improves.

Insertion sort implementation

- Take an unsorted list and select the first item as a “pivot”.

- Iterate through the list, inserting the pivot into its correct place in the sorted list.

- Repeat the process with the next item in the list.

- Continue until the list is sorted.

Insertion sort in Python

def insertion_sort(items): for i in range(1, len(items)): j = i while j > 0 and items[j-1] > items[j]: items[j-1], items[j] = items[j], items[j-1] j -= 1 return items items = [6,20,8,19,56,23,87,41,49,53]

print(insertion_sort(items))

Insertion sort in JavaScript

function insertionSort(items) { for (let i = 1; i < items.length; i++) { let j = i; while (j > 0 && items[j - 1] > items[j]) { let temp = items[j]; items[j] = items[j - 1]; items[j - 1] = temp; j--; } } return items;

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(insertionSort(items));

Quicksort

Quicksort is a popular divide-and-conquer sorting algorithm based on the principle of partitioning an array into two sub-arrays — one containing elements smaller than a “pivot” element and the other containing elements larger than the pivot element. The two sub-arrays are then sorted recursively.

The basic steps of quicksort include:

- Choose a pivot element from the array.

- Partition the array into two sub-arrays, one containing elements smaller than the pivot and the other containing elements larger than the pivot.

- Sort the two sub-arrays recursively using quicksort.

- Combine the two sorted sub-arrays.

The history of quicksort

Quicksort was invented by Tony Hoare in 1959. Hoare was working at the Elliott Brothers computer company in Britain when he developed the algorithm as a way to sort words in the memory of the Ferranti Mark I computer.

Quicksort was initially published as a research paper in 1961, and it quickly became one of the most widely used sorting algorithms due to its simplicity, efficiency, and ease of implementation.

Advantages of quicksort

- It has an average case time complexity of

$O(n log n)$. - It requires very little additional memory, as it sorts the array in place.

- It’s easy to implement and is widely understood.

- It can easily be parallelized.

Drawbacks of quicksort

Its worst-case time complexity is $O(n^2)$ when the pivot is chosen poorly, making it less efficient than other algorithms like merge sort or heapsort in certain situations.

Technical Note: we don’t want to choose a pivot that’s too small or too big, or the algorithm will run in quadratic time. The ideal would be to choose the median as the pivot, but it’s not always possible unless we have prior knowledge of the data distribution.

Use cases of quicksort

As a highly efficient sorting algorithm, quicksort has a wide range of applications.

-

Large data sets. Its average-case time complexity is

$O(n log n)$, which means that it can sort large amounts of data quickly. -

Random data. It performs well on randomly ordered data, because it relies on the pivot element to divide the data into two sub-arrays, which are then sorted recursively. When the data is random, the pivot element is likely to be close to the median, which leads to good performance.

-

Parallel processing. It can easily be parallelized, which makes it ideal for sorting large data sets on multi-core processors. By dividing the data into smaller sub-arrays, the algorithm can be executed on multiple cores simultaneously, leading to faster performance.

-

External sorting. It’s often used as part of an external sorting algorithm, which is used to sort data that’s too large to fit into memory. In this case, the data is sorted into chunks, which are then merged using a merge-sort algorithm.

-

Data compression. It’s used in some data compression algorithms, such as the Burrows-Wheeler transform, which is used in the bzip2 compression software. The algorithm is used to sort the data in the Burrows-Wheeler matrix, which is then transformed to produce the compressed data.

Quicksort implementation

- Use a “pivot” point, ideally the median, to divide the list into two parts.

- Quickly sort the left part and the right part.

- Continue until the list is sorted.

Quicksort in Python

def quick_sort(items): if len(items) > 1: pivot = items[0] left = [i for i in items[1:] if i < pivot] right = [i for i in items[1:] if i >= pivot] return quick_sort(left) + [pivot] + quick_sort(right) else: return items items = [6,20,8,19,56,23,87,41,49,53]

print(quick_sort(items))

Quicksort in JavaScript

function quickSort(items) { if (items.length > 1) { let pivot = items[0]; let left = []; let right = []; for (let i = 1; i < items.length; i++) { if (items[i] < pivot) { left.push(items[i]); } else { right.push(items[i]); } } return quickSort(left).concat(pivot, quickSort(right)); } else { return items; }

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(quickSort(items));

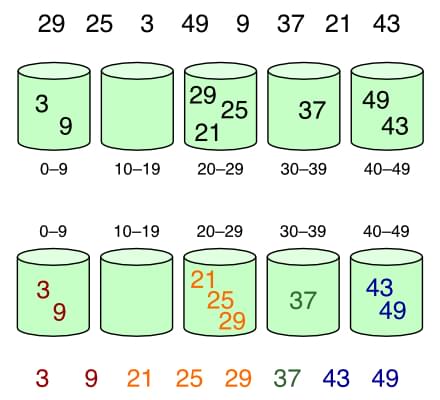

Bucket sort

Bucket sort is a useful algorithm for sorting uniformly distributed data, and it can easily be parallelized for improved performance.

The basic steps of bucket sort include:

- Create an array of empty buckets.

- Scatter the input data into the buckets according to a defined function.

- Sort each bucket using another algorithm or recursively with bucket sort.

- Gather the sorted elements from each bucket into the original array.

Advantages of bucket sort

- It’s efficient for uniformly distributed data, with an average-case time complexity of

$O(n+k)$, where$n$is the number of elements and$k$is the number of buckets. - It can easily be parallelized, allowing it to take advantage of multiple cores in modern processors.

- It’s stable, meaning that it preserves the relative order of equal elements in the original array.

- It can be used for data with non-uniform distributions by adjusting the bucket function.

Technical Note: $O(n+k)$ complexity is more efficient than $O(n^2)$ complexity, $O(n^{3/2})$ and $O(n^{4/3})$ complexities, and $O(n log n)$ complexity. This is because it only has to perform a linear number of operations, regardless of the size of the input.

*For example, consider an algorithm that sorts an array of numbers. It may take one second to sort an array of ten numbers using a $O(n^2)$ algorithm, 0.5 seconds to sort the same array using a $O(n^{3/2})$ algorithm, 0.1 seconds to sort the same array using a $O(n log n)$ algorithm, but it could take 0.05 seconds to sort the same array using a $O(n+k)$ algorithm. This is because the algorithm doesn’t need to perform as many comparisons.

Drawbacks of bucket sort

Bucket sort is less efficient than other sorting algorithms on data that isn’t uniformly distributed, with a worst-case performance of $O(n^2)$. Additionally, it requires additional memory to store the buckets, which can be a problem for very large data sets.

The history of bucket sort

The are implementations of bucket sort already in the 1950s, with sources claiming the method has been around since the 1940s.

Either way, it’s still in widespread use these days.

Use cases for bucket sort

Just like quicksort, bucket sort can easily be parallelized and used for external sorting, but bucket sort is particularly useful when dealing with uniformly distributed data.

-

Sorting floating-point numbers. In this case, the range is divided into a fixed number of buckets, each of which represents a sub-range of the input data. The numbers are then placed into their corresponding buckets and sorted using another algorithm, such as insertion sort. Finally, the sorted data is concatenated into a single array.

-

Sorting strings. Strings are grouped into buckets based on the first letter of the string. The strings in each bucket are then sorted using another algorithm, or recursively with bucket sort. This process is repeated for each subsequent letter in the strings until the entire set is sorted.

-

Histogram generation. This can be used to generate histograms of data, which are used to represent the frequency distribution of a set of values. In this case, the range of data is divided into a fixed number of buckets, and the number of values in each bucket is counted. The resulting histogram can be used to visualize the distribution of the data.

Bucket sort implementation

- Split a list of items into “buckets”.

- Each bucket is sorted using a different sorting algorithm.

- The buckets are then merged back into one sorted list.

Bucket sort in Python

def bucket_sort(items): buckets = [[] for _ in range(len(items))] for item in items: bucket = int(item/len(items)) buckets[bucket].append(item) for bucket in buckets: bucket.sort() return [item for bucket in buckets for item in bucket] items = [6,20,8,19,56,23,87,41,49,53]

print(bucket_sort(items))

Bucket sort in JavaScript

function bucketSort(items) { let buckets = new Array(items.length); for (let i = 0; i < buckets.length; i++) { buckets[i] = []; } for (let j = 0; j < items.length; j++) { let bucket = Math.floor(items[j] / items.length); buckets[bucket].push(items[j]); } for (let k = 0; k < buckets.length; k++) { buckets[k].sort(); } return [].concat(...buckets);

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(bucketSort(items));

Shell sort

Shell sort uses an insertion sort algorithm, but instead of sorting the entire list at once, the list is divided into smaller sub-lists. These sub-lists are then sorted using an insertion sort algorithm, thus reducing the number of exchanges needed to sort the list.

Also known as “Shell’s method”, it works by first defining a sequence of integers called the increment sequence. The increment sequence is used to determine the size of the sub-lists that will be sorted independently. The most commonly used increment sequence is “the Knuth sequence”, which is defined as follows (where $h$ is interval with initial value and $n$ is the length of the list):

h = 1

while h < n: h = 3*h + 1

Once the increment sequence has been defined, the Shell sort algorithm proceeds by sorting the sub-lists of elements. The sub-lists are sorted using an insertion sort algorithm, with the increment sequence as the step size. The algorithm sorts the sub-lists, starting with the largest increment and then iterating down to the smallest increment.

The algorithm stops when the increment size is 1, at which point it’s equivalent to a regular insertion sort algorithm.

The history of shell sort

Shell sort was invented by Donald Shell in 1959 as a variation of insertion sort, which aims to improve its performance by breaking the original list into smaller sub-lists and sorting these sub-lists independently.

Advantages of shell sort

- It’s a generalization of insertion sort and therefore easy to understand and implement.

- It has a time complexity that’s better than

$O(n^2)$for many sequences of input data. - It’s an in-place sorting algorithm, meaning that it doesn’t require additional memory.

Drawbacks of shell sort

It can be difficult to predict the time complexity of shell sorting, as it depends on the choice of increment sequence.

Use sases for shell sort

Shell sort is a general-purpose algorithm for sorting data in a variety of applications, particularly when sorting large data sets like with quicksort and bucket sort.

-

Sorting mostly sorted data. Shell sort reduces the number of comparisons and swaps required to sort data. This makes it faster than other sorting algorithms such as quicksort or merge sort in this particular scenario.

-

Sorting arrays with a small number of inversions. Inversion is a measure of how unsorted an array is, and is defined as the number of pairs of elements that are in the wrong order. Shell sort is more efficient than some other algorithms such as bubble sort or insertion sort when sorting arrays with a small number of inversions.

-

In-place sorting. Shell sort doesn’t require additional memory to sort the input, making it a contender for in-place sorting. This makes it useful in situations where memory is limited or when additional memory usage is undesirable.

-

Sorting in a distributed environment. By dividing the input data into smaller sub-lists and sorting them independently, each sub-list can be sorted on a separate processor or node, reducing the time required to sort the data.

Shell sort implementation

- Divide a list of items into “buckets” based on some criteria

- Sort each bucket individually

- Combine the sorted buckets

Shell sort implementation in Python

def shell_sort(items): sublistcount = len(items)//2 while sublistcount > 0: for start in range(sublistcount): gap_insertion_sort(items, start, sublistcount) sublistcount = sublistcount // 2 return items def gap_insertion_sort(items, start, gap): for i in range(start+gap, len(items), gap): currentvalue = items[i] position = i while position >= gap and items[position-gap] > currentvalue: items[position] = items[position-gap] position = position-gap items[position] = currentvalue items = [6,20,8,19,56,23,87,41,49,53]

print(shell_sort(items))

Shell sort implementation in JavaScript

function shellSort(items) { let sublistcount = Math.floor(items.length / 2); while (sublistcount > 0) { for (let start = 0; start < sublistcount; start++) { gapInsertionSort(items, start, sublistcount); } sublistcount = Math.floor(sublistcount / 2); } return items;

} function gapInsertionSort(items, start, gap) { for (let i = start + gap; i < items.length; i += gap) { let currentValue = items[i]; let position = i; while (position >= gap && items[position - gap] > currentValue) { items[position] = items[position - gap]; position = position - gap; } items[position] = currentValue; }

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(shellSort(items));

Merge sort

The basic idea of merge sort is to divide the input list in half, sort each half recursively using merge sort, and then merge the two sorted halves back together. The merge step is performed by repeatedly comparing the first element of each half and adding the smaller of the two to the sorted list. This process is repeated until all elements have been merged back together.

Advantages of merge sort

Merge sort has a time complexity of $O(n log n)$ in the worst-case scenario, which makes it more efficient than other popular sorting algorithms such as bubble sort, insertion sort, or selection sort.

Merge sort is also a algorithm, meaning that it preserves the relative order of equal elements.

Drawbacks of merge sort

Merge sort has some disadvantages when it comes to memory usage. The algorithm requires additional memory to store the two halves of the list during the divide step, as well as additional memory to store the final sorted list during the merge step. This can be a concern when sorting very large lists.

The history of merge sort

Merge sort was invented by John von Neumann in 1945, as a comparison-based sorting algorithm that works by dividing an input list into smaller sub-lists, sorting those sub-lists recursively, and then merging them back together to produce the final sorted list.

Use cases for merge sort

Merge sort is a general-purpose sorting algorithm that can be parallelized to sort large data sets and in external sorting (à la quicksort and bucket sort), and it’s also commonly use as a building block for more complex algorithms (like bubble sort and insertion sort).

-

Stable sorting. stable sorting for merge sort means that it preserves the relative order of equal elements. This makes it useful in situations where maintaining the order of equal elements is important, such as in financial applications or when sorting data for visualization purposes.

-

Implementing binary search. It’s used to efficiently search for a specific element in a sorted list, as it relies on a sorted input. Merge sort can be used to efficiently sort the input for binary search and other similar algorithms.

Merge sort implementation

- Use recursion to split a list into smaller, sorted sub-lists

- Merge the sub-lists back together, comparing and sorting items as they are merged

Merge sort implementation in Python

def merge_sort(items): if len(items) <= 1: return items mid = len(items) // 2 left = items[:mid] right = items[mid:] left = merge_sort(left) right = merge_sort(right) return merge(left, right) def merge(left, right): merged = [] left_index = 0 right_index = 0 while left_index < len(left) and right_index < len(right): if left[left_index] > right[right_index]: merged.append(right[right_index]) right_index += 1 else: merged.append(left[left_index]) left_index += 1 merged += left[left_index:] merged += right[right_index:] return merged items = [6,20,8,19,56,23,87,41,49,53]

print(merge_sort(items))

Merge sort implementation in JavaScript

function mergeSort(items) { if (items.length <= 1) { return items; } let mid = Math.floor(items.length / 2); let left = items.slice(0, mid); let right = items.slice(mid); return merge(mergeSort(left), mergeSort(right));

} function merge(left, right) { let merged = []; let leftIndex = 0; let rightIndex = 0; while (leftIndex < left.length && rightIndex < right.length) { if (left[leftIndex] > right[rightIndex]) { merged.push(right[rightIndex]); rightIndex++; } else { merged.push(left[leftIndex]); leftIndex++; } } return merged.concat(left.slice(leftIndex)).concat(right.slice(rightIndex));

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(mergeSort(items));

Selection sort

Selection sort repeatedly selects the smallest element from an unsorted portion of a list and swaps it with the first element of the unsorted portion. This process continues until the entire list is sorted.

The history of selection sort

Selection sort is a simple and intuitive sorting algorithm that’s been around since the early days of computer science. It’s likely that similar algorithms were developed independently by researchers in the 1950s.

It was one of the first sorting algorithms to be developed, and it remains a popular algorithm for educational purposes and for simple sorting tasks.

Advantages of selection sort

Selection sort is used in some applications where simplicity and ease of implementation are more important than efficiency. It’s also useful as a teaching tool for introducing students to sorting algorithms and their properties, as it’s easy to understand and implement.

Drawbacks of selection sort

Despite its simplicity, selection sort is not very efficient compared to other sorting algorithms such as merge sort or quicksort. It has a worst-case time complexity of $O(n^2)$, and it can take a long time to sort large lists.

Selection sort also isn’t a stable sorting algorithm, meaning that it may not preserve the order of equal elements.

Use cases for selection sort

Selection sort is similar to bubble sort and insertion sort in that in can be used to sort small data sets, and its simplicity also makes it a useful tool for teaching and learning about sorting algorithms. Other uses include:

-

Sorting data with limited memory. It requires only a constant amount of additional memory to perform the sort, making it useful in situations where memory usage is limited.

-

Sorting data with unique values. It doesn’t depend on the input being mostly sorted, making it a good choice for data sets with unique values where other sorting algorithms may have to perform additional checks or optimizations.

Selection sort implementation

- Iterate through the list, selecting the lowest item

- Swap the lowest item with the item at the current position

- Repeat the process for the rest of the list

Selection sort implementation in Python

def selection_sort(items): for i in range(len(items)): min_idx = i for j in range(i+1, len(items)): if items[min_idx] > items[j]: min_idx = j items[i], items[min_idx] = items[min_idx], items[i] return items items = [6,20,8,19,56,23,87,41,49,53]

print(selection_sort(items))

Selection sort implementation in JavaScript

function selectionSort(items) { let minIdx; for (let i = 0; i < items.length; i++) { minIdx = i; for (let j = i + 1; j < items.length; j++) { if (items[j] < items[minIdx]) { minIdx = j; } } let temp = items[i]; items[i] = items[minIdx]; items[minIdx] = temp; } return items;

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(selectionSort(items));

Radix sort

The basic idea behind radix sort is to sort data by grouping it by each digit in the numbers or characters being sorted, from right to left or left to right. This process is repeated for each digit, resulting in a sorted list.

Its worst-case performance is ${O(wcdot n)}$, where $n$ is the number of keys, and $w$ is the key length.

The history of radix sort

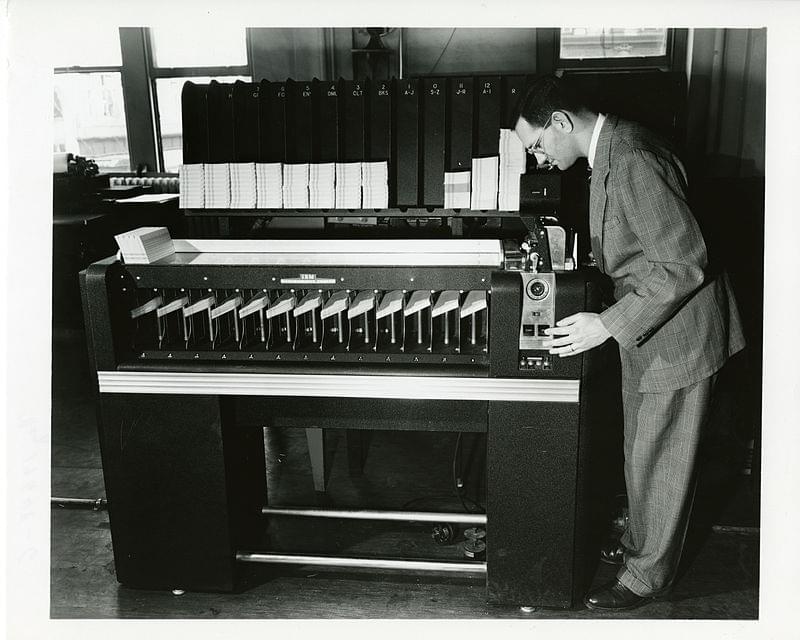

Radix sort was first introduced by Herman Hollerith in the late 19th century as a way to efficiently sort data on punched cards, where each column represented a digit in the data.

It was later adapted and popularized by several researchers in the mid-20th century to sort binary data by grouping the data by each bit in the binary representation. But it’s also used to sort string data, where each character is treated as a digit in the sort.

In recent years, radix sort has seen renewed interest as a sorting algorithm for parallel and distributed computing environments, as it’s easily parallelizable and can be used to sort large data sets in a distributed fashion.

Advantages of radix sort

Radix sort is a linear-time sorting algorithm, meaning that its time complexity is proportional to the size of the input data. This makes it an efficient algorithm for sorting large data sets, although it may not be as efficient as other sorting algorithms for smaller data sets.

Its linear-time complexity and stability make it a useful tool for sorting large data sets, and its parallelizability (yeah, that’s an actual word) makes it useful for sorting data in distributed computing environments.

Radix sort is also a stable sorting algorithm, meaning that it preserves the relative order of equal elements.

Use cases for radix sort

Radix sort can be used in various applications where efficient sorting of large data sets is required. It’s particularly useful for sorting string data and fixed-length keys, and can also be used in parallel and distributed computing environments.

-

Parallel processing. Radix sort is often preferred for sorting large data sets (over merge sort, quicksort and bucket sort). And like bucket sort, radix can sort string data efficiently, which makes it suitable for natural language processing applications.

-

Sorting data with fixed-length keys. Radix sort is particularly efficient when sorting data with fixed-length keys, as it can perform the sort by examining each key one digit at a time.

Radix sort implementation

- Compare the digits of each item in the list.

- Group the items according to the digits.

- Sort the groups by size.

- Recursively sort each group until each item is in its correct position.

Radix sort implementation in Python

def radix_sort(items): max_length = False tmp, placement = -1, 1 while not max_length: max_length = True buckets = [list() for _ in range(10)] for i in items: tmp = i // placement buckets[tmp % 10].append(i) if max_length and tmp > 0: max_length = False a = 0 for b in range(10): buck = buckets[b] for i in buck: items[a] = i a += 1 placement *= 10 return items items = [6,20,8,19,56,23,87,41,49,53]

print(radix_sort(items))

Radix sort implementation in JavaScript

function radixSort(items) { let maxLength = false; let tmp = -1; let placement = 1; while (!maxLength) { maxLength = true; let buckets = Array.from({ length: 10 }, () => []); for (let i = 0; i < items.length; i++) { tmp = Math.floor(items[i] / placement); buckets[tmp % 10].push(items[i]); if (maxLength && tmp > 0) { maxLength = false; } } let a = 0; for (let b = 0; b < 10; b++) { let buck = buckets[b]; for (let j = 0; j < buck.length; j++) { items[a] = buck[j]; a++; } } placement *= 10; } return items;

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(radixSort(items));

Comb sort

Comb sort compares pairs of elements that are a certain distance apart, and swap them if they’re out of order. The distance between the pairs is initially set to the size of the list being sorted, and is then reduced by a factor (called the “shrink factor”) with each pass, until it reaches a minimum value of $1$. This process is repeated until the list is fully sorted.

The comb sort algorithm is similar to the bubble sort algorithm, but with a larger gap between the compared elements. This larger gap allows for larger values to move more quickly to their correct position in the list.

k = 1.24733. Image source: Wikimedia Commons, CC BY-SA 3.0.The history of comb sort

The comb sort algorithm is a relatively recent sorting algorithm that was first introduced in 1980 by Włodzimierz Dobosiewicz and Artur Borowy. The algorithm was inspired by the idea of using a comb to straighten out tangled hair, and it uses a similar process to straighten out a list of unsorted values.

Advantages of comb sort

Comb sort has a worst-case time complexity of $O(n^2)$, but in practice it’s often faster than other $O(n^2)$ sorting algorithms such as bubble sort, due to its use of the shrink factor. The shrink factor allows the algorithm to quickly move large values towards their correct position, reducing the number of passes required to fully sort the list.

Use cases for comb sort

Comb sort is a relatively simple and efficient sorting algorithm that has several use cases in various applications.

-

Sorting data with a large range of values. The use of a larger gap between compared elements allows larger values to move more quickly to their correct position in the list.

-

Sorting data in real-time applications. As a stable sorting algorithm, comb sort preserves the relative order of equal elements. This makes it useful for sorting data in real-time applications where the order of equal elements needs to be preserved.

-

Sorting data in memory-constrained environments. Comb sort doesn’t require additional memory to sort the data. This makes it useful for sorting data in memory-constrained environments where additional memory isn’t available.

Comb sort implementation

- Start with a large gap between items.

- Compare items at the ends of the gap and swap them if they are in the wrong order.

- Reduce the gap and repeat the process until the gap is

1. - Finish with a bubble sort on the remaining items.

Comb sort implementation in Python

def comb_sort(items): gap = len(items) shrink = 1.3 sorted = False while not sorted: gap //= shrink if gap <= 1: sorted = True else: for i in range(len(items)-gap): if items[i] > items[i+gap]: items[i],items[i+gap] = items[i+gap],items[i] return bubble_sort(items) def bubble_sort(items): for i in range(len(items)): for j in range(len(items)-1-i): if items[j] > items[j+1]: items[j], items[j+1] = items[j+1], items[j] return items items = [6,20,8,19,56,23,87,41,49,53]

print(comb_sort(items))

Comb sort implementation in JavaScript

function combSort(items) { let gap = items.length; let shrink = 1.3; let sorted = false; while (!sorted) { gap = Math.floor(gap / shrink); if (gap <= 1) { sorted = true; } else { for (let i = 0; i < items.length - gap; i++) { if (items[i] > items[i + gap]) { let temp = items[i]; items[i] = items[i + gap]; items[i + gap] = temp; } } } } return bubbleSort(items);

} function bubbleSort(items) { let swapped; do { swapped = false; for (let i = 0; i < items.length - 1; i++) { if (items[i] > items[i + 1]) { let temp = items[i]; items[i] = items[i + 1]; items[i + 1] = temp; swapped = true; } } } while (swapped); return items;

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(combSort(items));

Timsort

The Timsort algorithm works by dividing the input data into smaller sub-arrays, and then using insertion sort to sort these sub-arrays. These sorted sub-arrays are then combined using merge sort to produce a fully sorted array.

Timsort has a worst-case time complexity of $O(n log n)$, which makes it efficient for sorting large data sets. It’s also a stable sorting algorithm, meaning that it preserves the relative order of equal elements.

Advantages of Timsort

One of the key features of Timsort is its ability to handle different types of data efficiently. It does this by detecting “runs”, which are sequences of elements that are already sorted. Timsort then combines these runs in a way that minimizes the number of comparisons and swaps required to produce a fully sorted array.

Another important feature of Timsort is its ability to handle data that’s partially sorted. In this case, Timsort can detect the partially sorted regions and use insertion sort to quickly sort them, reducing the time required to fully sort the data.

The history of Timsort

Timsort was developed by Tim Peters in 2002 for use in the Python programming language. It’s a hybrid sorting algorithm that uses a combination of insertion sort and merge sort techniques, and is designed to efficiently sort a variety of different types of data.

It’s since been adopted by several other programming languages, including Java and C#, due to its efficiency and versatility in handling different types of data.

Use cases for Timsort

As an advanced algorithm, Timsort can be used when sorting data on memory-constrained systems.

-

Sorting in programming languages. Timsort is often used as the default sorting algorithm in these languages because of its efficiency and ability to handle different types of data.

-

Sorting real-world data. Timsort is particularly efficient when sorting real-world data that may be partially sorted or contain already sorted sub-arrays, as it’s able to detect these runs and use insertion sort to quickly sort them, reducing the time required to fully sort the data.

-

Sorting data with different types. It’s designed to efficiently handle different types of data, including numbers, strings, and custom objects. It can detect runs of data with the same type and combine them efficiently using merge sort, reducing the number of comparisons and swaps required.

Timsort implementation

- Take an unsorted list and breaks it into smaller, sorted sub-lists.

- Merge the sub-lists to form a larger, sorted list.

- Repeat the process until the entire list is sorted.

Timsort implementation in Python

def insertion_sort(arr, left=0, right=None): if right is None: right = len(arr) - 1 for i in range(left + 1, right + 1): key_item = arr[i] j = i - 1 while j >= left and arr[j] > key_item: arr[j + 1] = arr[j] j -= 1 arr[j + 1] = key_item return arr def merge(left, right): if not left: return right if not right: return left if left[0] < right[0]: return [left[0]] + merge(left[1:], right) return [right[0]] + merge(left, right[1:]) def timsort(arr): min_run = 32 n = len(arr) for i in range(0, n, min_run): insertion_sort(arr, i, min((i + min_run - 1), n - 1)) size = min_run while size < n: for start in range(0, n, size * 2): midpoint = start + size - 1 end = min((start + size * 2 - 1), (n-1)) merged_array = merge( left=arr[start:midpoint + 1], right=arr[midpoint + 1:end + 1] ) arr[start:start + len(merged_array)] = merged_array size *= 2 return arr items = [6,20,8,19,56,23,87,41,49,53]

print(timsort(items))

Timsort implementation in JavaScript

function insertionSort(arr, left = 0, right = arr.length - 1) { for (let i = left + 1; i <= right; i++) { const keyItem = arr[i]; let j = i - 1; while (j >= left && arr[j] > keyItem) { arr[j + 1] = arr[j]; j--; } arr[j + 1] = keyItem; } return arr;

} function merge(left, right) { let i = 0; let j = 0; const merged = []; while (i < left.length && j < right.length) { if (left[i] < right[j]) { merged.push(left[i]); i++; } else { merged.push(right[j]); j++; } } return merged.concat(left.slice(i)).concat(right.slice(j));

} function timsort(arr) { const minRun = 32; const n = arr.length; for (let i = 0; i < n; i += minRun) { insertionSort(arr, i, Math.min(i + minRun - 1, n - 1)); } let size = minRun; while (size < n) { for (let start = 0; start < n; start += size * 2) { const midpoint = start + size - 1; const end = Math.min(start + size * 2 - 1, n - 1); const merged = merge( arr.slice(start, midpoint + 1), arr.slice(midpoint + 1, end + 1) ); arr.splice(start, merged.length, ...merged); } size *= 2; } return arr;

} let items = [6, 20, 8, 19, 56, 23, 87, 41, 49, 53];

console.log(timsort(items));

All Sorting Algorithms Compared

Note that the time complexity and space complexity listed in the table are worst-case complexities, and actual performance may vary depending on the specific implementation and input data.

What’s the Most Common Sorting Algorithm?

The most commonly used sorting algorithm is probably quicksort. It’s widely used in many programming languages, including C, C++, Java, and Python, as well as in many software applications and libraries. Quicksort is favored for its efficiency and versatility in handling different types of data, and is often used as the default sorting algorithm in programming languages and software frameworks.

However, other sorting algorithms like merge sort and Timsort are also commonly used in various applications due to their efficiency and unique features.

Final Thoughts

Knowing the basics of sorting algorithms is essential for anyone interested in programming, data analysis, or computer science. By understanding the different sorting algorithms and their characteristics, you can improve your ability to select and implement the best algorithm for your specific use case.

The best choice of sorting algorithm depends on several factors, including the size of the input data, the distribution of the data, the available memory, and the desired time complexity.

Sorting algorithms can be categorized based on their time complexity, space complexity, in-place sorting, stable sorting, and adaptive sorting. It’s important to understand the characteristics and trade-offs of different sorting algorithms to select the most appropriate algorithm for a specific use case.