So what is the one thing people can do to make their website better? To answer that, let’s take a step back in time …

The year is 1998, and the web is on the rise. In an attempt to give a high-level overview of the architecture of the WWW, Tim Berners-Lee — yes, that Tim Berners-Lee — publishes a paper called “Web Architecture from 50,000 feet”. The report covers lots of things: content negotiation, the semantic web, HTML, CSS, and cool URIs (which don’t change), among others.

In the article, Berners-Lee also notes a few design principles very early on. One of those principles is the “Rule of Least Power.”

The Rule of Least Power goes like this:

When designing computer systems, one is often faced with a choice between using a more or less powerful language for publishing information, for expressing constraints, or for solving some problem. […] The “Rule of Least Power” suggests choosing the least powerful language suitable for a given purpose.

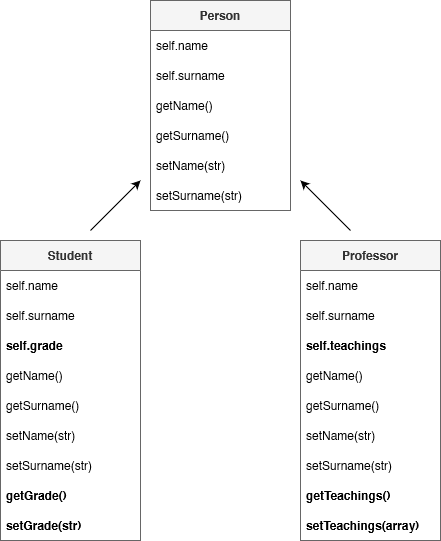

We have three main languages available on the web for the front end:

- HTML: semantically describes the content
- CSS: controls the presentation and layout
- JavaScript: adds interactivity and behavior

The Rule of Least Power suggests doing as much as possible with HTML before resorting to CSS. Once CSS is no longer sufficient, reach for JavaScript, but only if you really have to.

As Derek Featherstone nicely put it:

In the web front-end stack — HTML, CSS, JS, and ARIA — if you can solve a problem with a simpler solution lower in the stack, you should. It’s less fragile, more foolproof, and just works.

Hold up: This doesn’t mean you should set font sizes and colors via markup, a thing we used to do in the “olden” days of the web. Case in point: one of the rules covered in Berners-Lee’s piece is the separation of form and content.

The broken web

It has been almost 25 years since Berners-Lee published that article. Yet, somehow, the message he sent didn’t get through, and many developers (though not all) are unaware of it. Take this situation Drew DeVault encountered not so long ago:

My browser has been perfectly competent at submitting HTML forms for the past 28 years, but for some stupid reason some developer decided to reimplement the form in JavaScript, and now I can’t pay my electricity bill without opening up the dev tools.

Sadly, this is no isolated case but rather a familiar phenomenon. All too often, I see websites and libraries that try to be clever or attempt to reinvent the wheel — primarily by throwing a bunch of JavaScript at it. In their attempt to do so, they achieve the exact opposite of what they were aiming at: those websites become less functional, less accessible, or — even worse — don’t work at all under certain conditions.

While a form might be a familiar example, there are more situations where applying the Rule of Least Power gives better results:

- Smooth scrolling? No need for JavaScript because CSS can do that.
- Need to communicate errors from your JSON-based API? Don’t use an HTTP 200 with `{error: true}` in the response body, but an HTTP status code to communicate the error instead.
- Closing a `<dialog>` via JavaScript? A `<form>` element with `[method="dialog"]` will do just fine.
- Want to lazy load images? That will soon be supported by all modern browsers directly in HTML markup with an attribute.
- A customizable `<select>`? That’s in development as we speak.
- Want to link animations to a scroll-offset? There’s no need for an external JavaScript library as that now is a browser native API, and soon also something that can be done using only CSS.
- Need to prevent certain characters in form inputs? Don’t disable pasting but instead choose a proper input type and/or use the `pattern` attribute.
- Need collapsing sections? `<details>` and `<summary>` are your friends.
- and so on…
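To make the API-error item from the list concrete, here is a minimal sketch of a server-side helper that lets the HTTP status code carry the outcome. The names `toHttpResponse`, `isSuccess`, and the `result.kind` values are hypothetical and chosen only for illustration; a real API would pick the status codes that fit its own error cases.

```javascript
// Hypothetical helper: maps the outcome of an operation to an HTTP response.
// The function names and the `kind` values are illustrative assumptions.
function toHttpResponse(result) {
  switch (result.kind) {
    case "ok":
      return { status: 200, body: JSON.stringify(result.data) };
    case "not_found":
      return { status: 404, body: JSON.stringify({ message: "Resource not found" }) };
    case "invalid":
      return { status: 422, body: JSON.stringify({ message: result.message }) };
    default:
      return { status: 500, body: JSON.stringify({ message: "Unexpected error" }) };
  }
}

// Client side: the status code alone tells us whether the call succeeded,
// so there is no need to parse the body and look for an `error` flag.
function isSuccess(response) {
  return response.status >= 200 && response.status < 300;
}
```

With this shape, generic tooling such as caches, monitoring, and the browser’s network panel can see the failure without knowing anything about the response body.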

In all these examples, we can move some functionality from an upper into a lower layer. Berners-Lee would be proud of us.

Resilience

By choosing a technology lower in the web stack, closer to the core of the web platform, we also gain the benefit of built-in resilience against failures.

JavaScript is terrible at failing. One script that fails to load or gets mangled in the process, or one wrong argument to a function, and your whole app may no longer work. If an error message like “Cannot read property x of undefined” sounds familiar to you, you know what I’m talking about.

CSS, on the other hand, is very good at failing. Even if you have a syntax error in one of your style sheets, the rest of your CSS will still work. Same with HTML. These are forgiving languages.

If you wonder why you should apply the Rule of Least Power, Jeremy Keith brings us the answer in his article “Evaluating Technology”:

We tend to ask “How well does it work?”, but what you should really be asking is “How well does it fail?”

Jeremy Keith

We can do better

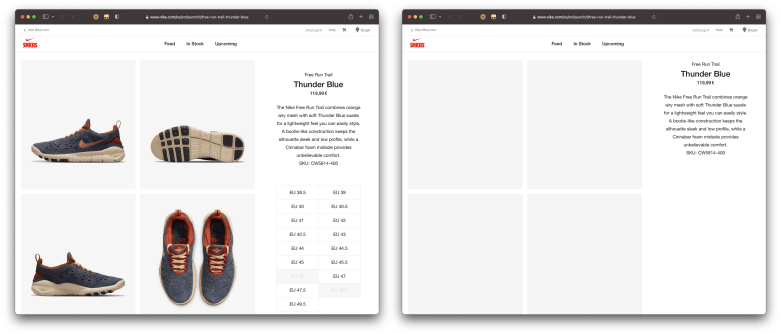

A high-profile website that could benefit from the Rule of Least Power is the Nike website. When you visit their site with JavaScript disabled, you don’t get to see any images, nor do you get to order any shoes.

This over-reliance on JavaScript is not necessary as all those broken features can be built with technologies lower in the frontend stack:

- Why not immediately include `<img>` elements to show the shoe photos instead of dynamically injecting them via JavaScript?
- Why not use a `<form>` with a `<select>` (to choose your size) and a submit `<button>` to order the shoes, rather than managing the entire state and submission through JavaScript?

☝️ If you’re wondering why someone would ever browse the web with JavaScript disabled: it’s often not their choice but an external factor that’s interfering. See “Everyone has JavaScript, right?” for a good explanation on the topic.

Even worse offenders in this category are high-profile sites like Instagram and Twitter. Without JavaScript these websites don’t work at all. They either give you a warning, or simply remain blank. Since when is JavaScript required to show text and images on the web?

Progressive enhancement

It doesn’t have to be as bad as this isolated Nike example. Sometimes it’s smaller components that refuse to work when JavaScript fails. Take a tabbed interface as an example. Even though you can find many JavaScript libraries that provide this functionality, the kicker is that you don’t need JavaScript for that, as HTML, CSS, and ARIA themselves are already capable of getting you very far.

Once you have those base layers in place, use JavaScript to improve the experience further. Think of JavaScript as an enhancement instead of a requirement.
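As a rough sketch of JavaScript as an enhancement, the function below upgrades a regular form to a background submission only when the needed features are present, and otherwise leaves the browser’s native behavior alone. The name `enhanceForm` and the `env` parameter are hypothetical; the sketch also assumes the form is declared with `method="post"`.

```javascript
// Hypothetical sketch: enhance a working HTML form only when possible.
// `form` is an HTMLFormElement (or anything with the same methods);
// `env` stands in for the global scope so the check is easy to exercise.
function enhanceForm(form, env = globalThis) {
  const canEnhance =
    typeof env.fetch === "function" && typeof env.FormData === "function";
  if (!canEnhance) {
    return false; // keep the native form submission untouched
  }
  form.addEventListener("submit", async (event) => {
    event.preventDefault();
    try {
      await env.fetch(form.action, {
        method: form.method,
        body: new env.FormData(form),
      });
    } catch (error) {
      form.submit(); // the request failed: fall back to a normal submission
    }
  });
  return true;
}
```

If the script never loads, nothing here runs and the form still submits the way HTML forms always have.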

The same goes for certain CSS features that may or may not be available. Provide basic styling, and when a feature is available — detectable using @supports — enhance the result you already had.

This approach is known as progressive enhancement: You add functionality as more features become available, making the result better as far as the experience goes, but not so much that the feature cannot work without those extra flourishes.

And for browsers that don’t yet support a particular new feature, you can try to find a polyfill that provides that functionality to those browsers.
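In essence, a polyfill checks whether a feature exists and defines it only when it is missing. The sketch below illustrates that pattern; the `polyfill` helper is a hypothetical name, and the `Array.prototype.at` fallback is a simplified approximation rather than a spec-complete implementation.

```javascript
// Hypothetical helper: install `implementation` only if `target[name]` is missing.
function polyfill(target, name, implementation) {
  if (typeof target[name] === "function") {
    return false; // native support exists: leave it alone
  }
  Object.defineProperty(target, name, {
    value: implementation,
    writable: true,
    configurable: true,
    enumerable: false, // match how built-in methods are defined
  });
  return true;
}

// Simplified fallback for Array.prototype.at (negative indexes count from the end).
polyfill(Array.prototype, "at", function at(index) {
  const relative = Math.trunc(index) || 0;
  const position = relative >= 0 ? relative : this.length + relative;
  return position >= 0 && position < this.length ? this[position] : undefined;
});
```

Browsers that already ship the feature skip the fallback entirely, so the polyfill can be removed later without touching the calling code.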

The web catches up

Since its early days, the web platform has gained many new features. New HTML elements were defined, JavaScript (the language) has matured, and CSS has gotten many powerful new features for building layouts, animating elements, etc.

Things that were impossible years ago and that could only be done by relying on external technologies, like Flash, are now built into the browser itself.

A classic example is the set of features jQuery introduced. Over ten years ago, jQuery was the very first thing to drop into a project. Today, that’s no longer the case, as the web platform has caught up and now has `document.querySelectorAll()`, `Element.classList`, etc. built in, all inspired by features jQuery gave us. You could say that jQuery was a polyfill before polyfills were even called polyfills.
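As an illustration, the sketch below does roughly what `$(selector).addClass(name)` did in jQuery, using only the built-in APIs mentioned above. The function name `addClassToAll` and the selector and class names in the usage note are hypothetical.

```javascript
// Add a class to every element matching a selector, without any library.
// `root` is any object exposing querySelectorAll, such as `document`.
function addClassToAll(root, selector, className) {
  const elements = root.querySelectorAll(selector);
  elements.forEach((element) => element.classList.add(className));
  return elements.length; // number of elements that were updated
}
```

In a browser, this could be called as `addClassToAll(document, ".shoe", "is-visible")`.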

While jQuery might be a familiar example, there are many other situations where the web has caught up:

The central theme here is that these features no longer rely on a polyfill or an external library but have shifted closer to the core of the web stack. The web catches up.

While some of these new APIs can be pretty abstract, there are libraries out there that you can plug into your code. An example is Redaxios, which uses fetch under the hood while also exposing a developer-friendly, Axios-compatible API. It wouldn’t surprise me if these convenience methods eventually trickle down into the web platform.
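To give a feel for how thin such a convenience layer over `fetch` can be, here is a minimal sketch. It is not Redaxios’s actual code and does not claim Axios compatibility; `createClient` and the shape of the returned object are assumptions made purely for illustration.

```javascript
// Hypothetical wrapper around fetch, not Redaxios's implementation.
// `fetchImpl` defaults to the platform's fetch and can be swapped out.
function createClient(fetchImpl = globalThis.fetch) {
  async function request(method, url, data) {
    const options = { method, headers: {} };
    if (data !== undefined) {
      options.headers["Content-Type"] = "application/json";
      options.body = JSON.stringify(data);
    }
    const response = await fetchImpl(url, options);
    if (!response.ok) {
      // fetch resolves even on HTTP errors; turn those into rejections.
      throw new Error(`Request failed with status ${response.status}`);
    }
    return { status: response.status, data: await response.json() };
  }
  return {
    get: (url) => request("GET", url),
    post: (url, data) => request("POST", url, data),
  };
}
```

The wrapper adds only JSON handling and error-on-failure behavior; everything else is left to the platform.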

In closing

What Berners-Lee wrote almost 25 years ago stands the test of time. It’s up to us, developers, to honor that message. By embracing what the web platform gives us — instead of trying to fight against it — we can build better websites.

Keep it simple. Apply the Rule of Least Power. Build with progressive enhancement in mind.

HTML, CSS, and JavaScript — in that order.