Have you ever wondered how Google knows about all those websites it shows when you do a search? It is a machine, so it is not omniscient (we hope). Therefore, it must have a way of collecting information about websites on the internet. How else could it show them to you in a search result? It is actually a pretty interesting process, and we are going to tell you all about it. It is all done by software applications (bots) called web crawlers. They are called crawlers because they crawl all over the World Wide Web like spiders!

These web crawlers do as the name suggests: they crawl the internet to index it. Indexing records what each page is about so that the page can later be brought up in relevant search results. Search engines almost always operate such crawlers, which visit countless websites daily. Crawling is entirely automated because, as you can imagine, there are far too many pages online for people to catalog them manually. With that said, let’s dive deeper into what a web crawler is and how it can actually benefit your website!

What Is a Web Crawler?


Let’s talk about web crawlers in more depth. They are an essential part of the internet nowadays. Without them, search engines would be far less precise than they are now, and they would return far fewer results.

Before explaining web crawlers in more detail, though, we should first understand what search indexing is. It is actually very simple once you compare it to something in the real world: search indexing is like creating a catalog for a library. Such a catalog helps visitors find books quickly instead of searching shelf by shelf. That is precisely what a search index is: a catalog of online pages. Search engines then use this catalog to fetch the pages relevant to a given search query.

Web crawlers are the librarians of the online world. Like a librarian tidying up and cataloging a messy library, web crawlers browse the internet, note each page’s content, and add it to the appropriate index. As you can see, search indexing and web crawling are familiar real-world concepts applied to the online world.
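To make the catalog analogy concrete, here is a minimal Python sketch of an inverted index, a common way to build this kind of catalog. It maps each word to the set of pages that contain it, so a query only has to look up its words instead of rereading every page. The page URLs and texts are made-up examples, and real search engines add much more on top (word normalization, ranking, and so on).

```python
from collections import defaultdict


def build_index(pages: dict[str, str]) -> dict[str, set[str]]:
    """Map every lowercase word to the set of page URLs that contain it."""
    index: dict[str, set[str]] = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word.strip(".,!?")].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the pages that contain every word of the query."""
    words = [w.strip(".,!?") for w in query.lower().split()]
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())  # keep only pages that also contain this word
    return results


# Made-up example pages.
pages = {
    "https://example.com/hosting": "Shared web hosting plans",
    "https://example.com/crawlers": "How web crawlers index pages",
}
index = build_index(pages)
print(search(index, "web hosting"))  # {'https://example.com/hosting'}
```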

How Does a Web Crawler Work?

Now that we know what search indexing and, more importantly, web crawlers are, it is time to discuss how a crawler knows which pages to visit and how often to visit them. Because the internet is a vast network that never stops expanding, it is hard to imagine the sheer number of pages crawlers are trying to index, and the process is probably never-ending. So where do they even start? They start from a list of URLs called a seed. The seed points the crawler to specific web pages, where the process begins. Conveniently, web pages contain hyperlinks, which the crawler follows to reach new pages. It then follows the hyperlinks on those subsequent pages, and so on.
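The seed-and-follow process described above is essentially a breadth-first traversal of the web’s link graph. Here is a minimal Python sketch under one big simplifying assumption: the hypothetical LINKS dictionary stands in for the real web, so the example runs without any network access.

```python
from collections import deque

# A hypothetical, in-memory "web": each URL maps to the hyperlinks found on that page.
# A real crawler would download each page over HTTP and extract its links instead.
LINKS = {
    "https://a.example/": ["https://a.example/about", "https://b.example/"],
    "https://a.example/about": ["https://a.example/"],
    "https://b.example/": ["https://c.example/"],
    "https://c.example/": [],
}


def crawl(seed, max_pages=100):
    """Visit pages breadth-first, starting from the seed URLs and following hyperlinks."""
    frontier = deque(dict.fromkeys(seed))  # URLs waiting to be visited, without duplicates
    seen = set(frontier)                   # URLs already queued, so no page is visited twice
    visited = []
    while frontier and len(visited) < max_pages:
        url = frontier.popleft()
        visited.append(url)
        for link in LINKS.get(url, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


print(crawl(["https://a.example/"]))
# ['https://a.example/', 'https://a.example/about', 'https://b.example/', 'https://c.example/']
```

The max_pages limit reflects the point made next: a crawler never tries to visit everything in one go.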

Web crawlers don’t strive to index the entirety of the internet since, as we mentioned above, that is a nigh impossible task. Instead, they follow a few rules that dictate which pages get indexed and which ones are left for later.

- Page Importance – Web crawlers prioritize pages that contain valuable information, regardless of the topic. They estimate whether a page has such information from several factors: the number of backlinks, internal and outbound links, traffic, and domain and page authority. This is the foundational rule of web crawlers and has the greatest impact on which pages get crawled;

- Robots.txt Rules – robots.txt is both a file and a protocol: the Robots Exclusion Protocol. The file sits in the root directory of the website on the hosting server and contains access rules for the bots themselves. Before crawling a website, well-behaved bots check for a robots.txt file and, if one is present, read its rules. If the rules forbid crawling a page, the bot skips it and moves on. The file is helpful if you want to keep bots from crawling some of your pages or want only specific bots to have access. We will show you how to do that later on;

- Re-crawling Frequency – Pages on the internet are updated constantly, which means they have to be re-crawled and re-indexed. But a bot can’t just sit on a page and wait for new content to appear. Instead, each search engine decides how often to return to a page to look for new content. For instance, Google does it between once a week and once a month, depending on the page’s importance; more popular pages get re-crawled more often. You can also manually request a crawl from Google by following their guide here.
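Here is a minimal sketch of how the first two rules could fit together: a priority queue hands out the most important pages first, and Python’s standard urllib.robotparser module checks each URL against robots.txt before it is crawled. The importance scores, the robots.txt content, and the ExampleBot name are all hypothetical; real search engines compute importance from many signals and do not publish their formulas.

```python
import heapq
from urllib.robotparser import RobotFileParser

# Hypothetical importance scores, e.g. derived from backlink counts.
IMPORTANCE = {
    "https://example.com/": 120,
    "https://example.com/blog": 45,
    "https://example.com/private/report": 80,
    "https://example.com/old-page": 3,
}

# Hypothetical robots.txt for example.com.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def crawl_order(urls, robots_txt, user_agent="ExampleBot"):
    """Return the URLs the bot may crawl, most important first."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    # heapq is a min-heap, so push negative scores to pop the highest score first.
    heap = [(-IMPORTANCE.get(url, 0), url) for url in urls]
    heapq.heapify(heap)
    order = []
    while heap:
        _, url = heapq.heappop(heap)
        if rules.can_fetch(user_agent, url):  # honor robots.txt before crawling
            order.append(url)
    return order


print(crawl_order(IMPORTANCE, ROBOTS_TXT))
# ['https://example.com/', 'https://example.com/blog', 'https://example.com/old-page']
```

Re-crawling could reuse the same queue: once a page has been crawled, it is pushed back with a later due date, sooner for more important pages.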

And that is how a crawler works! It all makes sense when we sit and think about it, and it also makes us appreciate the scope of the internet. It is a truly vast place that we take for granted, but at least now you have more insight into what makes it tick.

Types of Web Crawlers

Web crawlers all index the internet, but they don’t all use the same method, nor are they all run by the same company. In this part of our blog post, we will explore the different types of web crawlers based on their functions and who is hosting them.

On the one hand, we can group web crawlers by how they crawl a website and by their general purpose. These crawlers all index the internet, but some keep moving onward, indexing new pages all the time, while others retrace those steps to look for updates. With that said, here are the three most popular types of web crawlers by functionality.

- Focused Web Crawler – This is the most fundamental type of bot. It collects information based on a predetermined set of requirements, which are often outlined in the seed we discussed earlier. For instance, it might look solely for content about web hosting and follow hyperlinks only if they pertain to that topic. These bots constantly move forward, mapping out the internet;

- Incremental Web Crawler – As opposed to the one above, these bots revisit previously indexed pages to look for updates. For instance, they replace any outdated links in the index with new ones;

- Parallel Web Crawler – A bit more advanced than the other two, this web crawler utilizes parallelization to perform multiple crawls simultaneously.
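One common way for an incremental crawler to notice updates is to store a fingerprint (hash) of each page’s content and compare it on the next visit. The minimal Python sketch below assumes the pages have already been fetched; the URLs and content are made up, and SHA-256 is just one reasonable choice of hash.

```python
import hashlib


def fingerprint(content: str) -> str:
    """Return a SHA-256 digest of the page content."""
    return hashlib.sha256(content.encode("utf-8")).hexdigest()


def recrawl(index: dict[str, str], fetched: dict[str, str]) -> list[str]:
    """Compare freshly fetched pages with stored fingerprints; update and return changed URLs."""
    changed = []
    for url, content in fetched.items():
        digest = fingerprint(content)
        if index.get(url) != digest:  # new page, or content differs from the last visit
            index[url] = digest
            changed.append(url)
    return changed


index = {}
recrawl(index, {"https://example.com/": "Welcome", "https://example.com/plans": "Plan A"})
print(recrawl(index, {"https://example.com/": "Welcome", "https://example.com/plans": "Plan B"}))
# ['https://example.com/plans']
```

Only the changed pages then need to be re-indexed, which is what makes this kind of crawler cheaper than starting over.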

On the other hand, we have different crawlers based on who hosts or owns them. You are probably familiar with a few of them already, but if not, here are the most popular ones, along with their user-agent. We will explain what that is in the next part of the post.

- Googlebot – The most famous web crawler, Googlebot is the colloquial name for two bots: Googlebot Desktop and Googlebot Smartphone. As the name implies, Google owns and operates it, and it is widely regarded as the most effective crawler currently in use. Its user-agent is Googlebot;

- Bingbot – This is Microsoft’s own crawler, deployed in 2010 to index the internet for Microsoft’s search engine, Bing. It is similar to Googlebot in many ways, and its user-agent is one example of that: Bingbot;

- Slurp – This is Yahoo’s crawler. Yahoo’s search results are largely powered by Bing, and therefore by Bingbot from above, but Slurp crawls Yahoo-specific websites much more frequently to keep those results up to date. Its user-agent is Slurp;

- DuckDuckBot – DuckDuckGo has recently become a rather popular search engine because it emphasizes privacy in a way the engines above do not: it does not track your searches, which many people have come to appreciate. Its user-agent is DuckDuckBot.
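A bot announces itself through the User-Agent header of each HTTP request, and the tokens listed above appear inside that header. This minimal Python sketch recognizes them with a case-insensitive substring match. The example header is the one Google documents for Googlebot; keep in mind that any client can send any User-Agent, so for stronger assurance, search engines such as Google recommend verifying a crawler with a reverse DNS lookup.

```python
from typing import Optional

# User-agent tokens from the list above; matching is case-insensitive.
KNOWN_CRAWLERS = ["Googlebot", "Bingbot", "Slurp", "DuckDuckBot"]


def identify_crawler(user_agent_header: str) -> Optional[str]:
    """Return the known crawler token contained in a User-Agent header, if any."""
    header = user_agent_header.lower()
    for token in KNOWN_CRAWLERS:
        if token.lower() in header:
            return token
    return None  # not one of the crawlers we know about


print(identify_crawler(
    "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
))  # Googlebot
```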

While it is not imperative to understand the different types of web crawlers, knowing what types there are and who owns them is helpful knowledge. It can help you optimize your website for a specific search engine’s requirements, for instance.

How Can a Web Crawler Benefit Your Website?

We know how it sounds: some automated bot is accessing your website without your knowledge. It doesn’t sound appealing, but we assure you that legitimate bots crawl your website for its own benefit. If your website isn’t crawled, it will not appear in search results. Some owners of personal websites might prefer that. For businesses and companies, however, an online presence is imperative, and to achieve it, their websites must appear in search results.

That is the most significant upside: discoverability and exposure. When a website launches, it gets crawled and then appears in search results. That is essential if you want your website to have great SEO, and we all know how important SEO is nowadays. The more optimized your website is for crawling, the easier the crawler’s job will be, the more it will “like” the website, and the more readily it will return each time you update it.

Your link structure should be robust and easy to read and navigate. For instance, your pages should have clear names and slugs, and the same goes for each image and other media file. Avoid randomly generated links: just as people don’t remember them, crawlers don’t favor them either. The great thing here is that this will not only help your website get crawled more often and more quickly, but it will also help with SEO in general.
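Clear slugs are usually derived from the page title. Below is a minimal Python sketch of one way to do that, assuming ASCII-only slugs are acceptable for your site: accented letters are reduced to their base letters, and every run of other non-alphanumeric characters becomes a single hyphen. Most content management systems, WordPress included, generate slugs for you, so treat this as an illustration of what a clean slug looks like rather than something you must implement.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Turn a page title into a short, readable URL slug."""
    # Decompose accented characters and drop anything that is not plain ASCII.
    text = unicodedata.normalize("NFKD", title).encode("ascii", "ignore").decode("ascii")
    # Replace every run of non-alphanumeric characters with a single hyphen.
    text = re.sub(r"[^a-z0-9]+", "-", text.lower())
    return text.strip("-")


print(slugify("What Is a Web Crawler?"))  # what-is-a-web-crawler
```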

The more optimized your website is (not just regarding its links, but its keywords as well), the higher it will appear in search results. The higher it is placed, the more traffic it will get, attracting bots to crawl it more often. Getting crawled more often will update the index, keep the search engine information relevant, and drive more people to your website.

Crawlers sound great, don’t they? That is because they are, and well-behaved crawlers have little noticeable impact on your website’s performance. But some crawlers are not as benevolent.

How to Deny Crawlers

As with every other kind of application online, malicious web crawlers do exist. Fortunately, a crawler cannot directly hack your website or compromise its functionality. However, it can generate unexpected script requests and bandwidth usage, which can be an issue for websites hosted on plans with monthly quotas. The robots.txt file and the user-agents we discussed earlier in this post can help.

Create the robots.txt file in your website’s root directory on the hosting server, so that it is reachable at the root of your domain (for example, https://example.com/robots.txt). Crawlers look for the file only at that location and ignore robots.txt files placed in subdirectories, so its rules cover your whole website. To keep bots out of just one part of the site, list that directory’s path in the rules instead. But how exactly do you stop a web crawler from accessing your website, and how do you even identify the malicious one?
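Because the location is fixed at the root of the host, a crawler can work out where to look for the rules from any page URL on your site. This short Python sketch uses the standard urljoin function to show that only the scheme and host of the page matter; example.com is a placeholder domain.

```python
from urllib.parse import urljoin


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt location crawlers check for a given page."""
    # A path starting with "/" replaces the page's whole path, keeping scheme and host.
    return urljoin(page_url, "/robots.txt")


print(robots_txt_url("https://example.com/blog/posts/web-crawlers"))
# https://example.com/robots.txt
```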

To stop a web crawler from crawling your website, you only need to place these few lines in the robots.txt file.

# Block one crawler
User-agent: Googlebot
Disallow: /

We used Googlebot as an example; these lines stop Googlebot from crawling your website. The first line, beginning with a hash (#), is a comment that crawlers ignore; it is there to remind you of the purpose of the other lines. The second line specifies the bot that the third line applies to. The third line can either disallow or allow access. The slash after Disallow: represents the root directory of the website, so the bot will not crawl the website at all. If we had instead used /images, the crawler would have crawled the entire website except for the /images directory. Keep each group’s lines together without blank lines between them, since some parsers treat a blank line as the end of a group.

Additionally, you can write several groups of rules, each starting with its own User-agent line. A crawler obeys the group that most specifically matches its user-agent, which gives you flexibility with what you allow and disallow for each bot.

# Allow only Googlebot
User-agent: Googlebot
Allow: /

# Stop all other bots
User-agent: *
Disallow: /

The example above allows only Googlebot to crawl your website. The slash after Allow: or Disallow: represents the root directory of your website, so you can place the path to any directory or file after it to stop bots from crawling just those things. Meanwhile, the asterisk matches every bot that does not have a more specific group of its own. But how do you identify potentially problematic bots? That depends entirely on the service hosting your website.
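Before publishing rules, you can check how a parser interprets them. This minimal sketch feeds both example files above to Python’s standard urllib.robotparser module and asks whether a few bots may fetch a sample page on the placeholder domain example.com. Note that Python’s parser applies the first matching rule in a group, while Google picks the most specific (longest) matching rule, so results can differ when Allow and Disallow paths overlap.

```python
from urllib.robotparser import RobotFileParser

# The two example files from above. Python's parser ends a group at a blank line,
# so each group's lines are kept together here.
BLOCK_ONE = """\
# Block one crawler
User-agent: Googlebot
Disallow: /
"""

ALLOW_ONLY_GOOGLEBOT = """\
# Allow only Googlebot
User-agent: Googlebot
Allow: /

# Stop all other bots
User-agent: *
Disallow: /
"""


def allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Check whether robots_txt lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


page = "https://example.com/blog/"
print(allowed(BLOCK_ONE, "Googlebot", page))               # False
print(allowed(BLOCK_ONE, "Bingbot", page))                 # True
print(allowed(ALLOW_ONLY_GOOGLEBOT, "Googlebot", page))    # True
print(allowed(ALLOW_ONLY_GOOGLEBOT, "DuckDuckBot", page))  # False
```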

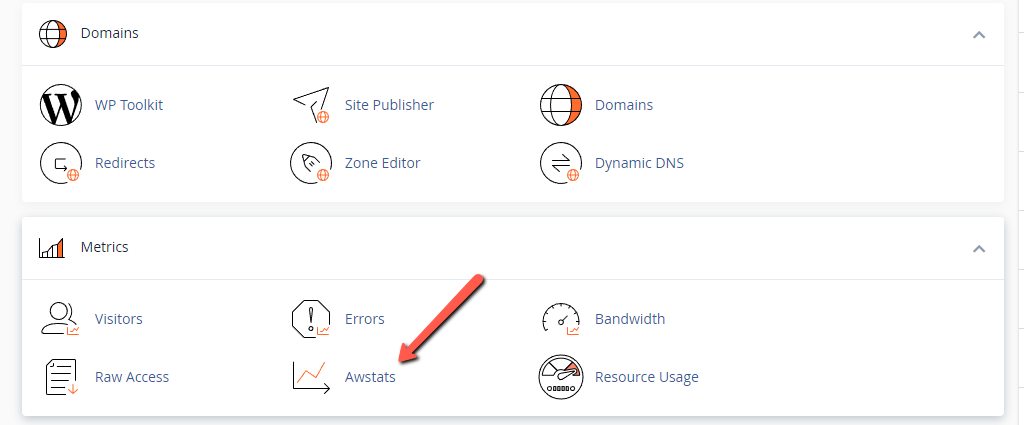

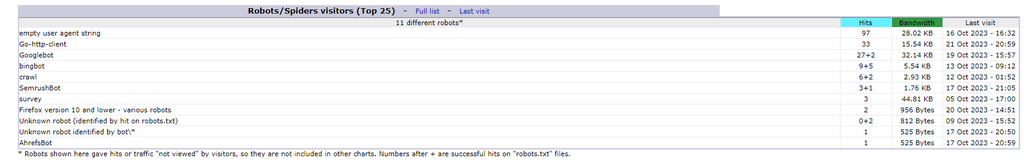

We will use our hosting plans as an example. They use cPanel as their control panel, which includes a tool called AWStats.

Within it, you will find a table that shows all bots that have visited the website. It lists each bot’s name, how many times it has visited, and how much bandwidth it has used.
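AWStats builds its bot table from your server’s access log. If you have access to the raw log, you can compute a similar summary yourself; this minimal Python sketch counts requests and bytes per User-Agent. It assumes the log uses the common “combined” format, and the sample lines (IP addresses from documentation ranges, made-up bot names) are illustrative only; where the log file lives depends on your hosting provider.

```python
import re
from collections import defaultdict

# Matches the "combined" log format used by default on many Apache and Nginx servers.
LOG_LINE = re.compile(
    r'\S+ \S+ \S+ \[[^\]]+\] "[^"]*" \d{3} (?P<bytes>\d+|-) "[^"]*" "(?P<agent>[^"]*)"'
)


def bot_traffic(lines):
    """Return {user_agent: [hits, bytes_sent]} for every parseable log line."""
    stats = defaultdict(lambda: [0, 0])
    for line in lines:
        match = LOG_LINE.match(line)
        if match is None:
            continue  # skip lines in any other format
        size = match["bytes"]
        entry = stats[match["agent"]]
        entry[0] += 1
        entry[1] += 0 if size == "-" else int(size)  # "-" means no body was sent
    return dict(stats)


# Made-up sample lines; on a real server you would read them from the access log file.
sample = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 5120 "-" "ExampleBot/1.0"',
    '203.0.113.5 - - [10/Oct/2023:13:55:40 +0000] "GET /blog HTTP/1.1" 200 2048 "-" "ExampleBot/1.0"',
    '198.51.100.7 - - [10/Oct/2023:13:56:00 +0000] "GET / HTTP/1.1" 304 - "-" "OtherBot/2.0"',
]
for agent, (hits, sent) in sorted(bot_traffic(sample).items(), key=lambda kv: -kv[1][1]):
    print(f"{agent}: {hits} hits, {sent} bytes")
```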

The name you see is the user-agent you need to place in the robots.txt file. Keep in mind that only legitimate bots comply with the file. Legitimate bots will visit your website regularly, and you shouldn’t worry about them: they will not overstay their welcome or use up much of your hosting plan’s resource quotas (if any are in place). If you notice any spikes in script executions or bandwidth, contact your hosting provider immediately. The cause might be an overly eager bot that is crawling your website far too often. You can either block it or reduce its crawl rate. For Googlebot, Google Search Central documents how to reduce the crawl rate. For other bots, placing this line in the robots.txt file can slow them down:

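Crawl-delay:

You can place any number after the colon; it is the number of seconds a bot should wait between successive requests to your website. Like the other rules, the line belongs inside a User-agent group. Support varies by crawler: Bingbot honors the directive, but Googlebot ignores it.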
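To see the directive in action, this minimal Python sketch reads Crawl-delay with the standard urllib.robotparser module and works out when a polite bot would send each request. The delay of 10 seconds and the ExampleBot name are hypothetical; a real bot would call time.sleep(delay) between requests, but here the timestamps are only computed so the example finishes instantly.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt asking every bot to wait 10 seconds between requests.
ROBOTS_TXT = """\
User-agent: *
Crawl-delay: 10
"""


def request_times(robots_txt, user_agent, n_requests, start=0.0):
    """Return when a polite bot would send each of n_requests (seconds from start)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent) or 0  # None when no Crawl-delay applies
    return [start + i * delay for i in range(n_requests)]


print(request_times(ROBOTS_TXT, "ExampleBot", 3))  # [0.0, 10.0, 20.0]
```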

If you use WordPress, you can also install the Blackhole for Bad Bots plugin, which traps bots that do not follow the rules in your robots.txt file: the “Bad Bots.” It is a harmless plugin that adds a layer of security to your site, protecting it from bots that waste server resources. We have an excellent blog post about security that covers it if you want to read more.
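The idea behind such traps is simple: robots.txt disallows a trap URL that is reachable only through a hidden link, so a rule-following bot never requests it, and any client that does request it has ignored robots.txt. The minimal Python sketch below illustrates that general technique; it is not the plugin’s actual code, and the /blackhole/ path, the IP addresses, and the ban-by-IP policy are assumptions for illustration.

```python
# Hypothetical trap path: disallowed in robots.txt and linked only from a hidden link,
# so a bot that follows the rules never requests it.
TRAP_PATH = "/blackhole/"

# What you would publish at the site root so that well-behaved bots stay out of the trap.
ROBOTS_TXT = f"""\
User-agent: *
Disallow: {TRAP_PATH}
"""


class BotTrap:
    """Remember clients that requested the trap path and refuse their later requests."""

    def __init__(self) -> None:
        self.banned: set[str] = set()

    def handle(self, client_ip: str, path: str) -> int:
        """Return the HTTP status code to send for this request."""
        if client_ip in self.banned:
            return 403  # already trapped: refuse everything
        if path.startswith(TRAP_PATH):
            self.banned.add(client_ip)  # it ignored robots.txt, so trap it
            return 403
        return 200


trap = BotTrap()
print(trap.handle("203.0.113.9", "/"))            # 200
print(trap.handle("203.0.113.9", "/blackhole/"))  # 403
print(trap.handle("203.0.113.9", "/about"))       # 403
```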

Final Thoughts

Web crawlers are essential for your website’s discoverability and also help with its SEO. Without them, we wouldn’t have the search engines we use daily, nor the slew of other services that rely on those search engines. The internet would be a much more closed-off space, but with the help of crawlers, all we could ever need or want is at our fingertips.