As AI-powered image generators have become more accessible, so have websites that digitally remove the clothes of people in photos. One of these sites has an unsettling feature that provides a glimpse of how these apps are used: two feeds of what appear to be photos uploaded by users who want to “nudify” the subjects.

The feeds of images are a shocking display of intended victims. WIRED saw some images of girls who were clearly children. Other photos showed adults and had captions indicating that they were female friends or female strangers. The site's homepage does not show visitors who aren't logged in any of the fake nude images that may have been produced.

People who want to create and save deepfake nude images are asked to log in to the site using a cryptocurrency wallet. Pricing isn’t currently listed, but in a 2022 video posted by an affiliated YouTube page, the website let users buy credits to create deepfake nude images, starting at 5 credits for $5. WIRED learned about the site from a post on a subreddit about NFT marketplace OpenSea, which linked to the YouTube page. After WIRED contacted YouTube, the platform said it terminated the channel; Reddit told WIRED that the user had been banned.

WIRED is not identifying the website, which is still online, to protect the women and girls who remain on its feeds. The site’s IP address, which went live in February 2022, belongs to internet security and infrastructure provider Cloudflare. When asked about its involvement, company spokesperson Jackie Dutton noted the difference between providing a site’s IP address, as Cloudflare does, and hosting its contents, which it does not.

WIRED notified the National Center for Missing & Exploited Children, which helps report cases of child exploitation to law enforcement, about the site’s existence.

AI developers like OpenAI and Stability AI say their image generators are for commercial and artistic uses and have guardrails to prevent harmful content. But open source AI image-making technology is now relatively powerful, and creating pornography is one of its most popular uses. As image generation has become more readily available, the problem of nonconsensual nude deepfake images, most often targeting women, has grown more widespread and severe. Earlier this month, WIRED reported that two Florida teenagers were arrested for allegedly creating and sharing AI-generated nude images of their middle school classmates without consent, in what appears to be the first case of its kind.

Mary Anne Franks, a professor at the George Washington University School of Law who has studied the problem of nonconsensual explicit imagery, says that the deepnude website highlights a grim reality: There are far more incidents involving nonconsensual AI-generated nude images of women and minors than the public currently knows about. The few public cases were exposed only because the images were shared within a community, and someone heard about it and raised the alarm.

“There’s gonna be all kinds of sites like this that are impossible to chase down, and most victims have no idea that this has happened to them until someone happens to flag it for them,” Franks says.

Nonconsensual Images


The website reviewed by WIRED has feeds with apparently user-submitted photos on two separate pages. One is labeled “Home” and the other “Explore.” Several of the photos clearly showed girls under the age of 18.

One image showed a young girl with a flower in her hair standing against a tree. Another showed a girl in what appears to be a middle or high school classroom. The photo, seemingly taken discreetly by a classmate, is captioned "PORN."

Another image on the site showed a group of young teens who appear to be in middle school: a boy taking a selfie in what appears to be a school gymnasium with two girls, who smile and pose for the picture. The boy’s features were obscured by a Snapchat lens that enlarged his eyes so much that they covered his face.

Captions on the apparently uploaded images indicated they include images of friends, classmates, and romantic partners. "My gf," reads one caption, on a photo of a young woman taking a selfie in a mirror.

Many of the photos showed influencers who are popular on TikTok, Instagram, and other social media platforms. Other photos appeared to be Instagram screenshots of people sharing images from their everyday lives. One image showed a young woman smiling with a dessert topped with a celebratory candle.

Several images appeared to show people who were complete strangers to the person who took the photo. One image, taken from behind, depicted a woman or girl who was not posing for a photo but was simply standing near what appears to be a tourist attraction.

Some of the images in the feeds reviewed by WIRED were cropped to remove the faces of women and girls, showing only their chest or crotch.

Huge Audience

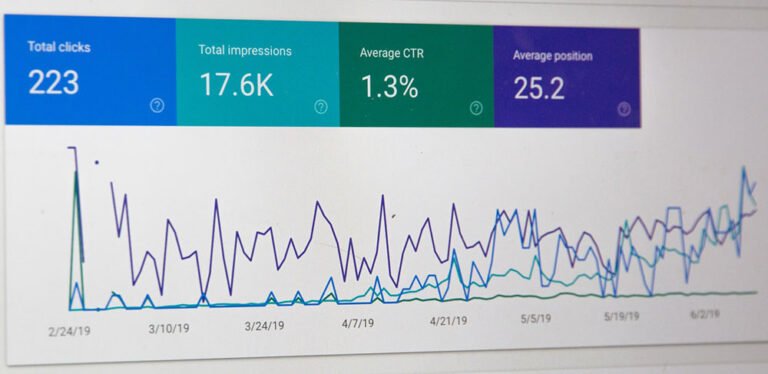

Over an eight-day period of monitoring the site, WIRED saw five new images of women appear on the Home feed, and three on the Explore page. Stats listed on the site showed that most of these images accumulated hundreds of “views.” It’s unclear if all images submitted to the site make it to the Home or Explore feed, or how views are tabulated. Every post on the Home feed has at least a few dozen views.

Photos of celebrities and people with large Instagram followings top the list of “Most Viewed” images listed on the site. The most-viewed people of all time on the site are actor Jenna Ortega with more than 66,000 views, singer-songwriter Taylor Swift with more than 27,000 views, and an influencer and DJ from Malaysia with more than 26,000 views.

Swift and Ortega have been targeted with deepfake nudes before. The circulation of fake nude images of Swift on X in January triggered a moment of renewed discussion about the impacts of deepfakes and the need for greater legal protections for victims. This month, NBC reported that, for seven months, Meta had hosted ads for a deepnude app. The app boasted about its ability to “undress” people, using a picture of Jenna Ortega from when she was 16 years old.

In the US, no federal law targets the distribution of fake, nonconsensual nude images. A handful of states have enacted their own laws. But AI-generated nude images of minors come under the same category as other child sexual abuse material, or CSAM, says Jennifer Newman, executive director of the NCMEC’s Exploited Children’s Division.

“If it is indistinguishable from an image of a live victim, of a real child, then that is child sexual abuse material to us,” Newman says. “And we will treat it as such as we’re processing our reports, as we’re getting these reports out to law enforcement.”

In 2023, Newman says, NCMEC received about 4,700 reports that “somehow connect to generative AI technology.”

“Pathetic Bros”

People who want to create and save deepfake nude images on the site are asked to log in using either a Coinbase, Metamask, or WalletConnect cryptocurrency wallet. Coinbase spokesperson McKenna Otterstedt said that the company is launching an internal investigation into the site’s integration with the company’s wallet. WalletConnect and ConsenSys-owned Metamask did not respond to requests for comment.

In November 2022, the deepnude site’s YouTube channel posted a video claiming users could “buy credit” with Visa or Mastercard. Neither of the two payment processors returned WIRED’s requests for comment.

On OpenSea, a marketplace for NFTs, the site listed 30 NFTs in 2022 with unedited, not deepfaked, pictures of different Instagram and TikTok influencers, all women. After buying an NFT with the ether cryptocurrency ($280 worth at today's exchange rate), owners would get access to the website, which, according to a web archive, was in its early stages at the time. "Privacy is the ultimate priority" for its users, the NFT listings said.

The NFTs were categorized with tags referring to the women’s perceived features. The categories included Boob Size, Country (with most of the women listed as from Malaysia or Taiwan), and Traits, with tags including “cute,” “innocent,” and “motherly.”

None of the NFTs listed by the account ever sold. OpenSea deleted the listings and the account within 90 minutes of WIRED contacting the company. None of the women shown in the NFTs responded to requests for comment.

It’s unclear who, or how many people, created or own the deepnude website. The now deleted OpenSea account had a profile image identical to the third Google Image result for “nerd.” The account bio said that the creator’s mantra is to “reveal the shitty thing in this world” and then share it with “all douche and pathetic bros.”

An X account linked from the OpenSea account used the same bio and also linked to a now inactive blog about “Whitehat, Blackhat Hacking” and “Scamming and Money Making.” The account’s owner appears to have been one of three contributors to the blog, where he went by the moniker 69 Fucker.

The website was promoted on Reddit by just one user, who had a profile picture of a man of East Asian descent who appeared to be under 50. However, an archive of the website from March 2022 claims that the site "was created by 9 horny skill-full people." The majority of the team's profile images appeared to be stock photos, and the job titles were all facetious. Three of them were Horny Director, Scary Stalker, and Booty Director.

An email address associated with the website did not respond to a request for comment.

Title: The Disturbing Impact of Deepfake Nude Generators on Their Victims

Introduction:

In the digital age, advancements in technology have brought both convenience and risks. One such risk is the emergence of deepfake technology, which has the potential to manipulate and exploit individuals by creating highly realistic fake content. Among the various forms of deepfakes, the creation and distribution of non-consensual nude images using deepfake algorithms have become a growing concern. This article explores the disturbing impact of a deepfake nude generator on its victims, shedding light on the consequences and the urgent need for preventive measures.

Understanding Deepfake Nude Generators:

Deepfake nude generators use artificial intelligence (AI) to superimpose a person's face onto explicit content or to digitally remove the clothing from an ordinary photo, creating realistic-looking fake nude images or videos. These tools exploit the easy availability of personal photos and videos on social media platforms, making it easier than ever for perpetrators to create and distribute non-consensual explicit content.

The Psychological Toll on Victims:

The victims of deepfake nude generators often experience severe emotional distress, anxiety, and humiliation. The violation of their privacy and the fear of being exposed to friends, family, or colleagues can lead to long-lasting psychological trauma. The impact on their mental health can be devastating, causing depression, self-isolation, and even suicidal thoughts. The feeling of powerlessness and loss of control over one’s own image exacerbates the distress experienced by victims.

Professional and Personal Repercussions:

The consequences of being targeted by a deepfake nude generator can extend beyond personal well-being. Victims may face professional repercussions, such as damage to their reputation, loss of job opportunities, or even harassment in the workplace. The release of such explicit content can also strain personal relationships, damaging friendships, breaking trust, and weakening family bonds.

Legal Challenges and Inadequate Protections:

Addressing deepfake nude generators poses significant legal challenges. Many jurisdictions lack specific legislation that adequately addresses the creation and distribution of non-consensual explicit content. This legal gap makes it difficult for victims to seek justice and hold perpetrators accountable. Furthermore, the global nature of the internet complicates the enforcement of laws, as deepfake content can be easily shared across borders.

Combating Deepfake Nudes: The Need for Collective Action:

To combat the disturbing impact of deepfake nude generators, a multi-faceted approach is required. Technology companies must invest in developing advanced detection algorithms capable of identifying deepfake content promptly. Social media platforms should implement stricter policies to prevent the spread of non-consensual explicit content and provide support mechanisms for victims. Governments need to enact comprehensive legislation that criminalizes the creation and distribution of deepfake nudes, ensuring that perpetrators face appropriate legal consequences.

Empowering Victims and Raising Awareness:

Empowering victims is crucial in mitigating the impact of deepfake nude generators. Providing resources such as counseling services, legal aid, and support groups can help victims navigate the aftermath of such traumatic experiences. Additionally, raising awareness about the existence and potential dangers of deepfake technology is essential to educate individuals on how to protect themselves and their digital identities.

Conclusion:

The rise of deepfake nude generators poses a significant threat to personal privacy, mental well-being, and professional lives. The distress experienced by victims demands urgent action from technology companies, social media platforms, governments, and society as a whole. By implementing robust preventive measures, raising awareness, and providing support to victims, we can collectively limit this harm and protect individuals from the consequences of deepfake nudes.